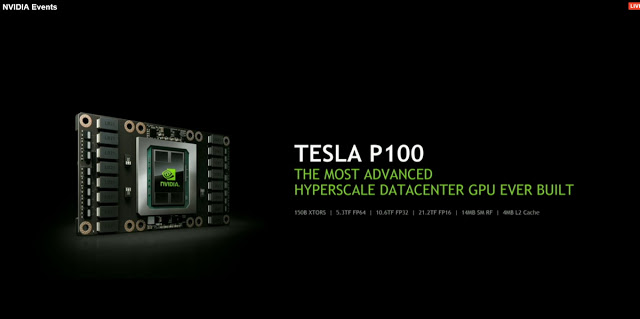

The Nvidia Tesla P100 is the first full-size Nvidia GPU based on the TSMC 16nm FinFET manufacturing process—like AMD, Nvidia has been stuck using an older 28nm process since 2012—and the first to feature the second generation of High Bandwidth Memory (HBM2).

The chip has roughly 15 billion transistors, about three times as many as most processors or graphics chips on the market, and takes up about 600 square millimeters. It peaks at 21.2 teraflops of half-precision throughput.

The P100 reaches 21.2 teraflops of half-precision (FP16) floating point performance, 10.6 teraflops of single precision (FP32), and 5.3 teraflops of double precision (FP64, which runs at half the FP32 rate). By comparison, the Titan X and Tesla M40 offer just 7 teraflops of single precision floating point performance.
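The three throughput figures are consistent with the usual peak-throughput estimate of cores × 2 operations per fused multiply-add × clock speed. The sketch below is a rough check rather than an official derivation. The count of 56 enabled SMs comes from this article, while the 64 FP32 cores per SM and the 1480 MHz boost clock are assumptions taken from Nvidia's published P100 specifications.

```python
# Rough peak-throughput estimate for the Tesla P100.
# From the article: 56 enabled streaming multiprocessors (SMs).
# Assumptions (not stated in the article): 64 FP32 cores per SM and a
# 1480 MHz boost clock.
ENABLED_SMS = 56
FP32_CORES_PER_SM = 64      # assumption
BOOST_CLOCK_HZ = 1.48e9     # assumption
FLOPS_PER_FMA = 2           # a fused multiply-add counts as two operations


def peak_tflops(sms, cores_per_sm, clock_hz):
    """Peak teraflops = cores * operations per cycle * clock."""
    return sms * cores_per_sm * FLOPS_PER_FMA * clock_hz / 1e12


fp32 = peak_tflops(ENABLED_SMS, FP32_CORES_PER_SM, BOOST_CLOCK_HZ)
fp16 = fp32 * 2   # FP16 runs at twice the FP32 rate
fp64 = fp32 / 2   # FP64 runs at half the FP32 rate

print(f"FP16: {fp16:.1f}  FP32: {fp32:.1f}  FP64: {fp64:.1f} TFLOPS")
```

Under those assumptions the estimate rounds to the 21.2, 10.6, and 5.3 teraflops quoted above.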

Nvidia has also built a 170-teraflop DGX-1 supercomputer using the Tesla P100 chip.
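The 170-teraflop figure lines up with the P100's half-precision number if the DGX-1 holds eight P100 cards. The GPU count is not given in this article, so treat it as an assumption in the quick check below.

```python
# Aggregate FP16 throughput of a DGX-1.
P100_FP16_TFLOPS = 21.2   # from the article
GPUS_PER_DGX1 = 8         # assumption: not stated in the article

dgx1_fp16_tflops = P100_FP16_TFLOPS * GPUS_PER_DGX1
print(f"{dgx1_fp16_tflops:.1f} TFLOPS")  # about 170 after rounding
```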

Memory bandwidth more than doubles over the Titan X to 720GB/s thanks to the wider 4096-bit memory bus, while capacity goes up to 16GB. Interestingly, the Tesla P100 isn’t even a fully-enabled version of Pascal; it’s based on the company’s new GP100 GPU, with 56 of its 60 streaming multiprocessors (SM) enabled. The GP100 die, with a surface area of 610 square millimetres, is roughly the same size as the GM200 Titan X. Rather than shrink down the die thanks to the smaller 16nm process, Nvidia has instead chosen to simply fill the same space up with a lot more transistors—15.3 billion of them to be precise—almost doubling that of the top-end GM200 Maxwell chip.
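A few derived numbers help put these specifications in context. The sketch below uses only figures quoted in this article. The per-pin data rate is a back-of-the-envelope value implied by the bandwidth and bus width, not an official HBM2 specification.

```python
# Derived figures from the specifications quoted in the article.
BUS_WIDTH_BITS = 4096
BANDWIDTH_GB_S = 720
TRANSISTORS = 15.3e9
DIE_AREA_MM2 = 610
ENABLED_SMS, TOTAL_SMS = 56, 60

# Bandwidth (GB/s) * 8 bits per byte, spread across every pin of the bus.
per_pin_gbit_s = BANDWIDTH_GB_S * 8 / BUS_WIDTH_BITS

# Transistor density in millions per square millimetre.
density_m_per_mm2 = TRANSISTORS / DIE_AREA_MM2 / 1e6

# Share of the full GP100 die that is active on the Tesla P100.
enabled_fraction = ENABLED_SMS / TOTAL_SMS

print(f"Implied data rate: {per_pin_gbit_s:.2f} Gbit/s per pin")
print(f"Density: {density_m_per_mm2:.1f} million transistors per mm^2")
print(f"Enabled SMs: {enabled_fraction:.1%}")
```

The implied rate of about 1.4 Gbit/s per pin shows that the bandwidth gain comes from the very wide bus rather than from fast individual pins.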

The NVIDIA® DGX-1™ is the world’s first purpose-built system for deep learning with fully integrated hardware and software that can be deployed quickly and easily. Its revolutionary performance significantly accelerates training time, making the NVIDIA DGX-1 the world’s first deep learning supercomputer in a box.

With OEM Tesla P100-equipped systems not set to ship until Q1 of 2017, the DGX-1 will be the only way for customers to get their hands on a P100 for the next couple of quarters. The systems will be production quality, but they are nonetheless initially targeted at early adopters who want or need P100 access as soon as possible, with a price tag to match: $129,000.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.
