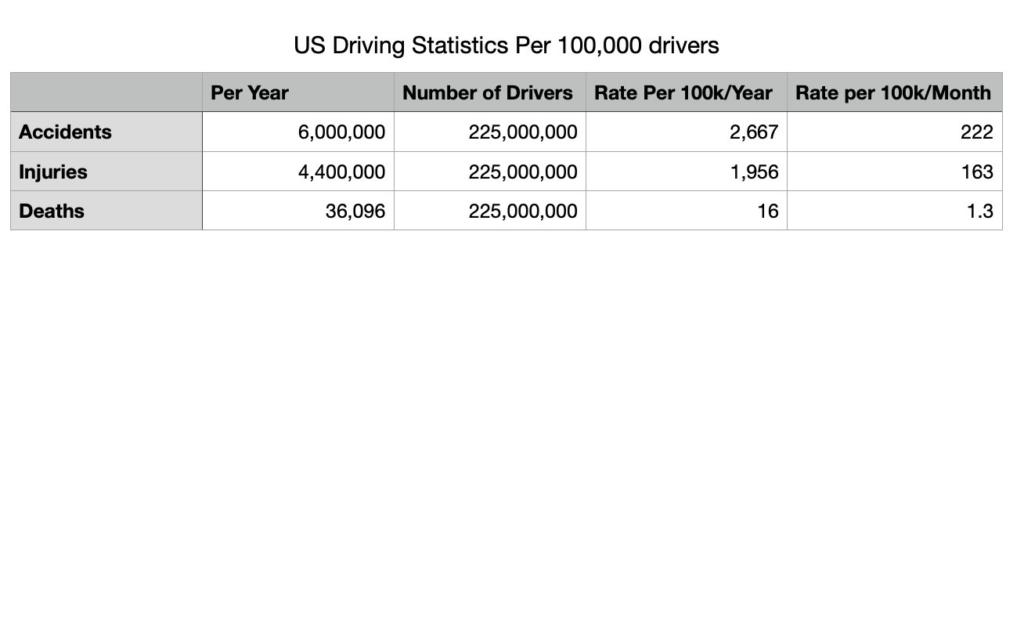

Tesla Full Self Driving beta is being used by over 100,000 people. Normally 100,000 US drivers have 12.4 fatal accidents each year. This is over one fatality per month. Normally 100,000 drivers would have 163 accidents with injuries each month. There are so far no reported injury or fatality accidents after over four months of 60,000+ users and a month or so of over 100,000 users.
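The baseline arithmetic behind these figures can be sketched as follows. This is a rough illustration only: the exposure periods (60,000 users for four months, then 100,000 users for one month) are one reading of the sentence above, and the sketch assumes every user drives as much as an average US driver and has FSD engaged the whole time, neither of which the article establishes. The names `expected_events` and `PERIODS` are hypothetical.

```python
# Baseline figures quoted in the article, per 100,000 US drivers.
FATAL_PER_YEAR = 12.4
INJURY_PER_MONTH = 163.0


def expected_events(rate_per_100k_per_month, users, months):
    """Expected event count for `users` drivers over `months` at the baseline rate."""
    return rate_per_100k_per_month * (users / 100_000) * months


# Assumed exposure (not stated precisely in the article):
# 60,000 users for 4 months, then 100,000 users for 1 month.
PERIODS = [(60_000, 4), (100_000, 1)]

fatal_per_month = FATAL_PER_YEAR / 12  # convert the yearly figure to monthly

expected_fatal = sum(expected_events(fatal_per_month, u, m) for u, m in PERIODS)
expected_injury = sum(expected_events(INJURY_PER_MONTH, u, m) for u, m in PERIODS)

print(f"expected fatal accidents at baseline:  {expected_fatal:.1f}")
print(f"expected injury accidents at baseline: {expected_injury:.0f}")
```

Under those assumptions the baseline works out to a few fatal accidents and several hundred injury accidents over the period. The result scales directly with how much of the driving was actually done with FSD engaged.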

Human driving is the problem. ADAS makes driving safer. 100,000 people are using FSD beta, there have been zero accidents that involve injuries/death. Statistically in the US, the average 100,000 drivers have 222 accidents, with 163 injuries/1.2 deaths each month. pic.twitter.com/YJ8ybGBjeS

— Alan Dail⚡️🔋🇺🇦 (@alandail) April 20, 2022

SOURCES – National Highway Traffic Safety Administration

Written by Brian Wang, Nextbigfuture.com (Brian owns shares of Tesla)

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

While I'm not saying autopilot is not safer than no autopilot, there are many confounding factors that must be taken into consideration before judgement.

Teslas are very crashworthy, particularly those with structural battery packs and front and rear castings; the pickups will be more so. They have a lower center of gravity, so it's more difficult to roll them. It's nearly impossible to go into a skid, unless the car is travelling sideways.

Now, this will trigger the woke among us. Teslas are disproportionately owned by "white males" over 30 with a good education and a "responsible" job, or who are retired. This demographic is much less likely to crash than others. Few people learn to drive using a Tesla at any age.

That may well be true, but even if you control for demographics, you still get a big difference.

Looking at accidents among Tesla drivers, you see a significantly lower figure for accidents that took place on Autopilot or Full Self-Driving than for accidents that took place when drivers were driving manually.

No, of course not. Tesla has fatalities regularly, and they always disengage any systems a microsecond before impact to game the numbers.

From Tesla,

To ensure our statistics are conservative, we count any crash in which Autopilot was deactivated within 5 seconds before impact, and we count all crashes in which the incident alert indicated an airbag or other active restraint deployed.

Tesla was already voluntarily doing this. I believe that there is a move by regulators (CA or NTSB?) to require reporting of accidents within a longer time period of disengagement.

There are more fatalities with FSD than without. It is very dangerous and obviously has nothing to do with autonomy because, unlike the Cruise Origin, there is a steering wheel and the requirement of a squishy human with insurance.

Boring Co is incapable of using FSD to navigate a tunnel. It is far from being an autonomous system, more like a religious donation to the leader.

Will be interesting to follow GM Cruise and the Origin autonomous vehicle; unlike FSD, it requires no human in the vehicle.

Assuming GM can scale production of the Origin vehicle, what will that be worth to GM, one T?

Please supply a source indicating a fatality with FSD. Not autopilot.

Tesla FSD is perfectly capable of driving in the Boring Co tunnel, as is autopilot but they are not yet approved for that by regulators. FSD is currently not able to reliably handle the unpredictable station environment where there is a complex mix of vehicles and pedestrians loading and unloading.

The most you can say at this point is that the data isn't inconsistent with being safer than human.
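One way to make "isn't inconsistent with" concrete is the upper confidence bound on an event rate when zero events have been observed (the so-called rule of three, roughly 3 divided by the exposure at 95% confidence). The sketch below assumes accidents follow a Poisson process. The exposure value is a placeholder, since the real number of driver-months with FSD engaged has not been published, and `zero_event_upper_bound` is a hypothetical helper name.

```python
import math


def zero_event_upper_bound(exposure, confidence=0.95):
    """Upper confidence bound on a Poisson rate after observing zero events.

    Solves exp(-rate * exposure) = 1 - confidence for rate.
    The result is in events per unit of `exposure`.
    """
    if exposure <= 0:
        raise ValueError("exposure must be positive")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    return -math.log(1.0 - confidence) / exposure


# Placeholder exposure, in units of 100,000 driver-months with FSD engaged.
# The true figure is unknown; try several values to see how the bound moves.
for exposure in (3.4, 0.34, 0.034):
    bound = zero_event_upper_bound(exposure)
    print(f"exposure {exposure:>6}: rate below {bound:.1f} per 100k driver-months (95%)")
```

The bound only says what rate the zero-accident observation can rule out for the drivers who were actually in the beta. It says nothing about whether those drivers are comparable to the average US driver.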

Pretty much this. Since Tesla selected drivers based on their superior "Safety Score", comparing this sample population to the average US population is meaningless. This article is a complete failure in critical thinking.

Because environmental factors are a hidden variable in safety score, you can't even use interventions as a metric. A person who drives down empty roads in the middle of nowhere will have a higher safety score than the same person driving in downtown LA. Therefore, unless controlled for, the sample of drivers is likely experiencing less challenging driving environments than the average driver.

The only conclusion you can draw at the moment is that it hasn't made safe drivers noticeably worse.

Yep, my thoughts exactly

Selection bias

Is the Tesla claim of zero injuries and deaths reliable?

I just looked up the 2019 statistics here in Sweden.

Here we have about:

4 deaths per 100,000 cars per year.

318 injured per 100,000 cars per year.

35 seriously injured per 100,000 cars per year.

In 2021, there were a record low 192 fatalities. Of these, 115 were sitting in cars, trucks etc. and 77 were unprotected (bikes, walkers, motor bikes etc.).

I think these numbers may be "best in class" for the civilized world. The numbers continue to improve every year and can be interesting in this context to show how far you can get with old fashioned human drivers and no AI self driving.

Sweden has worked systematically since 1997 (following a parliamentary decision) on addressing the top causes of traffic accidents. The goal was to halve the fatalities/injuries between 2007 and 2020, and this goal has been exceeded.

(continued from above)

The biggest barrier to adoption of self driving cars probably will not be making self driving work. It probably will be to get past the human inability to rationally evaluate how safe self driving cars are. Self driving cars will never be perfect. The trouble is that they will make different mistakes than humans will make. Sensationalist media and predatory lawyers will capitalize on those different mistakes and make tons of undeserved trouble for the self driving car manufacturers.

The self driving car manufacturers need to engage in a large and continuing effort to promote the ideas that self driving cars cannot be perfect, but they can have better overall safety records than human driven cars. That self driving cars will make different mistakes than human drivers make, and while that can make the self driving cars look silly when comparing some incidents to what a human driver would do, it does not make self driving cars less safe than human driven cars, over all (as long as the overall safety record of the self driving cars is better than the overall safety record of the human driven cars).

I am not claiming that self driving cars have reached the point of a better safety record yet. I don't have enough data to determine that. But I do believe they are very close and will soon reach that safety level. But it won't matter unless the public attitude is educated to evaluate the safety rationally.

Great point. It will be little solace that autonomous cars are legitimately 5x, 10x or 50x safer than human drivers if it’s your loved one that gets run over.

There is also the concern (certainly not the only one) that reckless human drivers will be even more reckless if they know with certainty that the autonomous car will yield every time.

My solution – Allow car video and telemetry to be used to cite reckless drivers. Imagine if someone was menacing you or cut you off and you could submit the video and telemetry by pushing a button. If the evidence proved culpability the person would get a citation. People would feel less urge to road rage in response if they knew there would be consequences for the other driver.

Well, that recent incident of a Tesla barging into a Cirrus Vision Jet on an airport tarmac during a smart summon makes me think there are still some major bugs to be worked out…

https://www.theverge.com/2022/4/22/23037654/tesla-crash-private-jet-reddit-video-smart-summon

A good omen but not quite there yet. If we just followed the ultra-responsible drivers Tesla selected for the FSD beta, they'd likely have far fewer accidents than average too, even without the extra awareness that comes from monitoring FSD. The case might be stronger if there are almost no interventions, but we don't know that rate.

For now, it's hard to call it "less safe" if there are zero accidents. You might argue (and it's a valid argument) that we should know how many man-hours of enabled FSD are logged before the comparison can be considered trustworthy, but right now it's strictly true that FSD is safer than manual driving.

But these 100k FSD users probably do not use FSD every time they go for a drive, right? So it's hard to say if it is safer or not. If it were only used 1% of the time, then the answer would probably be less safe than human drivers…?
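How much the zero-accident observation tells us depends heavily on that usage fraction. A minimal sketch, assuming accidents follow a Poisson process at the article's baseline of 163 injury accidents per 100,000 drivers per month, and assuming a total exposure of 3.4 units of 100,000 driver-months (60,000 users for four months plus 100,000 users for one month, which is an interpretation rather than a published figure). The function name `prob_zero_events` is hypothetical.

```python
import math

INJURY_PER_MONTH_PER_100K = 163.0  # baseline quoted in the article
DRIVER_MONTHS_100K = 3.4           # assumed total exposure, see lead-in


def prob_zero_events(usage_fraction):
    """Return (expected injury accidents, probability of seeing none).

    `usage_fraction` is the share of driving done with FSD engaged.
    Assumes FSD driving is exactly as risky as the human baseline.
    """
    if not 0 <= usage_fraction <= 1:
        raise ValueError("usage_fraction must be between 0 and 1")
    expected = INJURY_PER_MONTH_PER_100K * DRIVER_MONTHS_100K * usage_fraction
    return expected, math.exp(-expected)


for fraction in (1.0, 0.1, 0.01, 0.001):
    expected, p_zero = prob_zero_events(fraction)
    print(f"usage {fraction:>6.1%}: expected {expected:8.2f}, P(zero) = {p_zero:.4f}")
```

If the printed probability of zero is small, then zero reported accidents would be surprising at human-baseline risk. If it is large, zero accidents is roughly what you would expect anyway and the observation carries little information.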

I wonder, is it the ADAS, the 100,000 drivers selected to beta test, or the negative feedback they get if they drive in other than a safe manner?

That is only as long as it is not distracted by Twitter texting.