A team of researchers from China used the Sunway supercomputer to train an AI model with 174 trillion parameters called ‘BaGuaLu,’ which means “alchemist’s pot.” The number of parameters is comparable to the number of synapses in the human brain (about 1,000 trillion). However, human synapses and AI parameters are not equivalent.

In 2020, Microsoft trained a natural language model using 17 billion parameters.

In 2021, Google announced an AI model trained with 1.6 trillion parameters.
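To put these parameter counts side by side, here is a minimal Python sketch that uses only the rounded figures quoted above. The dictionary labels and the helper name `fraction_of_synapses` are made up for illustration, and the comparison is purely numerical, since parameters and synapses are not equivalent.

```python
# Rounded figures quoted in this article; labels are illustrative only.
SYNAPSES_HUMAN_BRAIN = 1000e12  # about 1,000 trillion synapses

models = {
    "Microsoft (2020)": 17e9,    # 17 billion parameters
    "Google (2021)": 1.6e12,     # 1.6 trillion parameters
    "BaGuaLu": 174e12,           # 174 trillion parameters
}


def fraction_of_synapses(params: float) -> float:
    """Return the parameter count as a fraction of the quoted synapse count."""
    return params / SYNAPSES_HUMAN_BRAIN


for name, params in models.items():
    share = fraction_of_synapses(params)
    print(f"{name}: {params:.3g} parameters = {share:.4%} of the synapse count")

# How much larger is the 174-trillion figure than the 2021 Google model?
growth_vs_google = models["BaGuaLu"] / models["Google (2021)"]
print(f"BaGuaLu vs. Google (2021): about {growth_vs_google:.0f}x")
```

On these numbers, the 174-trillion-parameter figure is roughly a sixth of the quoted synapse count and about a hundred times the 2021 Google model.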

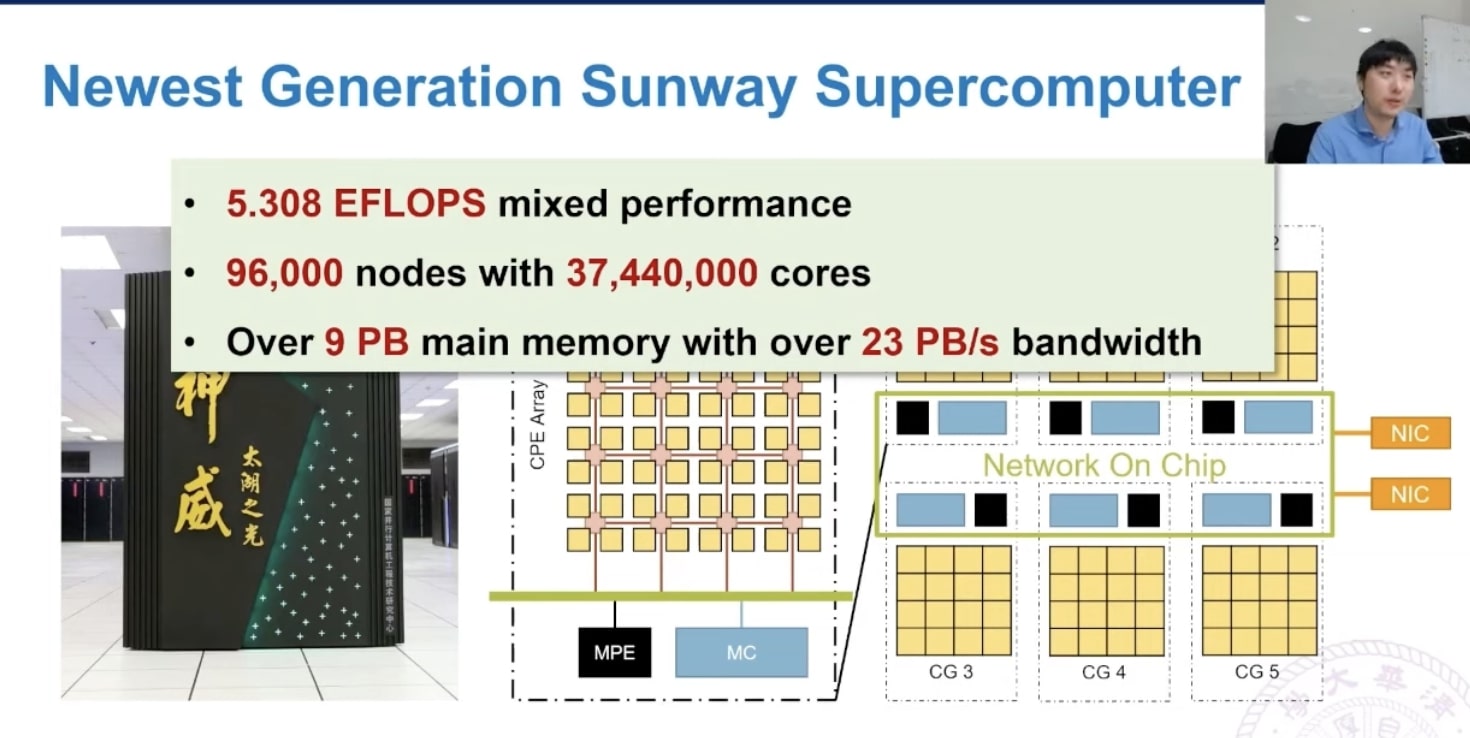

The Sunway supercomputer has a speed of 5.3 exaflops, or 5.3 billion billion floating-point operations per second, using FP32 single-precision operations. According to the researchers, it has 37 million CPU cores, four times as many as Frontier, and nine petabytes of memory. They also claim that its 96,000 semi-independent computer systems, called nodes, resemble the power of a human brain. Communication between these nodes takes place at more than 23 petabytes per second.
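A rough back-of-envelope check on these hardware figures can be sketched in Python. It uses only the rounded numbers quoted above, treats a petabyte as 10^15 bytes, and assumes (this is not stated in the article) that every parameter is stored once as a 4-byte FP32 value. It ignores gradients, optimizer state, and activations, so it is a lower bound on training memory, not a description of how the model was actually laid out.

```python
# Rounded figures quoted in the article.
CORES = 37e6            # 37 million CPU cores
NODES = 96_000          # semi-independent nodes
MEMORY_BYTES = 9e15     # nine petabytes, assuming 1 PB = 10**15 bytes
PARAMS = 174e12         # 174 trillion parameters
BYTES_PER_FP32 = 4      # assumption: one FP32 copy of each parameter


def summarize():
    """Return (cores per node, memory per node in GB, weight storage in PB)."""
    cores_per_node = CORES / NODES
    mem_per_node_gb = MEMORY_BYTES / NODES / 1e9
    weights_pb = PARAMS * BYTES_PER_FP32 / 1e15
    return cores_per_node, mem_per_node_gb, weights_pb


cores_per_node, mem_per_node_gb, weights_pb = summarize()
print(f"Cores per node:        about {cores_per_node:.0f}")
print(f"Memory per node:       about {mem_per_node_gb:.1f} GB")
print(f"FP32 weights alone:    about {weights_pb:.3f} PB")
print(f"Share of total memory: about {weights_pb * 1e15 / MEMORY_BYTES:.1%}")
```

Under these assumptions the weights alone would take about 0.7 petabytes, a small fraction of the nine petabytes quoted, which leaves room for the additional state that training requires.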

Here is a research paper describing the pre-training of the BaGuaLu model.

BaGuaLu: Targeting Brain Scale Pretrained Models with over 37 Million Cores

Abstract

Large-scale pretrained AI models have shown state-of-the-art accuracy in a series of important applications. As the size of pretrained AI models grows dramatically each year in an effort to achieve higher accuracy, training such models requires massive computing and memory capabilities, which accelerates the convergence of AI and HPC. However, there are still gaps in deploying AI applications on HPC systems, which need application and system co-design based on specific hardware features.

To this end, this paper proposes BaGuaLu, the first work targeting training brain scale models on an entire exascale supercomputer, the New Generation Sunway Supercomputer. By combining hardware-specific intra-node optimization and hybrid parallel strategies, BaGuaLu enables decent performance and scalability on unprecedentedly large models.

The evaluation shows that BaGuaLu can train 14.5-trillion-parameter models with a performance of over 1 EFLOPS using mixed-precision and has the capability to train 174-trillion-parameter models, which rivals the number of synapses in a human brain.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

AI technology is still in the very early stages of detailed understanding. We only know the architecture and structure of the brain. The neocortex and the detailed structure of the neurons and synapses are very complex, and we need 30 to 50 years to have a complete understanding of the neocortex.

The parameters you are talking about can be emulated using very high-performance graphics processors.

With the neocortex, people can tell truth from propaganda.

Not bad for 14nm process, which I believe is SOTA in China.

Who Dares Wins.