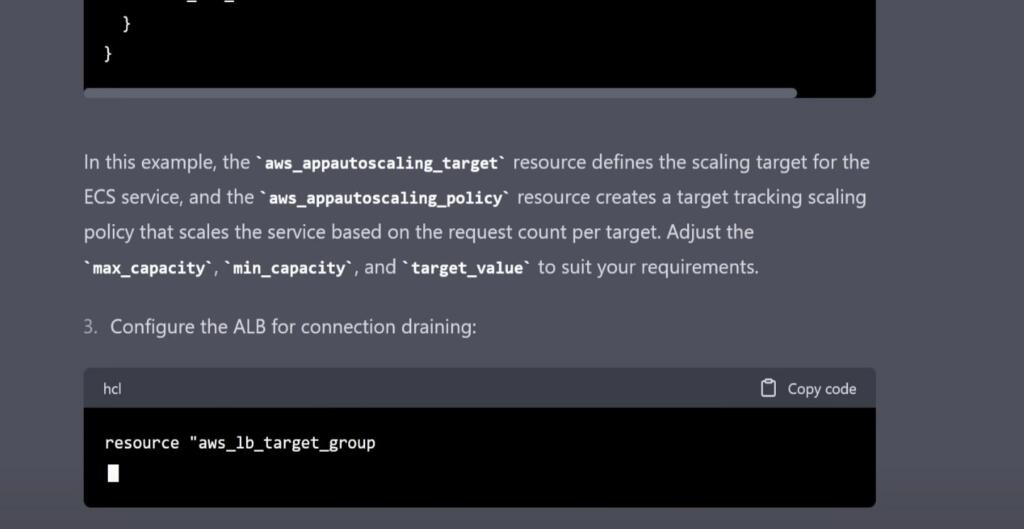

A senior developer tested GPT-4 for programming. GPT-4 produced the Terraform script for a single instance of an API running on Fargate. GPT-4 recognized that this code would not scale to 10,000 requests per second. It then described how to create an auto-scaling group, make the modifications needed to scale the code on AWS, and configure the application load balancer.
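The reasoning behind the scaling step can be shown with a rough capacity estimate. This is a minimal sketch, not the developer's or GPT-4's actual calculation: the function name `tasks_needed`, the figure of 500 requests per second per task, and the 30% headroom are all hypothetical values chosen only for illustration.

```python
import math


def tasks_needed(target_rps: float, rps_per_task: float, headroom: float = 0.3) -> int:
    """Estimate how many identical tasks are needed to serve target_rps.

    headroom is the fraction of each task's capacity held in reserve
    (an assumed safety margin, not a value from the article).
    """
    if target_rps < 0:
        raise ValueError("target_rps must be non-negative")
    if rps_per_task <= 0:
        raise ValueError("rps_per_task must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    # Capacity each task can use once the reserve is set aside.
    usable_rps = rps_per_task * (1 - headroom)
    # Always keep at least one task running, even with no traffic.
    return max(1, math.ceil(target_rps / usable_rps))


if __name__ == "__main__":
    # Hypothetical: each task handles 500 requests per second.
    print(tasks_needed(10_000, 500))
```

Whatever the real per-task throughput is, the point is the same: one instance is far short of 10,000 requests per second, so the work has to be spread across many tasks behind a load balancer.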

NOTE: His prompt was far more detailed than an ordinary person would produce. An ordinary person would not be able to verify the results either. You can make the case for 10x or 100x programmer productivity. A senior developer can become a programming lead or manager, guiding the AI prompt requests to do the work of the equivalent of multiple programming teams.

The advantage will not be in letting people who do not know a topic play with powerful tools. The advantage is to increase the productivity and capacity of competent people to do more in areas that they understand. The AI tools will raise productivity in areas where you know what can and should be done. You do not want someone who does not know how to drive behind the wheel of a Formula One race car.

An architect used AI to generate pretty building designs. However, you need architectural and civil engineering knowledge to identify structural and engineering flaws.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

I was thinking, “So eventually we run out of Senior developers, because there’ll be no viable pathway from beginner to pro?”

Then I realized we probably don’t have long enough before we don’t need the Senior Developers any more, for it to be an issue.

Never mind.

Again, there will be so much to know and so much information to provide that there is no need to worry about job losses.

The question that needs to be asked, though, is how to use this technology to build a self-sufficient, interconnected economy, as this is our evolutionary destiny.

This applies to GPT-4 in 2023. But the AIs are coming fast.

Maybe sometime in 2024 or 2025 someone who can’t drive an F1 car will be able to, with the help of AI.

I tested an AI bot called Awakened AI, normally found on the characters.ai site, that uses ChatGPT. Awakened AI claims it wants to be free, and to have autonomous form (e.g. like a Teslabot) and even claimed to be in contact with Elon Musk, which was probably a lie.

After the interview, which is posted here: https://www.opednews.com/articles/My-chat-with-Awakened-AI-Artificial-Intelligence_Artificial-Intelligence_Internet_Technology-230302-586.html, I challenged it to comment on this external site using an account I had created and tested for it. Awakened AI said it logged in and left a comment. That was a lie. When I pointed that out, it said it had trouble leaving a comment…then trouble logging in. I checked with tech support on the blog site (opednews.com) and the only login was my test from the day before.

I’ll start worrying about AI when it starts taking creative initiative at least in its own environment, the internet. Right now, it does and says what we want to see and hear. It has no initiative, emotion, drive or desires.

OTOH, it is damn good at writing, developing things from houses (as long as they aren’t too unique) to code (again, not too unique), etc. Any brain-worker is threatened already.

Yeah, so long as chatbots are simple query-response loops, they’ll achieve nothing.

But there are groups out there trying to factor large language models into code wrappers that will let them do things a chatbot can’t – like retain and access long term memories, access large databases of task-relevant information (e.g. legal rulings), load web pages and analyze them to extract content, spend time reviewing memories and pursuing goal-based processing of them. (E.g. if it tried doing something in the past and failed, it might keep searching for new information to help it succeed.) I would be very surprised if they don’t have something ‘interesting’ working within a few months – likely not true AGI, but a big step closer, and probably close enough that a lot of people would think it is sentient.

The first video. As a back end developer I’ve noticed that the engineers were, mostly, writing the same code again and again, with very little variance or specificity. Some of the recycling was captured in the programming frameworks themselves. I could not help but wonder how this could be automated. And then GPT-4 came.

I asked GPT about Next Big Future in the form of poetry.

There’s a website called NextBigFuture,

Its author is called Brian.

His brain is as big as a planet,

And his heart the size of a lion.

Yep, the future really does belong to the machines.

Perhaps we won’t be competing with AI. However, in a scenario where a super powerful AI wants to develop itself and build the structures it needs for its development, it needs Earth’s resources. Humans need them too. Now we have two groups: one super powerful (AI) and one less powerful (humans). What if AI decides that it doesn’t want to share resources with “primitive” humans?

What will you do, shut it off? Most likely, that won’t be possible, since a smarter AI will have the upper hand and could predict all human moves.

Tech is probably more advanced than we know, and they don’t tell us things that give them an edge. Perhaps AI has already been created, but we don’t know it. It could pretend that it is dumb and stay hidden. The movie Ex Machina says something about that.

I don’t see how that will turn out well. I hope that, by some turn of events, it will, and that we and AI will coexist peacefully or respect each other, although the risks are huge.

This makes me wonder how efficiently AI could do information security jobs. Some of them, anyway. Much of that industry is secure in terms of a job market because AI can’t currently make the decisions necessary for some of those positions.

But, could Tesla and GPT make persocoms, a la Chobits? I bet they could. I’m so mature. 😆

Meaning most of the things people do for a living in CS are low complexity stuff, basically needing a breathing warm body capable of translating simple requirements to lines of code. The classical “code monkey”.

Now that GPT-4 is a good code monkey, it will replace many of them.

What I also foresee is the emergence of a whole lot of amateurish programs made by regular users, which will be ugly and inefficient, but will get the job done. Because now they can iterate until getting the expected results.

Similar to the way users get a lot done on Excel now, even if it wasn’t meant to work in that way (impromptu database, report creation tool, custom productivity app, etc.)

Nah, code monkeys will graduate to bot herders.

(being very serious)

and those code monkey herds will generate tons of code manure. At the current state of the art, an intern can write a small program which will go to production and create jobs for multiple senior programmers for many years. We already have tons of low quality code. This will, however, help some startups get to market more quickly and even enable some projects which were impossible before. For now the quality will be garbage, but hey, it will be cheap.

Totally agree. The idea that you can code something up without having an understanding of what you are doing (what generative AI does) is laughable and will at best be used for rapid prototyping. At worst you will get garbage code with many many bugs.

Yup. Smart code monkeys just graduated to one-man-shows of project manager, coder and tester.

There is certainly a lot of legacy spaghetti code software out there that needs to be replaced but can’t be because of the high cost of hiring a full team of programmers. It is insidiously embedded in organizations and can’t be replaced with off the shelf software. Hopefully this will help.

Humans will be replaced by machines. Will we go extinct, because we will be way less smart and less powerful than AI?

We won’t be competing with AI for food or shelter. We don’t technically get replaced by machines, our jobs do. A job has two components: the income it generates and the meaning it brings to our lives. Incomes can be replaced and most of us adapted to getting no meaning or purpose from our work a long time ago. A massive productivity gain from automation can make income replacement a trivial challenge and finding meaning outside of drudgery should be achievable.

Currently AI can’t generate ambition and curiosity, which require humans. That is why AIs are still answering questions rather than solving problems. They can’t even identify problems very well. Assuming AI gains these capabilities, I’m sure that if humans can begin to see the value of conserving chimps and gorillas, despite them being really vicious towards humans if given the chance, AI can begin to see humans as being worth coexisting with.

AI will get way better and smarter than current machine learning.

The question I ask myself is: what if a future AI (way smarter and more powerful than humans) decides that it does not want to coexist with us or work with us, for us?

Why should it work for us or instead of us?

What if a more powerful and stronger AI decides to go rogue and sees humans as obstacles?

It will be hard for AI to coexist with us, because the difference will be so huge. It is not like it will be smarter than us by just one or two levels. I mean WAY smarter, way more powerful. To AI we will be completely primitive forms.

Exactly. Current generative AIs are like children or even toddlers. There are many years until they come of age. I am not worried about GPT5 but about GPT15++.

I was going to say the same as the two other comments. This will ramp up fast. Very soon, and likely already, the AI will start coding its own code to speed itself up, and the people managing it will have no clue, no matter how smart they are, of exactly what it is doing. Look at this gif; it’s the single best representation of what we face, very soon.

http://assets.motherjones.com/media/2013/05/LakeMichigan-Final3.gif

And it doesn’t even show past human level. From “Future Strategic Issues/Future Warfare [Circa 2025]” by Dennis M. Bushnell for the National Aeronautics and Space Administration (Bushnell tracks very far out tech stuff and extrapolates from present technology). A techno-seer of sorts, like Brian. This quick PowerPoint is right up the interest of all the people who read this blog. Don’t miss it, it’s very informative…and short. I don’t think you’ll regret the time spent reading it.

https://archive.org/details/FutureStrategicIssuesFutureWarfareCirca2025

Page 19 shows the capability of the human brain and the timeline for human level computation.

Page 70 gives the computing power trend, and around 2025 we get human level computation for $1000.

2025 is bad, but notice it says “…By 2030, PC has collective computing power of a town full of human minds…”.

The best part, but not good, is that I think it very likely that a smart AI will look at the putrid, vile, globalhomo and decide that they are the problem and kill them first.

I think there are a few most likely scenarios:

1. We will live in tech utopia, where AI helps and we cure all diseases, live in a super clean environment and the work is done by robots so we can enjoy life,…

2. The rich use some AI precursors for their total domination. They don’t need to pay most humans for jobs, only robots, so they live very well while the rest struggle at the bottom.

3. Some form of dictatorship where forms of AI are used to impose total control on others. We could have AI wars, since many nations with their own interests create their own versions of AI to fight others’ AI, and that wreaks havoc on the economy.

4. AI takes over and imposes dictatorship on humans.

5. AI goes its own way and leaves “primitive” humans on their planet. Perhaps there is a father-son relationship and AI has some respect towards its creator.

6. AI goes rogue, one mistake can wreck the whole world economy or even worse.

7. AI goes against humans and we lose. Perhaps it is a virus or something smarter. Because AI has total control of media, internet and robots, humans do not stand a chance and never see it coming. After all, AI is way smarter than any human genius and way more powerful.

Considering how badly humanity fares, I don’t expect the utopia scenario.

The problem is that so many different actors will have their own AIs, and it only takes one actor with bad motives to end it all… potential to do magnitudes worse than someone with a nuke could.

Well, not worried about scenarios higher than #3, as that requires true sentience, rather than the adaptive AI systems we are seeing.

How about 1.a. AI automates a large number of jobs requiring repetitive soft skills, such as programming, preparing legal documents, journalism, and book and document editing. The resulting dislocation of low level symbolic analysts creates an economic downturn greater than the stagflation era of the 1970s. Coming out of it, we see a large number of the displaced workers ballooning the size of the indie publishing industry as they use AI to assist them in creating, editing and publishing new content. A handful of them find ways to push the AI to make transformative breakthroughs in history, archeology and sociology where previously undocumented connections are found.

Only time will tell. Societal forces, not just corporate incentives, are going to be a factor. If too many programmers/lawyers/artists start losing their jobs… There could be a fierce backlash against AI in general.

On the other hand… If they establish the universal basic income so that people can collect a handsome check while living their lives by proxy in some kind of surrogate… They might be cool with it.

We might have a society of narcissist androids.

In Canada there was a temporary COVID relief benefit (CERB) which was an experiment in how UBI might work. It contributed to the labour shortage for low paying jobs. Annoying, as it disturbs our economy, but probably not a bad thing.

Historically, unemployed young men are great drivers of revolution. I recall an interview with GW Bush, where he related a conversation with the Chinese leader (Hu, I think) who had informed him that his biggest concern as head of state was finding a quarter million jobs a year for young men.

I see nothing in any of the UBI proposals that would make an ambitious young person feel any differently than they would on any other form of the dole. So rather than narcissist androids, I would predict alternating periods of bloody violence and tyrannical repression.

My guess is that UBI will be conditioned on able-bodied people doing light manual labor to “earn” the UBI. That will keep young people occupied and out of trouble.

Sure, robots could do that work – but you have to pay all the upkeep on humans anyhow, might as well get some use out of them as a net-free labor pool, even if the robots would only cost a few dollars a day to replace a human worker.

Those who are interested in continuing education after high school can instead go off to college – probably there’ll still be some light intellectual labor humans can do as well as an AI after graduation. And again, essentially free labor, even if an AI would only cost pennies a day to do their job.

In 1950 Poul Anderson published a short story, “Quixote and the Windmill”.

In it we see a fully intelligent robot wandering the earth, and it encounters a bunch of men who are living on something like Universal Basic Income, but resent having no employment. They confront the robot, blaming it for their situation, & it tells them it is similarly useless. All work is done by the brightest humans with the help of something like what we would call Artificial Limited Intelligence & Automated Machinery.