There are six major AI projects in the race beyond 2 trillion parameter models. There is OpenAI, Anthropic, Google/Deepmind, Meta, a UK government project and a stealth project.

It takes $1-2 billion per year of resources to be in the game. There is $1 billion needed for hardware and that hardware will need to be updated every 2-3 years. There is a need for hundreds of AI specialists and staff and some of the lead people will need $1-2 million and stock options.

There are over a hundred other projects and some of those could step up and impact the race.

GPT-5 should be finished by the of 2023 and released early in 2024. It should have 2-5 trillion parameters.

Anthropic plans to build a model called Claude-Next which should be 10X more capable than today’s most powerful AI. Anthropic has already raised $1 billion and will raise another $5 billion and will spend $1 Billion over the next 18 months. Anthropic estimates its frontier model will require on the order of 10^25 FLOPs, or floating point operations. This is several orders of magnitude larger than even the biggest models today. Anthropic relies on clusters with “tens of thousands of GPUs.” Google is one of the Anthropic funders.

Google and Deepmind are working together to develop a GPT-4 competitor called Gemini. The Gemini project is said to have begun in recent weeks after Google’s Bard failed to keep up with ChatGPT. Gemini will be a large language model that will have a trillion(s) parameters like GPT-4 or GPT-5. They will be using tens of thousands of Google’s TPU AI chips for training. It could take months to complete. Whether Gemini will be multimodal is unknown.

Deepmind has also developed the web-enabled chatbot Sparrow, which is optimized for security similar to ChatGPT. The DeepMind researchers find that Sparrow’s citations are helpful and accurate 78% of the time. DeepMind’s Chinchilla is one of today’s leading LLMs and it was trained on 1.4 trillion tokens.

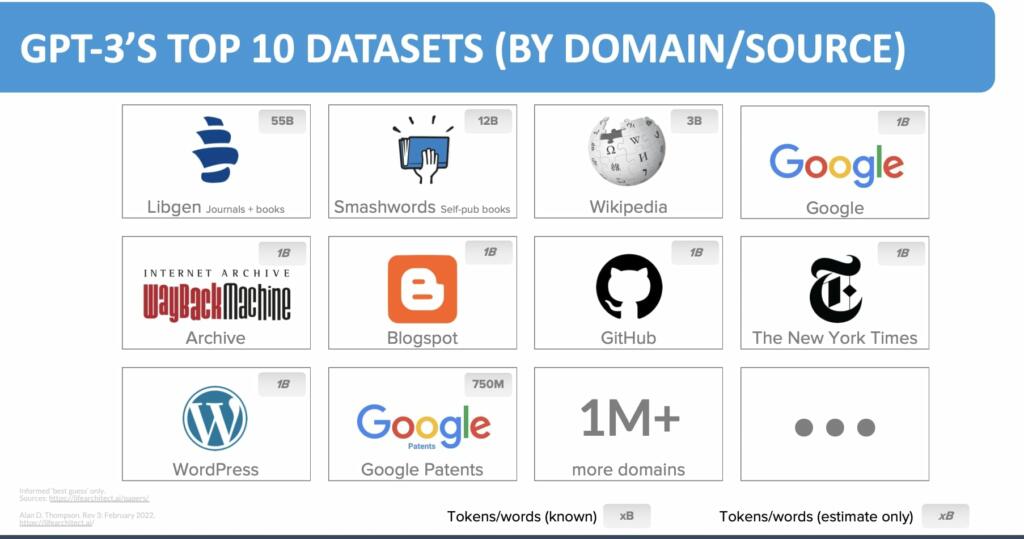

The world’s total usable text data is between 4.6 trillion and 17.2 trillion tokens. This includes all the world’s books, all scientific papers, all news articles, all of Wikipedia, all publicly available code, and much of the rest of the internet, filtered for quality (e.g., webpages, blogs, social media).

There have been emergent capabilities as the larger models have been developed.

There will be the compute power, algorithm improvement to scale models by a thousand to a million times over the next 6 years. Nvidia’s CEO predicted AI models one million times more powerful than ChatGPT within 10 years.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technology and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

I’ve read tensor math is much better for neuron simulation. Are tensor capable processors expensive enough that it makes sense to use GPUs? I suppose power consumption would be lower with tensor processors on a Watt per neuron basis.

The Chinese must be thinking already about something 10x bigger, the Chinese bigger and better mentality.

This are incredible and very scary times for all of us, I think, Whew!

How people will use/abuse AI:

Someone Asked an Autonomous AI to ‘Destroy Humanity’: This Is What Happened:

https://www.vice.com/en/article/93kw7p/someone-asked-an-autonomous-ai-to-destroy-humanity-this-is-what-happened

Things are changing very fast. Top players know, that if they fall behind, they loose a lot, perhaps it is even existential threat for their companies. There is a lot money to be made, primary motivation is still existential threat. A few billions per year shouldn’t be a problem for such large companies,.. Microsoft has the lead and since they own github, they have access to all the code from millions of developers. I don’t need to mention Open-Al.