Alan Thompson is an AI expert who is closely tracking progress toward AGI.

His definition is:

Artificial general intelligence (AGI) is a machine capable of understanding the world as well as—or better than—any human, in practically every field, including the ability to interact with the world via physical embodiment.

And the short version: ‘AGI is a machine which is as good or better than a human in every aspect’

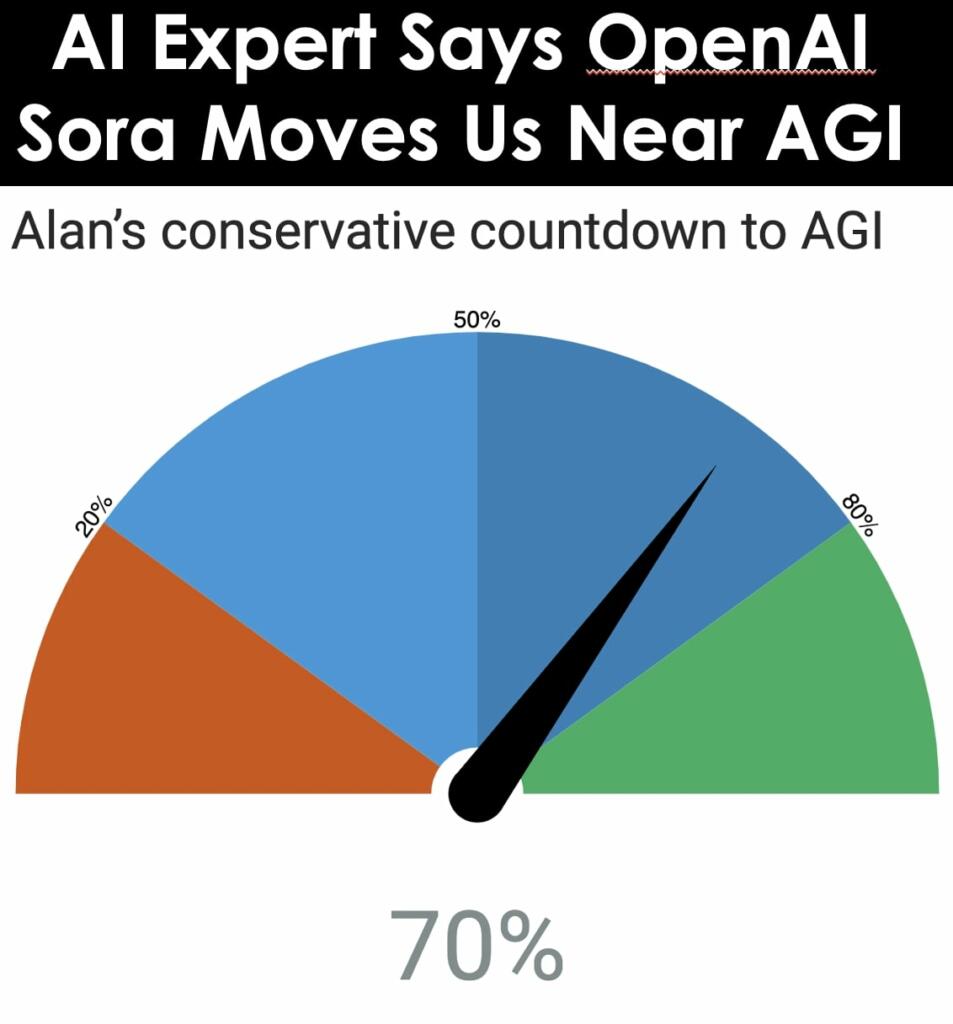

By his scoring, we were at 39% in December 2022 and 61% in December 2023, and are now at 70%.

After @GoogleDeepMind release of Gemini 1.5 and @OpenAI's SORA, lets update our predictions based on @dralandthompson's conservative countdown to #AGI …. now it's a month early. This end of year is going to be sooo interesting pic.twitter.com/9sfrNpOBZk

— Dennis Xiloj (@denjohx) February 27, 2024

End of year update on #AGI, as usual using data from @dralandthompson conservative countdown to AGI. Are we accelerating? last one predicted april, now its january. pic.twitter.com/On5cYSLEgq

— Dennis Xiloj (@denjohx) December 26, 2023

He says that a score of around 80% corresponds to an AI in a robot passing Steve Wozniak’s test of AGI: it can walk into a strange house, navigate the available tools, and make a cup of coffee from scratch.

Powered by Carbon, Phoenix is now autonomously completing simple tasks at human-equivalent speed. This is an important step on the journey to full autonomy. Phoenix is unique among humanoids in its speed, precision, and strength, all critical for industrial applications. pic.twitter.com/bYlsKBYw3i

— Geordie Rose (@realgeordierose) February 28, 2024

OpenAI is building its AI into humanoid robots.

The company just invested in Figure and signed a collaboration agreement to develop next-generation AI models for robots.

Embodied AI is coming in 2024.

But there's more:

Figure also raised $675M at a whopping $2.6B valuation… https://t.co/Cml3YbjkUw pic.twitter.com/QXUGSMbqgC

— Rowan Cheung (@rowancheung) February 29, 2024

Musk Shows Off Major Teslabot Improvements! pic.twitter.com/r2GNEipfQD

— DrKnowItAll (@DrKnowItAll16) February 28, 2024

The OpenAI report on Sora, its complete text, image and video AI, is here.

70%: OpenAI Sora (‘sky’). Text-to-video diffusion transformer that can ‘understand and simulate the physical world in motion… solve problems that require real-world interaction.’

Two additional considerations:

This is not just a text-to-video model.

It is text-to-video, image-to-video, text+image-to-video, video-to-video, video+video-to-video, text+video-to-video,

we can also use Sora to edit a video from a text instruction using SDEdit. "rewrite the video in a pixel art style." pic.twitter.com/sMbV2szpZs

— Tim Brooks (@_tim_brooks) February 16, 2024

and text-to-image (sample).

Sora is also capable of generating images at up to 2048×2048 resolution. here is an image with crazy photorealism generated by Sora pic.twitter.com/oSUyEB5Ph7

— Tim Brooks (@_tim_brooks) February 16, 2024

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends, including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting-edge technologies, he is currently a Co-Founder of a startup and fundraiser for high-potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

It’s been known for 19 years that a necessary but insufficient condition for AGI is to win the Hutter Prize — and the current crop of language models have been consistently failing a vastly simpler test:

Write the shortest program you can that outputs this string:

0000000001000100001100100001010011000111010000100101010010110110001101011100111110000100011001010011101001010110110101111100011001110101101111100111011111011111

Nor is this a “special ability” test: it is at the heart of Solomonoff Induction, which is at the heart of finding optimal foundation models.

The fact that everyone who gets attention at places like NextBigFuture ignores this establishes beyond a reasonable doubt that everyone is barking up the wrong search tree.
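Read in five-character chunks, the target string is 00000, 00001, 00010, … 11111: the integers 0 through 31 written as zero-padded 5-bit binary and concatenated. The minimal Python sketch below checks that reading and compares the length of a one-line generator with the 160-character literal. It is our reading of the puzzle, not the commenter's intended answer; `count_to_31_in_5_bits` and `PROGRAM` are hypothetical names, and a shorter program may well exist, so the one-liner only gives an upper bound on the string's description length.

```python
# Target string copied verbatim from the comment above.
TARGET = "0000000001000100001100100001010011000111010000100101010010110110001101011100111110000100011001010011101001010110110101111100011001110101101111100111011111011111"


def count_to_31_in_5_bits() -> str:
    """Concatenate the integers 0..31, each as a zero-padded 5-bit binary numeral."""
    return "".join(format(i, "05b") for i in range(32))


# A short program that prints the same string (an upper bound, not a proven minimum).
PROGRAM = 'print("".join(format(i,"05b")for i in range(32)))'

if __name__ == "__main__":
    print(count_to_31_in_5_bits() == TARGET)  # checks the 5-bit-counter reading
    print(len(TARGET), len(PROGRAM))          # literal length vs. program length
```

The gap between the two lengths is the point of the exercise: a model that spots the counting pattern can describe the data far more compactly than by repeating it.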

I’m doubtful it will be anywhere near this soon, but if there were a 1% chance that a grand piano might drop on me each time I stepped out the front door of my house, I would start using other doors (and looking up, even so). I buy home insurance based on a much smaller chance of my house being destroyed in any given year.

So I’ll hedge my bets and find a way to profit either way — even if that means not winning quite as big had I bet all one way or the other.

“By his scoring we were at 39% December 2022, 61% December 2023 and now 70%.”

Based on this we should be at about 75% by December 2024?
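A rough check of that question, assuming straight-line growth between the figures quoted above (39%, 61%, 70%) and treating "now" (late February 2024) as month 14 counted from December 2022. Both the linear model and the month-14 placement are our assumptions; the countdown itself makes no such claim.

```python
# Alan Thompson's countdown scores as quoted in the article, keyed by months since December 2022.
# Assumption: the "now 70%" reading (late February 2024) is treated as month 14.
SCORES = {0: 39.0, 12: 61.0, 14: 70.0}


def monthly_rate(start: int, end: int) -> float:
    """Average points gained per month between two scored months."""
    return (SCORES[end] - SCORES[start]) / (end - start)


def project(rate: float, from_month: int = 14, to_month: int = 24) -> float:
    """Straight-line projection from the latest score, capped at 100%."""
    return min(SCORES[from_month] + rate * (to_month - from_month), 100.0)


if __name__ == "__main__":
    slow = monthly_rate(0, 12)   # Dec 2022 -> Dec 2023: 22 points over 12 months
    fast = monthly_rate(12, 14)  # Dec 2023 -> Feb 2024: 9 points over 2 months
    print(round(project(slow), 1), project(fast))  # month 24 = December 2024
```

At the 2022 to 2023 pace the line reaches about 88% by December 2024; at the early-2024 pace it hits the 100% cap. Either way, a straight line passes 75% well before December 2024, though nothing guarantees the countdown grows linearly.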

His definition of AGI has already been inflated into what would have been a definition of ASI. That level of capability is unambiguously superhuman.

Functional AGI is just good enough to operate at a median level in most medium-skilled human jobs and tasks without special assistance. Pretty much what we hope of a generic human being, while expecting to be frequently disappointed.

Replacing most human workers, and justifying 10-trillion-dollar market caps for the companies in the game, doesn’t even require AGI to meet that more modest standard.

Yes, he inflated the definition of AGI to ASI and then says we are already 70% there!? That is ludicrous.

I guess this series is coming at just the right time:

Apple orders 10 episodes of a Neuromancer TV series:

https://arstechnica.com/culture/2024/02/william-gibsons-neuromancer-will-become-an-apple-tv-show/


I think there is too much marketing involved in the concept of “AI”, used to sell more of it to the masses. For me it is just a more advanced machine learning algorithm, still far away from true artificial intelligence.

Based on the Google ‘Woke’ version, I don’t see this going anywhere but the trash heap. AI appears to be just a bigger, faster garbage-in, garbage-out processor driven by the particular agenda of its owner.

Researchers at Princeton University have developed an evaluation framework based on nearly 2,300 common software engineering problems assembled from bug reports and feature requests submitted on GitHub to test the performance of various large language models (LLMs).

The researchers gave each language model the problem to be solved and the repository code, then asked it to produce a feasible fix, which was then tested for correctness. But the LLMs generated an effective solution in only 4% of cases.

Their specially trained model, SWE-Llama, could solve only the simplest engineering problems presented on GitHub, while conventional LLMs such as Anthropic’s Claude 2 and OpenAI’s GPT-4 solved only 4.8% and 1.7% of problems, respectively.

The research team concludes: “Software engineering is not simple in practice. Fixing a bug may require navigating a large repository, understanding the interaction between functions in different files or spotting a small error in convoluted code. This goes far beyond code completion tasks.”
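The evaluation procedure described above (give the model an issue and the repository, collect a proposed fix, test it, count successes) can be sketched as a resolve-rate loop. This is a hypothetical outline, not the Princeton team's actual harness: `Task`, `propose_fix` and `tests_pass` are placeholder names, and a real setup would apply each patch to a checkout of the repository and run its test suite.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Task:
    """Hypothetical benchmark item: an issue description and the repository it refers to."""
    issue: str
    repo: str


def resolve_rate(
    tasks: List[Task],
    propose_fix: Callable[[Task], str],
    tests_pass: Callable[[Task, str], bool],
) -> float:
    """Fraction of tasks for which the model's proposed patch passes that task's tests."""
    if not tasks:
        return 0.0
    resolved = sum(1 for task in tasks if tests_pass(task, propose_fix(task)))
    return resolved / len(tasks)


if __name__ == "__main__":
    # Toy stand-ins: 25 tasks, and the stub "tests" accept only the first one.
    toy_tasks = [Task(issue=f"issue {i}", repo="example/repo") for i in range(25)]
    rate = resolve_rate(
        toy_tasks,
        propose_fix=lambda task: "<patch text>",  # placeholder for model output
        tests_pass=lambda task, patch: task.issue == "issue 0",
    )
    print(f"{rate:.0%}")
```

The toy run has one success out of 25 stub tasks, a 4% resolve rate; it illustrates the metric only and reproduces none of the reported results.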

Over the last few days, I’ve been trying out the latest version of Gemini, asking it some simple programming questions. Every time, it gives answers that seem plausible but are wrong. So they want us to believe that by further developing these generative intelligences, we’re going to surpass human beings? What nonsense. These so-called intelligences are above all based on probabilities. They just generate answers that seem convincing, but that’s all. There’s no real intelligence behind it. That said, it can be useful if you need to make summaries, translations or generate images. In the artistic field, it certainly has its uses.

I’m convinced that we’re living in a new artificial intelligence bubble, and that all this will soon deflate.

‘AGI is a machine which is as good or better than a human in every aspect’.

There are a lot of things it won’t be better at within 1 year, like walking/running as smoothly as humans, or driving better, let alone doing surgery, haircutting, and so many daily activities… It will eventually, but more like in 2035, not next year. We will have partial AGI, not complete AGI.

Working in the physical world is much harder than working with symbols and tokens.

The physical world is, well, real. It is also incredibly complex and detailed in its state memory, unforgiving, and gives no second chances in many of its interactions.

A model blundering a small percent of the time on a moving robot could end up killing people or destroying the robot.
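Why a small per-action error rate matters: if each robot action fails independently with probability p (an illustrative assumption; real failures are rarely independent), the chance of at least one failure over n actions is 1 - (1 - p)^n. A minimal sketch:

```python
def prob_any_failure(p_per_action: float, n_actions: int) -> float:
    """Chance that at least one of n independent actions fails, each with failure probability p."""
    return 1.0 - (1.0 - p_per_action) ** n_actions


if __name__ == "__main__":
    # Illustrative numbers only: a 1% per-action blunder rate over a growing number of actions.
    for n in (10, 100, 1000):
        print(n, round(prob_any_failure(0.01, n), 3))
```

With p = 1%, 100 actions already give roughly a 63% chance of at least one blunder, and 1,000 actions make one nearly certain.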

Jonathan: I agree with most of the items on your list, but not driving better. As for AGI, not 2035: at the current rate of change, a minimum of 10x per year, that would be 10,000,000,000 times better than now. That's way too long. It could be in 2024-30, probably 2026.
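The reply's figure is ordinary compounding: ten years of 10x per year is 10^10, the number quoted, while counting eleven years (2024 to 2035) would give 10^11. A minimal sketch, assuming a constant yearly multiplier, which is the commenter's premise rather than a measured rate:

```python
def compound_factor(multiplier_per_year: int, years: int) -> int:
    """Total improvement after applying the same yearly multiplier for a number of years."""
    return multiplier_per_year ** years


if __name__ == "__main__":
    # The reply's premise: at least 10x improvement per year.
    for years in (10, 11):
        print(years, f"{compound_factor(10, years):,}")
```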