The era of Big Data and Big Compute is here. Per OpenAI, compute demand from deep learning has been doubling roughly every three months for the last eight years. Neuromorphic computing with deep neural networks is driving AI growth, but it depends heavily on compact, non-volatile, energy-efficient memories whose differing features suit different situations. These include STT-MRAM, SOT-MRAM, ReRAM, CB-RAM, and PCM.

Training each new generation of AI models requires 10 to 100 times more computing power than the previous generation, which in turn is doubling overall demand about every six months.
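To get a feel for what these doubling rates imply, here is a minimal Python sketch of the arithmetic. The function names are illustrative, and the only inputs are the figures quoted above (a doubling every three or six months, eight years, and 10 to 100-fold jumps per generation).

```python
import math


def growth_factor(years: float, doubling_months: float) -> float:
    """Total multiplicative growth after `years` at a fixed doubling period."""
    doublings = years * 12 / doubling_months
    return 2.0 ** doublings


def months_to_reach(fold_increase: float, doubling_months: float) -> float:
    """Months needed to grow by `fold_increase` at a fixed doubling period."""
    return doubling_months * math.log2(fold_increase)


# Eight years of growth under the two doubling periods quoted in the text.
print(f"3-month doubling over 8 years: {growth_factor(8, 3):.3g}x")
print(f"6-month doubling over 8 years: {growth_factor(8, 6):.3g}x")

# How long a 10x or 100x jump takes if demand doubles every six months.
for fold in (10, 100):
    print(f"{fold}x takes about {months_to_reach(fold, 6):.1f} months")
```

The gap between the two rates is large: 32 doublings versus 16 doublings over the same eight years, so which figure applies matters a great deal for capacity planning.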

Advanced graphics processing unit (GPU) architectures are opening up dramatic new possibilities for designers. The key is choosing the right memory architecture for the task at hand and the right memory to deploy for that architecture.

There is an array of more efficient emerging memories out there for specific tasks. They include compute-in-memory SRAM (CIM), STT-MRAM, SOT-MRAM, ReRAM, CB-RAM, and PCM. While each has different properties, as a collective unit they serve to enhance compute power while raising energy efficiency and reducing cost. These are key factors that must be considered to develop economical and sustainable AI SoCs.

Neuromorphic computing relies on new architectures and new memory technologies that are more efficient than current processing architectures. It requires compute-in-memory and near-memory computing, as well as expertise in memory yield, test, reliability, and implementation.

Neuromorphic computing-based machine learning uses Spiking Neural Networks (SNN), Deep Neural Networks (DNN), and Restricted Boltzmann Machines (RBM). Combined with Big Data, “Big Compute” uses statistically based High-Dimensional Computing (HDC), which operates on patterns and supports reasoning built on associative memory and continuous learning, mimicking how human memory learns and retains sequences.
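The idea of operating on patterns with an associative memory can be illustrated with a small Python sketch. This is a toy under stated assumptions, not any particular HDC system: the dimensionality, the labels, and the helper names are all made up for illustration. Each item is stored as a random +1/-1 hypervector, and a corrupted query is matched to the stored pattern it most resembles.

```python
import random

DIM = 10_000  # hypervector dimensionality (assumed value for this sketch)


def random_hypervector(rng):
    """Random bipolar (+1/-1) hypervector."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]


def similarity(a, b):
    """Normalized dot product, between -1 and 1."""
    return sum(x * y for x, y in zip(a, b)) / DIM


def add_noise(vector, flip_count, rng):
    """Return a copy with `flip_count` randomly chosen components sign-flipped."""
    noisy = list(vector)
    for i in rng.sample(range(DIM), flip_count):
        noisy[i] = -noisy[i]
    return noisy


def recall(memory, query):
    """Associative lookup: the label whose stored pattern best matches the query."""
    return max(memory, key=lambda label: similarity(memory[label], query))


rng = random.Random(0)  # fixed seed so the sketch is repeatable
memory = {label: random_hypervector(rng) for label in ("cat", "dog", "bird")}

# Corrupt 30% of the components of one stored pattern, then look it up.
query = add_noise(memory["dog"], 3000, rng)
print(recall(memory, query))
```

Because unrelated random hypervectors are nearly orthogonal at this dimensionality, the noisy query still sits far closer to its original than to anything else in memory, which is the property that makes pattern-based recall robust.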

Emerging memories include compute-in-memory SRAM (CIM), STT-MRAM, SOT-MRAM, ReRAM, CB-RAM, and PCM. Each is being developed to enable a transformation in computation for AI. Together, they are advancing the scale of computational capabilities, energy efficiency, density, and cost.

Designers balance the requirements of their designs as they determine which of the nine major challenges are most critical at a given time:

1. Throughput as a function of energy (peta-ops per watt)

2. Modularity and scalability for design reuse

3. Thermal management to lower costs, complexity, and size

4. Speed to support real-time, AI-based decision making

5. Reliability, especially for applications where human life is at stake

6. Processing compatibility with CMOS for the components constituting a system. As an example, STT-MRAM can be easily integrated with a CMOS-based processor.

7. Power delivery

8. Cost, best expressed in the “sweet spot” node for a function, together with integration (packaging) cost

9. Exhibiting analog behavior mimicking human neurons
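The first item in this list, throughput as a function of energy, reduces to simple arithmetic. A minimal Python sketch follows; the accelerator figures used in it are hypothetical numbers chosen only to exercise the formula, not measurements of any real chip.

```python
PETA = 1e15  # operations per peta-op


def peta_ops_per_watt(ops_per_second: float, power_watts: float) -> float:
    """Throughput per unit power, in peta-ops per second per watt."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return ops_per_second / PETA / power_watts


def energy_per_op_joules(ops_per_second: float, power_watts: float) -> float:
    """Average energy spent on one operation, in joules."""
    if ops_per_second <= 0:
        raise ValueError("ops_per_second must be positive")
    return power_watts / ops_per_second


# Hypothetical accelerator: 2 peta-ops per second at 400 W.
ops, watts = 2e15, 400.0
print(f"{peta_ops_per_watt(ops, watts):.4f} peta-ops per watt")
print(f"{energy_per_op_joules(ops, watts) * 1e12:.2f} pJ per operation")
```

The two numbers are reciprocals of one another up to a unit change, so a design can be compared either by throughput per watt or by energy per operation.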

Each of these memory challenges can be addressed in multiple ways, as there is usually more than one alternative for the same objective. Each alternative has pros and cons, including scalability implications for later architectural decisions. For example, designers must choose between SRAM and a ReRAM array for compute-in-memory. The power and scalability implications of these two options sit at opposite extremes. SRAM is the right choice when the memory block is relatively small, the required speed of execution is high, and integrating the in-memory compute within an SoC is the most logical option (although SRAM is costly in area and in power consumption, both dynamic and leakage). On the other hand, the highly parallelized matrix multiplication typical of deep neural networks requires a huge amount of memory, which makes the argument for ReRAM because of its density advantages.

1. SRAM and ReRAM: A choice between two extremes for compute-in-memory

For efficient compute-in-memory chips, designers must opt for either SRAM or ReRAM, which are opposites in terms of power and scalability. SRAM is recommended for small memory blocks and high-speed requirements, but it comes with drawbacks: substantial area and higher power consumption. For projects with significant memory requirements, ReRAM offers an advantage owing to its density. ReRAM also offers the additional benefit of analog behavior when needed. To be clear, most (if not all) emerging memories can be deployed for compute-in-memory, but SRAM is the best choice for performance and ReRAM is the best choice for density and energy efficiency.
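Why a dense resistive array suits matrix multiplication can be seen in an idealized Python sketch of a crossbar. The assumptions are deliberate simplifications: each cell is a pure conductance, input voltages drive the rows, and each column wire sums its cell currents (Ohm's law plus Kirchhoff's current law). Wire resistance, sneak paths, and device non-linearity are ignored, and the function name and values are illustrative only.

```python
def crossbar_currents(conductances, voltages):
    """Idealized crossbar readout.

    conductances[i][j] is the cell conductance (siemens) at row i, column j.
    voltages[i] is the voltage (volts) applied to row i.
    Returns the current (amperes) collected on each column:
    I_j = sum over i of V_i * G_ij.
    """
    if len(conductances) != len(voltages):
        raise ValueError("one voltage is required per crossbar row")
    columns = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(columns)
    ]


# A 2x2 array: the stored conductances play the role of the weight matrix.
weights = [
    [1e-6, 2e-6],
    [3e-6, 4e-6],
]
inputs = [0.2, 0.1]  # row voltages encode the input vector

for j, current in enumerate(crossbar_currents(weights, inputs)):
    print(f"column {j}: {current * 1e6:.2f} uA")
```

Every multiply-accumulate in the product happens in the array at once, and the weights never leave the memory, which is where the density and energy arguments for ReRAM compute-in-memory come from.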

2. MRAM: Low-power revolution

MRAM, specifically STT-MRAM and SOT-MRAM, is a non-volatile, ultra-low-power memory option fully compatible with CMOS processing. Where MRAM was traditionally slow and difficult to scale, STT-MRAM changed the game by switching cells with a spin-polarized current that exerts a “spin-transfer torque.” STT-MRAM offers fast write times as low as 1 ns and is widely used in IoT applications. SOT-MRAM is a variation that relies on “spin-orbit torque” and allows for even faster read and write times with identical functionality (though that comes at the price of a larger area).

Shared features include low leakage, scalability, high retention time, and high durability, as well as ease of integration with CMOS.

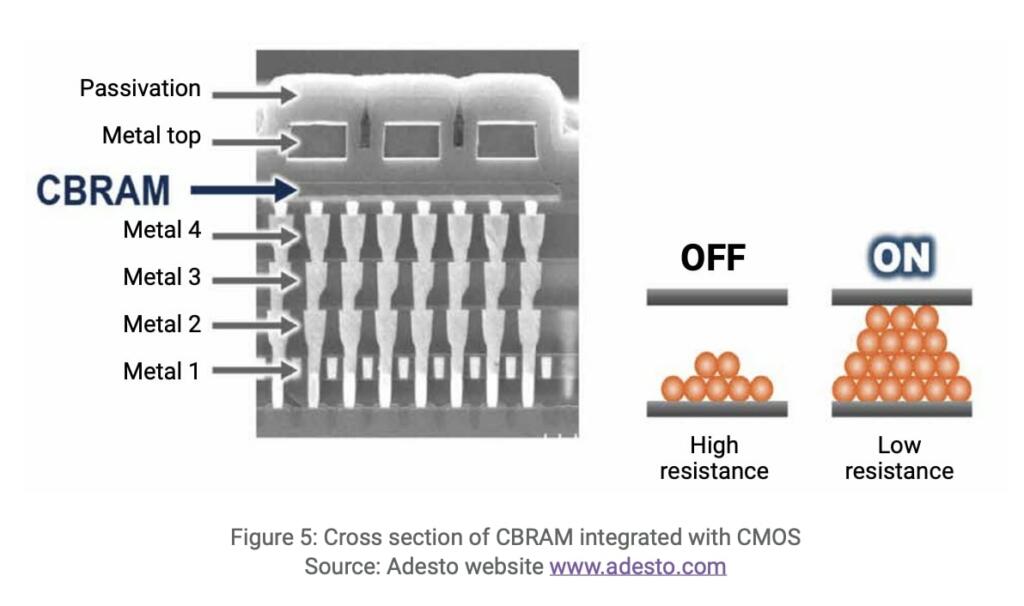

3. PCM, ReRAM, CB-RAM: Cost-effective storage and connectivity

These memory types are all resistance-based, although strictly speaking only PCM is a phase-change memory. They are non-volatile, with two distinct states of low and high resistance between the two electrodes forming the memory. PCM switches between states by changing the phase of its material, while ReRAM and CB-RAM typically switch based on the direction of the applied current. Non-volatile memories such as PCM, ReRAM, and CB-RAM are ideal for storing analog data such as video, audio, and images, negating the need for expensive digital-to-analog converters. A further benefit is in training neural networks, as the resistance state of a cell can represent the connectivity of a synapse. All are easily integrated with CMOS and in 3D stacking. ReRAM cross-bar arrays are best suited to in-memory computing, and CB-RAM is well suited both to in-memory computing and to implementing neural networks.

While no single memory type is the silver bullet for all AI chips, each has its advantages in terms of the space it takes up, capacity, retention, cost, ability to stack, endurance, and more. Each memory challenge can be addressed in multiple ways, with more than one suitable alternative that can be considered to meet the same objectives. Designers need to weigh both pros and cons for each alternative, including further scalability implications for architectural decisions.

Neuromorphic Computing vs. Traditional SRAM

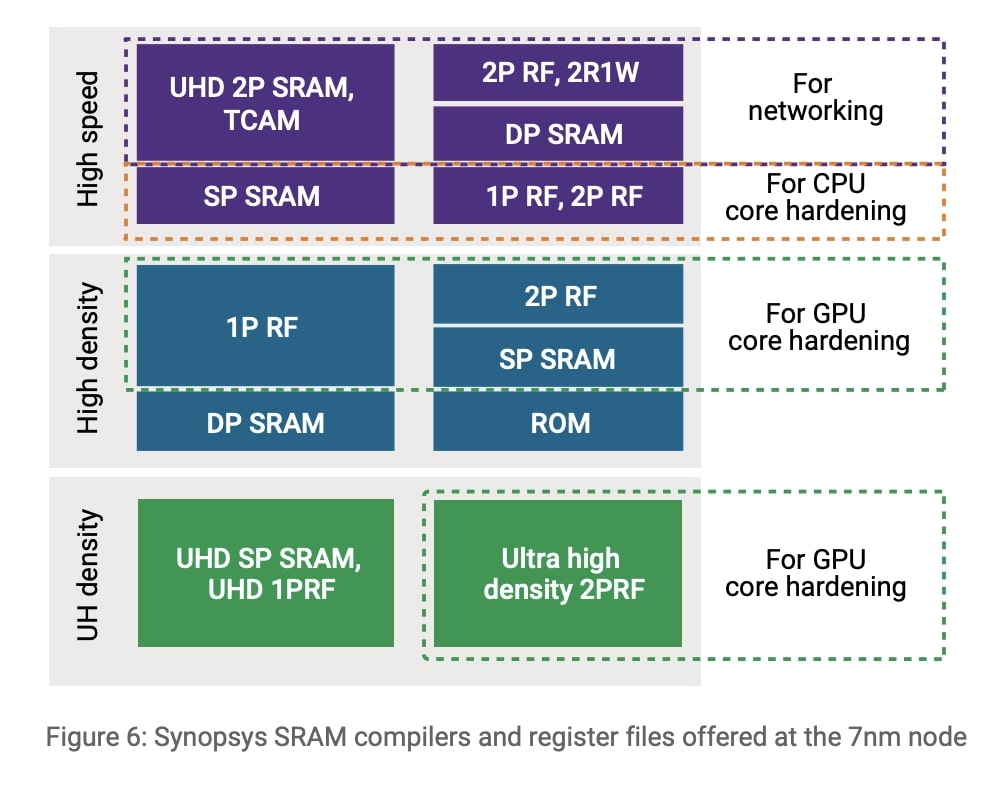

The emerging non-volatile memories discussed above represent the foundation of neuromorphic computing and lend themselves to non-Von Neumann architectures. While they offer exciting potential, classical SRAM memories remain important.

With unequaled latency, SRAM is still the backbone of AI and machine learning (ML) architectures for neuromorphic computing. Multi-port memories enable parallelism to accommodate CIM and near-memory computing. Synopsys supports CIM research and sees near-memory computing as the most energy-efficient, versatile form of computing. We offer a family of multi-port SRAMs that are energy efficient and can operate at ultra-low voltages.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked the #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

Something not addressed here is that ReRAM covers a wide range of possible device configurations of resistive (memristive) memory.

One of these is a hybrid multi-gate device called a memtransistor, which has such a high gate density per device that it could act as a single neuron, with the gates acting as synapses.

These would be an ideal foundation from which to build an ultra dense artificial brain.

OK, I’m trying to be polite here, but I’m having a problem w/ so-called “big data.” Just because one has enough “data” does not mean people know what they’re talking about. If only they would make something of this stuff, but of course, it’s never THAT SIMPLE. Oh how I wish the world was gentle, and kind, and so very decent. But you know what? So very often, it ain’t.

But each of us has the nature to create the future every day we live today. We create the future by what we do today. I BELIEVE in what every person can do. We need to give every person the ability to imagine things that they can’t yet do.

And with our help, would you not say that would be so God damn wonderful? Works for me, us boys and girls…