The best large language models that you can currently install on your own computer are GPT4ALL and the Cerebras open-source models.

There are several videos that guide you through the installation of the models.

Some of the open-source AI models have all of the code in one place and others require you to put the pieces (model, code, weight data) together.

gpt4all: an ecosystem of open-source chatbots trained on a massive collection of clean assistant data including code, stories and dialogue.

Fine-tuning the models requires a high-end GPU or FPGA.

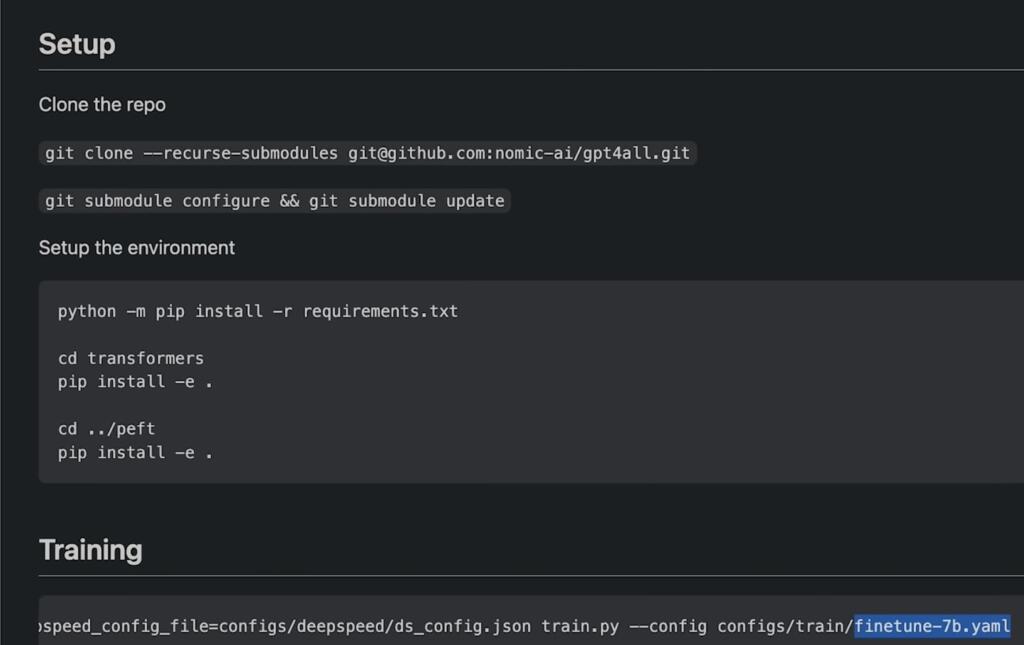

Nomic AI is furthering the open-source LLM mission and created GPT4ALL. GPT4ALL is trained using the same technique as Alpaca: it is an assistant-style large language model based on LLaMA and trained on ~800k GPT-3.5-Turbo generations. It works better than Alpaca and is fast. It is like having ChatGPT 3.5 on your local computer. Nomic AI includes the weights in addition to the quantized model.
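To see why the quantized model matters for running on a personal computer, here is a rough back-of-the-envelope estimate of how much memory the weights alone need. It is only a sketch: the function name is made up for illustration, the model sizes are the LLaMA sizes discussed in the comments, and real quantized files are somewhat larger because they also store scaling factors, and inference needs extra memory for context.

```python
def weight_memory_gb(n_params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights only, in GB (1 GB = 1e9 bytes)."""
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare 16-bit weights with a 4-bit quantized version.
    for size in (7, 13, 30):
        fp16 = weight_memory_gb(size, 16)
        q4 = weight_memory_gb(size, 4)
        print(f"{size}B parameters: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

By this estimate a 7B model drops from about 14 GB to about 3.5 GB of weights when quantized to 4 bits, which is the difference between not fitting and fitting in an ordinary laptop's memory.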

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

Consumer AI missions seem to be a task for the cloud unless you are planning something nefarious, proprietary, or patentable. No matter how much you upgrade a personal computer, the cloud has a thousand to a million times more horsepower. So how much would you pay to answer the question, “What foods reverse heart disease?”

Advanced models can be used to train simpler ones and make them better, with fewer parameters.

This is very dramatic with GPT-3/4-trained variants of LLaMA, for example.

Therefore the big guys will end up benefiting the little guys (whether they like it or not), even if they are more limited.
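A minimal sketch of the idea, assuming the Alpaca-style approach where the bigger model's answers become training data for the smaller one. Everything here is illustrative: `fake_teacher` is a hypothetical stand-in for a call to a stronger model, and the `instruction`/`input`/`output` field names follow the Alpaca data format.

```python
import json
from typing import Callable, Iterable


def build_distillation_set(prompts: Iterable[str],
                           teacher: Callable[[str], str]) -> list[dict]:
    """Collect instruction/output pairs generated by a stronger teacher model."""
    records = []
    for prompt in prompts:
        answer = teacher(prompt).strip()
        if not answer:  # skip empty generations
            continue
        records.append({"instruction": prompt, "input": "", "output": answer})
    return records


def to_jsonl(records: list[dict]) -> str:
    """Serialize records one JSON object per line, a common fine-tuning format."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


def fake_teacher(prompt: str) -> str:
    # Hypothetical stand-in for the advanced model; returns nothing for some prompts.
    return "" if "skip" in prompt else f"Answer to: {prompt}"


if __name__ == "__main__":
    data = build_distillation_set(["What is I2P?", "skip me"], fake_teacher)
    print(to_jsonl(data))
```

The smaller model is then fine-tuned on the resulting file; that training step is not shown here.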

It won’t be long before local LLMs can be chain-of-reasoning tool-users, and then all bets are off for local AI capabilities. They can even be hybrid, outsourcing the toughest questions to cloud models as well as using tools directly.
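The hybrid setup could look roughly like the sketch below. It assumes the local model can report some confidence score for its answer, which is an assumption for illustration rather than something existing local models provide out of the box; `toy_local`, `toy_cloud`, and the 0.7 threshold are all made up.

```python
from typing import Callable, Tuple

# Hypothetical interfaces: a local model returns (answer, confidence in [0, 1]);
# a cloud model returns just an answer.
LocalModel = Callable[[str], Tuple[str, float]]
CloudModel = Callable[[str], str]


def hybrid_answer(question: str, local_model: LocalModel, cloud_model: CloudModel,
                  min_confidence: float = 0.7) -> Tuple[str, str]:
    """Answer locally when the local model is confident, otherwise escalate."""
    answer, confidence = local_model(question)
    if confidence >= min_confidence:
        return "local", answer
    return "cloud", cloud_model(question)


def toy_local(question: str) -> Tuple[str, float]:
    # Stand-in heuristic: pretend that short questions are the easy ones.
    confidence = 0.9 if len(question.split()) <= 6 else 0.3
    return f"[local] {question}", confidence


def toy_cloud(question: str) -> str:
    return f"[cloud] {question}"


if __name__ == "__main__":
    print(hybrid_answer("What is I2P?", toy_local, toy_cloud))
    print(hybrid_answer(
        "Explain step by step how quantization affects perplexity in large models",
        toy_local, toy_cloud))
```

Only the questions the local model is unsure about leave the machine, so most traffic stays private and free.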

Yeah, I’m fine with using something online, as long as it’s free. Cloud resources are more powerful than local resources, so it’s better to use a cloud version. Does anyone know of anything as good as GPT4 that’s available online for free?

I particularly respect Google Bard. As far as computing power and linguistic modeling go, it appears as advanced as GPT-4, or very nearly so. It has one distinct advantage: real-time access to the Internet, so its source materials are up-to-date.

The online models are optimized for specific purposes, like being helpful, value-neutral information bots. Trying to get them to serve legitimate functions outside of those purposes is difficult.

If you have a local one, you can fine-tune it for your specific needs. It could, for instance, provide decidedly non-neutral, judgmental commentary on clothing choices to help you decide what to wear on a date. Or you could build it into functions that don’t explicitly appear to be text-based (but are under the hood), controlling video game characters or simple machinery.

If all legitimate uses became available through the cloud with zero lag and zero cost, then yeah, no need to run your own copy.

Runs fine with llama.cpp on a 2-year-old Intel laptop.

But I’ve heard 13B Vicuna is even better as a ChatGPT replacement running locally. Haven’t tried it yet.

Considering where all this is going, huge matrix multiplication extensions will be run-of-the-mill in all processors soon, beyond what AVX and AVX2 do. Intel and AMD would be negligent if they didn’t add them.

Hi Jean,

I sent you an email. I would like to discuss this with you.

Hi Brian,

Any interest in a new “take” re. Hypothyroidism?

If not, please forgive.

If yes, email [email protected].

Sorry to bug you!

G.A.Harry, MD.

“…Runs fine with llama.cpp on a 2 years old Intel laptop…”

Which one? llama-models-7-13-30? I’m assuming 7B? How much RAM do you have?

There’s a copy of llama-models-7-13-30 available as a magnet/torrent file on I2P.

LLaMA neural network models (7b, 13b, 30b).torrent

Description: Meta’s neural network models. The distribution includes the project alpaca.cpp (source code), which is easy to compile to interact with the language model.

magnet:?xt=urn:btih:842503567bfa5988bcfb3a8f0b9a0375ba257f20&tr=http://tracker2.postman.i2p/announce.php

magnet:?xt=urn:btih:842503567bfa5988bcfb3a8f0b9a0375ba257f20&dn=LLaMA+neural+network+models+%287b%2C+13b%2C+30b%29&tr=http://tracker2.postman.i2p/announce.php

You can get this with the torrent downloader BiglyBT, which has a plug-in for I2P. Install BiglyBT, then install the I2P plug-in. In the options you can then turn OFF all public torrent access, so that it only shares and downloads over the Invisible Internet Project (I2P).