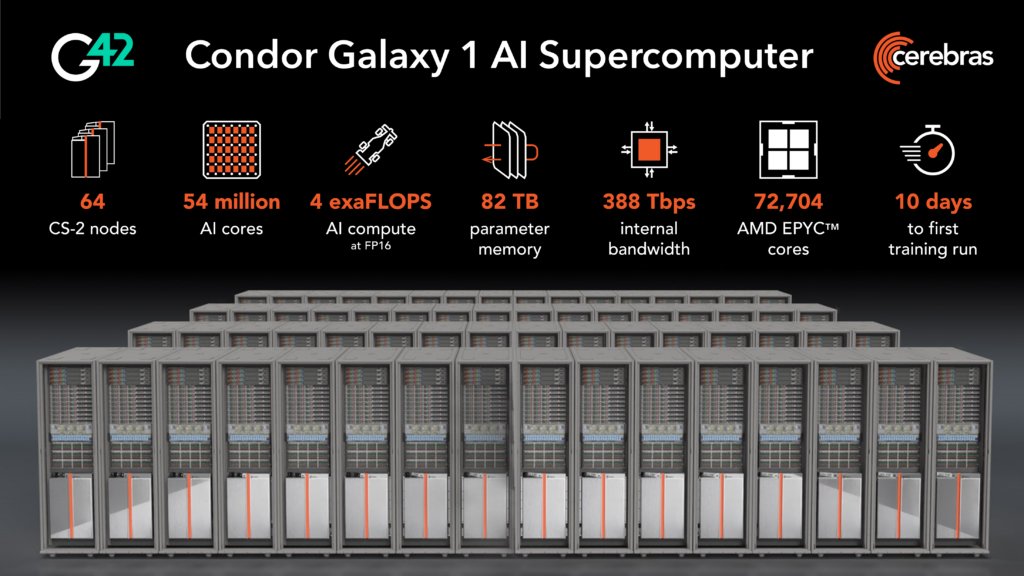

Cerebras Systems and G42, the UAE-based technology holding group, announced Condor Galaxy, a network of nine interconnected supercomputers, offering a new approach to AI compute that promises to significantly reduce AI model training time. The first AI supercomputer on this network, Condor Galaxy 1 (CG-1), has 4 exaFLOPs and 54 million cores. Cerebras and G42 are planning to deploy two more such supercomputers, CG-2 and CG-3, in the U.S. in early 2024. With a planned capacity of 36 exaFLOPs in total, this unprecedented supercomputing network will revolutionize the advancement of AI globally.

CG-1 links 64 Cerebras CS-2 systems together into a single, easy-to-use AI supercomputer, with an AI training capacity of 4 exaFLOPs. Cerebras and G42 offer CG-1 as a cloud service, allowing customers to enjoy the performance of an AI supercomputer without having to manage or distribute models over physical systems.

Cerebras CS-2 costs several million dollars. The pricing of the CS-2 wafer chip supercomputer is not disclosed. G42 has $800 million in funding. If the CS-2 cost $10 million then 64 of them would cost $640 million. If the CS-2 cost $5 million each then 64 of them would cost $320 million. I would guess that the CG-1 needs $200-400 million of funding.

A 64 core AMD Epyc costs about $8000. 1000 of those would cost $8 million.

CG-1 is the first time Cerebras has partnered not only to build a dedicated AI supercomputer but also to manage and operate it. CG-1 is designed to enable G42 and its cloud customers to train large, ground-breaking models quickly and easily, thereby accelerating innovation. The Cerebras-G42 strategic partnership has already advanced state-of-the-art AI models in Arabic bilingual chat, healthcare and climate studies.

“Delivering 4 exaFLOPs of AI compute at FP 16, CG-1 dramatically reduces AI training timelines while eliminating the pain of distributed compute,” said Andrew Feldman, CEO of Cerebras Systems. “Many cloud companies have announced massive GPU clusters that cost billions of dollars to build, but that are extremely difficult to use. Distributing a single model over thousands of tiny GPUs takes months of time from dozens of people with rare expertise. CG-1 eliminates this challenge. Setting up a generative AI model takes minutes, not months and can be done by a single person. CG-1 is the first of three 4 exaFLOP AI supercomputers to be deployed across the U.S. Over the next year, together with G42, we plan to expand this deployment and stand up a staggering 36 exaFLOPs of efficient, purpose-built AI compute.”

CG-1 is the first of three 4 exaFLOP AI supercomputers (CG-1, CG-2, and CG-3), built and located in the U.S. by Cerebras and G42 in partnership. These three AI supercomputers will be interconnected in a 12 exaFLOP, 162 million core distributed AI supercomputer consisting of 192 Cerebras CS-2s and fed by more than 218,000 high performance AMD EPYC CPU cores. G42 and Cerebras plan to bring online six additional Condor Galaxy supercomputers in 2024, bringing the total compute power to 36 exaFLOPs.

Condor Galaxy 1 (CG-1)

Optimized for Large Language Models and Generative AI, CG-1 delivers 4 exaFLOPs of 16 bit AI compute, with standard support for up to 600 billion parameter models and extendable configurations that support up to 100 trillion parameter models. With 54 million AI-optimized compute cores, 388 terabits per second of fabric bandwidth, and fed by 72,704 AMD EPYC processor cores, unlike any known GPU cluster, CG-1 delivers near-linear performance scaling from 1 to 64 CS-2 systems using simple data parallelism.

“AMD is committed to accelerating AI with cutting edge high-performance computing processors and adaptive computing products as well as through collaborations with innovative companies like Cerebras that share our vision of pervasive AI,” said Forrest Norrod, executive vice president and general manager, Data Center Solutions Business Group, AMD. “Driven by more than 70,000 AMD EPYC processor cores, Cerebras’ Condor Galaxy 1 will make accessible vast computational resources for researchers and enterprises as they push AI forward.”

CG-1 offers native support for training with long sequence lengths, up to 50,000 tokens out of the box, without any special software libraries. Programing CG-1 is done entirely without complex distributed programming languages, meaning even the largest models can be run without weeks or months spent distributing work over thousands of GPUs.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technology and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

Search terms: “sparsity” and “The Hardware Lottery”

The main value of scaling up dense layers is analogous to the main value of decompressing a file to recompress with a better algorithm. Think about a video stream that you convert to voxels representing the static scene through which a camera is moving so that you can then generate a geometric description of the objects therein.

Interesting. Each of these flops are 16 bit, so off we want to compare to Tesla, then we have to multiply by 4. Meaning system will have the capacity of 16 Exa, so their four systems will have an aggregate of 64 Exa. That puts it on par with the Dojo plan. And, the cerebras system does not suffer from any bandwidth problem when training LLMs. It unclear if the Tesla 2024 system will be predominately Dojo 1.0 or 2.0, where the latter can train LLMs efficiently..

Let’s see how this all turns out..!

I will be impressed when we’ll see one of these new multi exaflops systems will finally train 20-100T (brain size) dense AI model.

I want to see if intelligence of the model will increase with scale, and how much.

Many people say and I partially agree that even current cutting edge, largest models are still pretty stupid and don’t really understand anything. This may be true, but to get to human level understanding/reasoning, I think we need to have model with at least 20T, if not 100T parameters. It may be minimum needed to reach AGI, anything bigger than that, may be even ASI.

Some insane capabilities may emerge in such models, like for example ability to invent new things.

Speculation here is, that AGI emerges only post ~20T – 100T params. We’re still at simple animals brain size level with our largest 500B-2T params models (GPT4).

Intelligence emerges from network size and complexity.