The limitations for Tesla AI development are programming resources, AI architecture, compute resources, training data and financial resources.

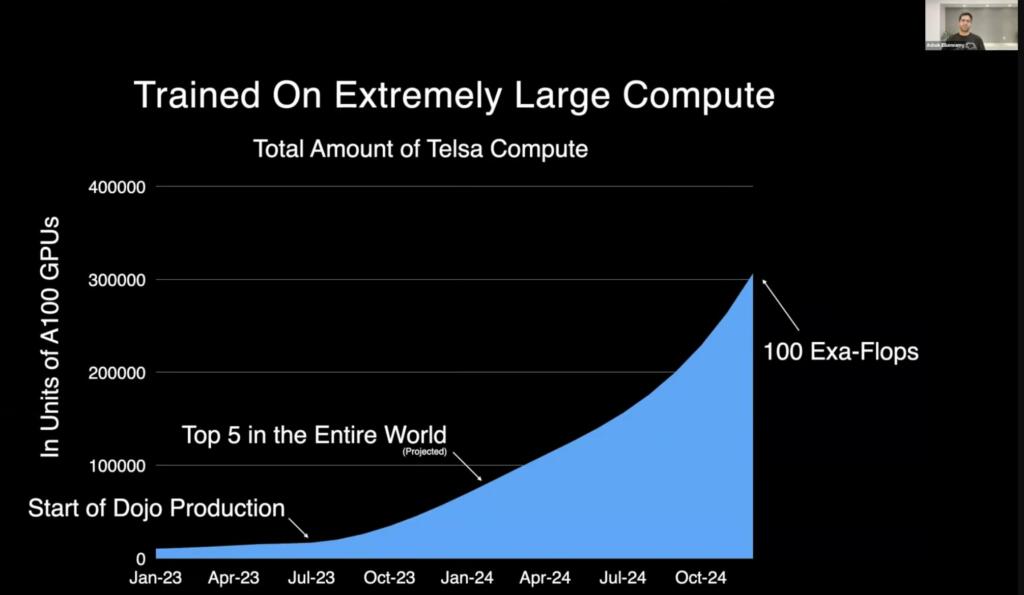

Tesla has started operating its Dojo AI training supercomputer, and it will scale to over 100 exaflops by the end of 2024. Tesla will likely continue to expand its AI supercomputing datacenters and cloud. If Tesla keeps expanding its AI computing resources, it would head toward a zettaflop (1,000 exaflops) within a year or two after hitting 100 exaflops in 2024.
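Going from 100 exaflops to a zettaflop is a 10x jump, so the timeline above implies a specific yearly growth rate. The sketch below computes that implied annual multiplier for one-year and two-year horizons; the inputs are the article's projections, and the function name `implied_annual_growth` is just an illustrative helper.

```python
# Implied yearly growth to go from the projected 100 exaflops (end of 2024)
# to a zettaflop (1,000 exaflops) in one or two more years.
# Inputs are the article's projections, not measured figures.

EXAFLOPS_2024 = 100.0            # projected Dojo capacity by end of 2024
ZETTAFLOP_IN_EXAFLOPS = 1000.0   # 1 zettaflop = 1,000 exaflops


def implied_annual_growth(start: float, target: float, years: float) -> float:
    """Annual multiplier needed to grow from `start` to `target` in `years`."""
    return (target / start) ** (1.0 / years)


for years in (1, 2):
    factor = implied_annual_growth(EXAFLOPS_2024, ZETTAFLOP_IN_EXAFLOPS, years)
    print(f"{years} year(s): {factor:.2f}x per year")
```

Reaching a zettaflop in one year means another 10x; spreading it over two years still requires roughly 3.2x growth per year.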

Over a year ago, the Tesla Dojo ExaPOD system was planned to have 120 tiles in ten cabinets and over 1 exaflop of compute. If the design were not improved, reaching 1,000 exaflops would take only 10,000 cabinets (1,000 ExaPODs).

- 354 computing cores per D1 chip
- 25 D1 chips per Training Tile (8,850 cores)
- 6 Training Tiles per System Tray (53,100 cores, along with host interface hardware)
- 2 System Trays per Cabinet (106,200 cores, 300 D1 chips)
- 10 Cabinets per ExaPOD (1,062,000 cores, 3,000 D1 chips)
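The per-level counts in the Dojo hierarchy multiply out as shown in this small sketch. The constants come straight from the spec list; the function `dojo_totals` is a hypothetical helper that checks the cumulative core and D1 chip totals at each level.

```python
# Multiply out the Dojo hierarchy (per-level counts from the spec list).
CORES_PER_D1 = 354
D1_PER_TILE = 25
TILES_PER_TRAY = 6
TRAYS_PER_CABINET = 2
CABINETS_PER_EXAPOD = 10


def dojo_totals() -> dict[str, tuple[int, int]]:
    """Return (cores, D1 chips) at each level of the Dojo hierarchy."""
    chips = {"D1 chip": 1}
    chips["Training Tile"] = D1_PER_TILE
    chips["System Tray"] = chips["Training Tile"] * TILES_PER_TRAY
    chips["Cabinet"] = chips["System Tray"] * TRAYS_PER_CABINET
    chips["ExaPOD"] = chips["Cabinet"] * CABINETS_PER_EXAPOD
    # Every core lives on a D1 chip, so cores = chips * cores per chip.
    return {level: (n * CORES_PER_D1, n) for level, n in chips.items()}


for level, (cores, n_chips) in dojo_totals().items():
    print(f"{level:>13}: {cores:>9,} cores, {n_chips:>5,} D1 chips")
```

The totals match the figures in the list: 8,850 cores per tile, 53,100 per tray, 106,200 per cabinet and 1,062,000 cores on 3,000 D1 chips per ExaPOD.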

Each tray consists of six training tiles. Each 135 kg tray offers 54 petaflops (BF16/CFP8) and requires 100+ kW of power. An ExaPOD (20 trays) would need about 2 MW of power, so 100 exaflops would need about 200 MW.
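The tray figures also let us estimate how many ExaPODs, cabinets and megawatts a given compute target needs. This is a minimal sketch assuming 20 trays per ExaPOD (2 per cabinet, 10 cabinets), 54 petaflops per tray, and exactly 100 kW per tray; since the article says "100kW+", the power numbers are a lower bound. The helper names are illustrative.

```python
import math

TRAY_PETAFLOPS = 54.0       # BF16/CFP8 per tray, from the article
TRAY_POWER_KW = 100.0       # "100kW+" per tray, treated as a lower bound
TRAYS_PER_EXAPOD = 20       # 2 trays per cabinet x 10 cabinets
CABINETS_PER_EXAPOD = 10


def exapod_stats() -> tuple[float, float]:
    """Return (exaflops, megawatts) for one ExaPOD."""
    exaflops = TRAY_PETAFLOPS * TRAYS_PER_EXAPOD / 1000.0   # petaflops -> exaflops
    megawatts = TRAY_POWER_KW * TRAYS_PER_EXAPOD / 1000.0   # kW -> MW
    return exaflops, megawatts


def requirements(target_exaflops: float) -> tuple[int, int, float]:
    """Return (ExaPODs, cabinets, minimum MW) needed to reach target_exaflops."""
    pod_exaflops, pod_megawatts = exapod_stats()
    pods = math.ceil(target_exaflops / pod_exaflops)  # whole ExaPODs only
    return pods, pods * CABINETS_PER_EXAPOD, pods * pod_megawatts


for target in (100, 1000):
    pods, cabinets, mw = requirements(target)
    print(f"{target} EF: {pods} ExaPODs, {cabinets} cabinets, >= {mw:.0f} MW")
```

With 1.08 exaflops per ExaPOD, 100 exaflops takes 93 ExaPODs (about 186 MW) and 1,000 exaflops takes 926 ExaPODs (9,260 cabinets). The article's 200 MW and 10,000-cabinet figures come from rounding each ExaPOD down to 1 exaflop.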

The Tesla AI team gave technical talks that strongly indicate FSD is close and that strong progress is being made on Teslabot. With a more solid software foundation, more programmers, more data and more compute, the rate of improvement should enable 10X-100X gains in various aspects of AI development.

The Tesla physical world foundational model figured out three dimensions on its own. OpenAI achieved its surprising ChatGPT breakthrough mainly by massively expanding compute resources and training data. If Tesla has a foundational world model that parallels the large language models, then expanding its compute resources by 100-1000 times could provide the AI breakthroughs Tesla needs for exceptional FSD (full self-driving) and Teslabot capabilities.

Tesla is hiring many developers and has an annual research budget of billions of dollars.

Tesla has 4.5 million cars gathering data. Tesla will have over 10 million cars gathering data by 2025, 20 million by the end of 2026 and over 100 million by 2030. If Tesla starts mass-producing the Teslabot, it could make 50 Teslabots for the cost of each Model Y.
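The fleet projections imply a particular yearly growth rate between milestones. This sketch assumes the "by 2025", "by the end of 2026" and "by 2030" figures are year-end counts; the current 4.5 million figure is left out because its date is not stated. `MILESTONES` and `implied_cagr` are hypothetical names for illustration.

```python
# Hypothetical sketch: implied compound annual growth between fleet milestones.
# Assumption: each milestone is a year-end fleet count (cars gathering data).
MILESTONES = [(2025, 10e6), (2026, 20e6), (2030, 100e6)]


def implied_cagr(milestones):
    """Return (start_year, end_year, annual growth rate) for consecutive milestones."""
    rates = []
    for (y0, n0), (y1, n1) in zip(milestones, milestones[1:]):
        rate = (n1 / n0) ** (1.0 / (y1 - y0)) - 1.0
        rates.append((y0, y1, rate))
    return rates


for start, end, rate in implied_cagr(MILESTONES):
    print(f"{start}->{end}: {rate:.1%} per year")
```

Doubling from 10 to 20 million is 100% growth in one year, while going from 20 to 100 million over four years needs about 50% growth per year.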

Tesla is building a general foundational AI that unifies the FSD and Teslabot AI neural networks.

Brian Wang is a Futurist Thought Leader and a popular science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends, including space, robotics, artificial intelligence, medicine, anti-aging biotechnology, and nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

The question is whether those 100 exaflops would be Dojo 2.0 or Dojo 1.X.

Elon has stated that the current Dojo architecture is limited in what it is suited to calculate, but that those limitations will be removed with Dojo 2.0. Think about X.AI… If its LLM needs more standard calculations than Dojo 1.0 can handle, then the success of X.AI would hinge on getting to Dojo 2.0.

Note that these 100 exaflops at 8-bit precision are 64 times less potent than standard 64-bit calculations, at least for scientific computing. This means the Dojo computer would be equivalent to about 1.5 exaflops of scientific computing.
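The 64x factor is the commenter's figure. One rough way to arrive at it is to assume that the cost of a multiply grows with the square of operand width, so moving from 8 to 64 bits costs (64/8)^2 = 64 times more. That scaling rule is an assumption for illustration, not a Dojo specification, and the helper names are hypothetical.

```python
# Commenter's back-of-envelope conversion from low-precision to FP64-equivalent compute.
LOW_PRECISION_EXAFLOPS = 100.0   # projected Dojo figure at 8-bit precision
LOW_BITS, HIGH_BITS = 8, 64


def precision_penalty(low_bits: int, high_bits: int) -> float:
    """Assumed rule: cost per operation scales with the square of operand width."""
    return (high_bits / low_bits) ** 2


def fp64_equivalent_exaflops(exaflops: float, penalty: float) -> float:
    """Divide low-precision throughput by the assumed per-operation penalty."""
    return exaflops / penalty


penalty = precision_penalty(LOW_BITS, HIGH_BITS)
print(fp64_equivalent_exaflops(LOW_PRECISION_EXAFLOPS, penalty))
```

This prints 1.5625, the "about 1.5 exaflops" in the comment. Real FP64 throughput depends on each chip's design, so treat the result as a rough estimate.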

Tesla FSD means you need a human with insurance behind the wheel; a hundred times better than that is still a human with insurance.

Tesla FSD costs $15,000, and when you trade it in the next month, it is valued at $800.

There is also a feedback loop between the various arguments and paths by which Tesla/Musk generate vast amounts of new capital (for example, the numbers for FSD transitioning to a Tesla Network robotaxi) and this new area of technology investment.

Even with Tesla building out Gigafactories (GFs) and scaling other plans such as Starship and Starlink, there would have been more capital than needed. The prospect of Tesla/Musk pouring tens of billions into expanding AI cloud computing absorbs that excess. Any spare AI compute at a given time would likely find an eager market, so nothing stands in the way of scaling it as fast as possible.