John Gibb and Dr Scott Walker talk about what the OpenAI Q Star is. The classic A* algorithm maybe the basis for Artificially Intelligence Super Agents. They discuss potentially game changing Q* algorithm that OpenAI might have tweaked and made into a first Artificially Intelligent Agent.

A* (pronounced “A-star”) is a graph traversal and path search algorithm, which is used in many fields of computer science due to its completeness, optimality, and optimal efficiency. One major practical drawback is its space complexity, as it stores all generated nodes in memory. Thus, in practical travel-routing systems, it is generally outperformed by algorithms that can pre-process the graph to attain better performance, as well as memory-bounded approaches; however, A* is still the best solution in many cases.

It can be seen as an extension of Dijkstra’s algorithm. A* achieves better performance by using heuristics to guide its search.

Compared to Dijkstra’s algorithm, the A* algorithm only finds the shortest path from a specified source to a specified goal, and not the shortest-path tree from a specified source to all possible goals. This is a necessary trade-off for using a specific-goal-directed heuristic. For Dijkstra’s algorithm, since the entire shortest-path tree is generated, every node is a goal, and there can be no specific-goal-directed heuristic.

A* Search Without Expansions: Learning Heuristic Functions with Deep Q-Networks (2023)

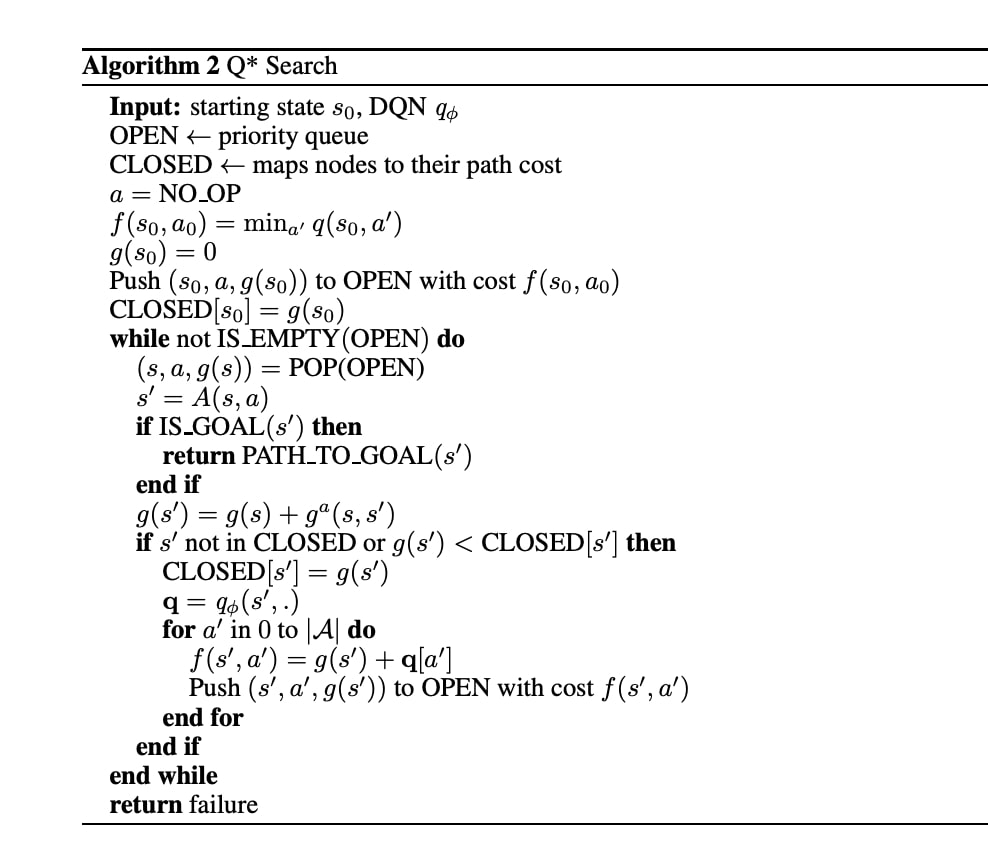

Efficiently solving problems with large action spaces using A* search has been of importance to the artificial intelligence community for decades. This is because the computation and memory requirements of A* search grow linearly with the size of the action space. This burden becomes even more apparent when A* search uses a heuristic function learned by computationally expensive function approximators, such as deep neural networks. To address this problem, we introduce Q* search, a search algorithm that uses deep Q-networks to guide search in order to take advantage of the fact that the sum of the transition costs and heuristic values of the children of a node can be computed with a single forward pass through a deep Q-network without explicitly generating those children. This significantly reduces computation time and requires only one node to be generated per iteration. We use Q* search to solve the Rubik’s cube when formulated with a large action space that includes 1872 meta-actions and find that this 157-fold increase in the size of the action space incurs less than a 4-fold increase in computation time and less than a 3-fold increase in number of nodes generated when performing Q* search. Furthermore, Q* search is up to 129 times faster and generates up to 1288 times fewer nodes than A* search. Finally, although obtaining admissible heuristic functions from deep neural networks is an ongoing area of research, we prove that Q* search is guaranteed to find a shortest path given a heuristic function that neither overestimates the cost of a shortest path nor underestimates the transition cost.

Conclusion

Efficiently solving search problems with large action spaces has been of importance to the artificial intelligence community for decades. Q* search uses a DQN to eliminate the majority of the computational and memory burden associated with large action spaces by generating only one node per iteration and requiring only one application of the heuristic function per iteration. When compared to A* search, Q* search is up to 129 times faster and generates up to 1288 times fewer nodes. When increasing the size of the action space by 157 times, Q* search only takes 3.7 times as long and generates only 2.3 times more nodes. The ability that Q* has to efficiently scale up to large action spaces could play a significant role in finding solutions to many important problems with large.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technology and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

“Compared to Dijkstra’s algorithm, the A* algorithm only finds the shortest path from a specified source to a specified goal, and not the shortest-path tree from a specified source to all possible goals”

A* can be used to find multiple goals. For example you can use the heuristic estimate to the nearest destination. There are many papers that augment A* to find multiple destinations.

“the sum of the transition costs and heuristic values of the children of a node can be computed with a single forward pass through a deep Q-network”

It sounds like they are doing a preprocess of their network using Landmarks. Please read

“Computing the Shortest Path: A* Search Meets Graph Theory” by the great Andrew Goldberg. (it has nice pictures to make it clear how to speed A* by a factor of up to 100).

“Finally, although obtaining admissible heuristic functions from deep neural networks is an ongoing area of research”

That’s quite an understatement. It’s never going to happen. A deep neural network is going to find a function that is pretty good, on average, at guessing the best path cost. But you can’t guarantee that it will never overestimate a best path cost.

“we prove that Q* search is guaranteed to find a shortest path given a heuristic function that neither overestimates the cost of a shortest path nor underestimates the transition cost.”

It’s trivial to prove you’ll get the shortest path if your function is admissible. But no deep neural network will ever be admissible. So it’s not a very interesting thing to prove.

It sounds like Q* is simply A* with Q learning as the heuristic. In that case, it doesn’t sound much different from the way existing systems work, when they add a little bit of tree search on top of reinforcement learning with deep neural networks. If that’s true, then Q* isn’t a magic key to AGI. It’s just an obvious next step to do, and should give some incremental improvements.