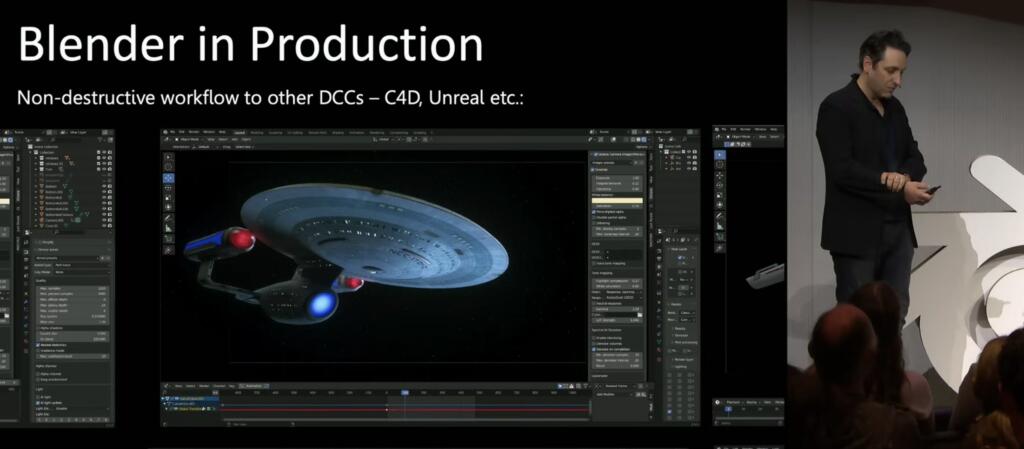

Jules Urbach, founder and CEO of OTOY, presented a deep dive on next-generation rendering, showcasing how Blender, Octane and the Render Network were used for final-frame VFX production in 2022 and 2023.

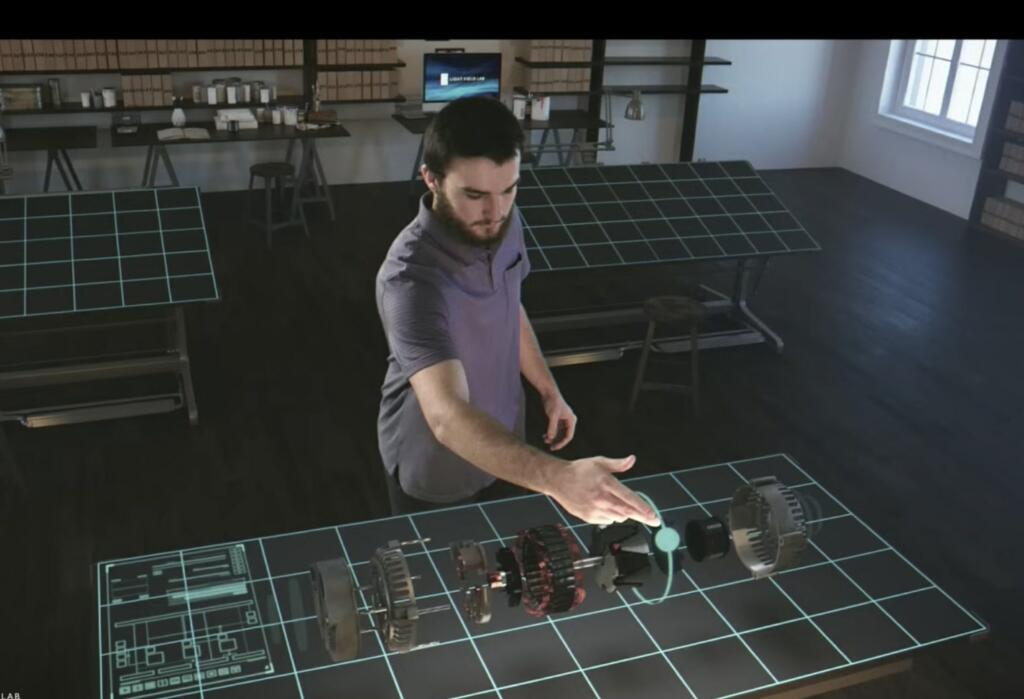

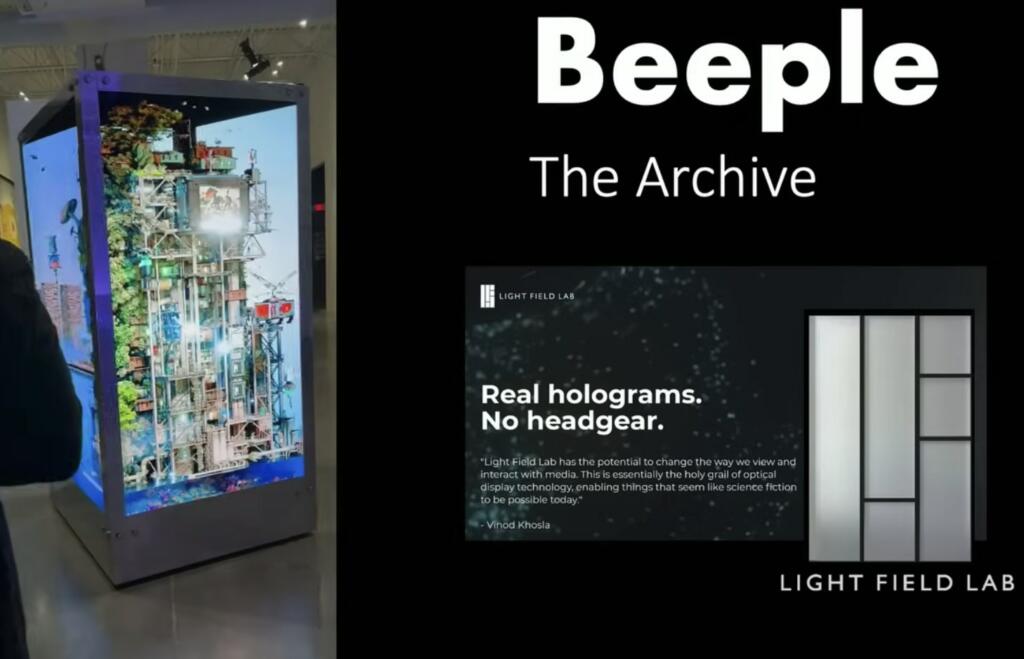

The talk also highlighted how Light Field, NeRF and AI rendering features have advanced the state of the art in virtual production and real-time workflows for emerging platforms such as Apple's Vision Pro and Light Field Lab's solid light holographic displays.

Jules talked about the latest developments in decentralized GPU computing and provenance on the Render Network, and about open-source initiatives for cross-DCC rendering such as ITMF, MSF, MaterialX and OpenUSD.

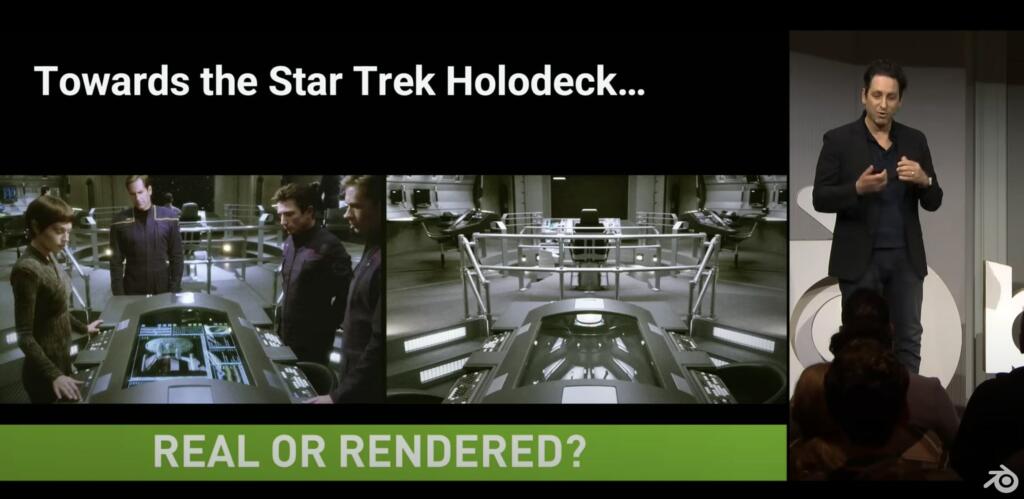

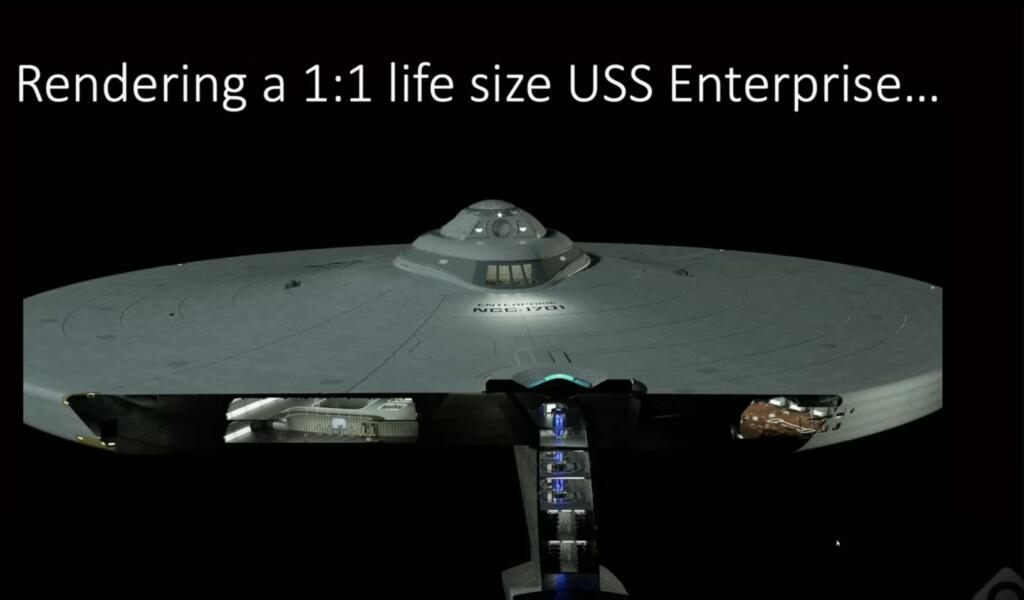

He showcased the Roddenberry Archive immersive experience, a multi-decade initiative to preserve Gene Roddenberry's lifetime of work, including the full history of Star Trek. The talk presented the work done in Blender to create life-sized 1:1 models of the Starship Enterprise and reconstructions of the Enterprise bridge sets that viewers can walk through as fully immersive experiences, with narration by William Shatner and other Star Trek luminaries.

“Towards the Star Trek Holodeck: The Future of Rendering” by Jules Urbach.

Brian Wang is a Futurist Thought Leader and a popular science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends, including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting-edge technologies, he is currently a co-founder of a startup and a fundraiser for high-potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and a guest on numerous radio shows and podcasts. He is open to public speaking and advising engagements.

Achieving real-world Star Trek holodecks is a tantalizing prospect that blends cutting-edge technology with the boundless imagination of science fiction. While we’re not quite at the point of conjuring fully immersive, interactive environments with a mere vocal command, significant strides have been made in virtual reality (VR) and augmented reality (AR) technologies.

Heh. As they alluded to in the Star Trek series “Lower Decks,” the janitorial staff is likely to encounter some biological hazards when cleaning holodecks.

It took about half an hour to render each of my images shown here: https://bit.ly/RAInvestor, using Indigo on a complex 780MB SketchUp model (its size before compression in the latest version). My Apple iMac was state-of-the-art in 2016 but is less so now. Still, rendering even a still image probably isn't much faster on newer hardware, since pixel counts have risen since then and there is more data to process.

I can’t even imagine what it would take to render my related SU fly-through 3-minute video here: https://bit.ly/Riverarch.
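For a rough sense of scale, here is a back-of-the-envelope estimate. It assumes, hypothetically, a 30 fps frame rate (the video's actual frame rate isn't stated) and the same half hour per frame as the stills above, on a single machine:

$$
180\ \text{s} \times 30\ \tfrac{\text{frames}}{\text{s}} = 5400\ \text{frames}, \qquad 5400 \times 0.5\ \text{h} = 2700\ \text{h} \approx 112.5\ \text{days}
$$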

Given the expense and the extra work involved beyond the rendering engine itself, I think this will first be used in commercial movies on some upgraded IMAX-type screen. Maybe people will start going back to theaters if they can see truly immersive 3D movies, at least until the technology trickles down to living-room TVs, but I think that is at least a decade away, probably longer. The average person just doesn't have that kind of money.

We'll never get the Star Trek Holodeck like this, however. That's actually impossible with just two senses, sight and sound. Even if you ignore taste (at least until you get hungry) or smell (at least until you want to walk through a forest, or even a dense city, ugh), the absence of touch will confine any such experience to VR forever. And the lack of sync with the vestibular (balance) and kinesthetic (body position and movement) senses already makes many people physically ill when they wear headsets, and may continue to do so even with holograms that are truly immersive rather than just projected in front of them.

The deficiencies of the Star Trek sets become more apparent in close-ups like these of control panels and similar details. Set designers will have to up their game if we're going to have these kinds of renders, so real-world work isn't going away; it's going to be more necessary than ever.

Still, this is wonderful work and exciting for the future.

So, how soon ’till we get the FTL starships? 🙂

https://www.youtube.com/watch?v=D-CSQY41F04

[ Has anyone thought about extending computer interaction beyond keyboard and mouse/touchpad with haptic sensing-and-feedback gloves (e.g. 'https://haptx.com/')? ]

[ A Transcutaneous Electrical Nerve Stimulator (TENS) would be another approach, and printed circuits on textiles that contract along their length might be another route to a simpler, lighter version.

‘Abstract

This paper outlines the development of e-textile haptic feedback gloves for virtual and augmented reality (VR/AR) applications. The prototype e-textile glove contains six Inertial Measurement Unit (IMU) flexible circuits embroidered on the fabric and seven screen-printed electrodes connected to a miniaturised flexible-circuit-based Transcutaneous Electrical Nerve Stimulator (TENS). The IMUs allow motion tracking feedback to the PC, while the electrodes and TENS provide electro-tactile feedback to the wearer in response to events in a linked virtual environment. The screen-printed electrode tracks result in haptic feedback gloves that are much thinner and more flexible than current commercial devices, providing additional dexterity and comfort to the user. In addition, all electronics are either printed or embroidered onto the fabric, allowing for greater compatibility with standard textile industry processes, making them simpler and cheaper to produce.’

Another study, on 'liquid crystal elastomers', summarizes:

‘ Various soft actuators have been widely developed in past decades, including pneumatic actuators, hydraulic actuators, ion-exchange polymer metal composite materials (IPMCs), dielectric elastomer actuators (DEAs), hydrogels, liquid crystal elastomers (LCEs), and shape memory alloys (SMAs)’ ]