Machines with human-level intelligence should be able to do most economically valuable work. This aligns a major economic incentive with the scientific grand challenge of building a human-like mind. Here Sanctuary AI's CEO Geordie Rose and CTO Suzanne Gildert describe their approach to building and testing such a system. Their approach comprises a physical humanoid robotic system; a software-based control system for robots of this type; a performance metric, which they call g+, designed to be a measure of human-like intelligence in humanoid robots; and an evolutionary algorithm for incrementally increasing scores on this performance metric. They introduce each of these components, describe its current status, and report current and historical measurements of the g+ metric on the systems described.

What problems would the world present to a humanoid robot faced with the problems humans might be inclined to relegate to sufficiently intelligent robots of this kind?

Most of the tasks that humans perform for pay could be automated… Machines exhibiting true human-level intelligence should be able to do many of the things humans are able to do. Among these activities are the tasks or “jobs” at which people are employed. We can replace the Turing test by something called the “employment test.” To pass the employment test, AI programs must be able to perform the jobs ordinarily performed by humans. Progress toward human-level AI could then be measured by the fraction of these jobs that can be acceptably performed by machines.

The Employment Test aligns a major economic incentive (automating labor is by definition the largest market possible) with the scientific grand challenge of understanding the human mind.

An Employment Test for Robots

In 2019 Sanctuary AI began developing an approach to measuring the capability of a robot to do work derived from the O*NET (Occupational Information Network) system (Gildert, 2019). O*NET is a source of information about work and workers developed and maintained by the U.S. Department of Labor/Employment and Training Administration (USDOL/ETA) (ONET, 2023).

This approach is predicated on the notion that economic activity forms a hierarchy of goals that overlaps significantly with the set of all human goals. In their framing of the problem of building a human-like mind, they make the non-trivial assumption that a machine that can do anything we value enough to pay for must possess a mind with many, perhaps all, of the properties of our own minds. For example, Marcus provides a threshold for a system to be considered AGI, which would easily be passed by a system that could do all work (Marcus, 2022).

Currently there are 19,265 identified tasks (ONET, 2023b). Each task in O*NET is labeled by an integer, ranging from 1 (“Resolve customer complaints regarding sales and service”) to 23,955 (“Dismount, mount, and repair or replace tires”). Some integers in this range are not associated with tasks.

Occupations typically require the performance of 20-30 different tasks in order to do everything the job requires. Selecting for example the Retail Salespersons occupation, we obtain 24 tasks (see Table 1) (ONET, 2023c). O*NET contains similar breakdowns for every occupation tracked.

In the O*NET taxonomy, there are 33 skill types, 52 abilities, and 35 categories of knowledge; all of these are scored on a scale from 0 (none) to 7 (near the top of human performance). Lists of O*NET skills, abilities, and knowledge are included in the O*NET Skills, O*NET Abilities, and O*NET Knowledge tabs.

The g+ Score

A work fingerprint is a 120-dimensional vector of integers, each in the range 0 to 7, with one entry for each of the 33 skills, 52 abilities, and 35 knowledge categories (33 + 52 + 35 = 120). While this is the fundamental vector they track, they additionally define a scalar that is useful for tracking progress over time. They call this scalar g+ (pronounced g plus).

To compute g+, note that subtask fingerprints exist for all occupations in O*NET. It is therefore straightforward to compute the sum over all 120 dimensions for each occupation (giving a number between 0 and 120*7 = 840) and then take the average over all 1,015 occupations. Doing so results in a mean value of 267.3.

They then define g+ to be the sum of all 120 scores in either a work or subtask fingerprint multiplied by 100/267.3. With this normalization, a score of 100 equals the average value across all occupations. g+ ranges from 0 to 314.3 (that is, 840 × 100/267.3). The standard deviation of minimum g+ scores required for occupations is 15.4 (see O*NET Occupations Ranked by g+ in (Data, 2023)). This makes the distribution of g+ required to perform occupations very similar to IQ, which has a defined mean of 100 and standard deviation of 15.
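The normalization described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic in the text, not Sanctuary AI's code: the function name `g_plus` and the constant names are hypothetical, and the example fingerprints are made-up placeholders rather than O*NET data.

```python
# Minimal sketch of the g+ arithmetic described in the text.
# All names here are hypothetical; only the numbers come from the article.

SKILLS, ABILITIES, KNOWLEDGE = 33, 52, 35
DIMENSIONS = SKILLS + ABILITIES + KNOWLEDGE  # 120 fingerprint entries
MAX_LEVEL = 7                                # each entry is scored 0..7
MEAN_OCCUPATION_SUM = 267.3                  # mean fingerprint sum over 1,015 occupations


def g_plus(fingerprint):
    """Return the g+ score for a 120-dimensional fingerprint of integers 0..7."""
    if len(fingerprint) != DIMENSIONS:
        raise ValueError(f"expected {DIMENSIONS} entries, got {len(fingerprint)}")
    if any(not (0 <= level <= MAX_LEVEL) for level in fingerprint):
        raise ValueError("every entry must be in the range 0 to 7")
    # Sum all entries, then rescale so the average occupation scores 100.
    return sum(fingerprint) * 100.0 / MEAN_OCCUPATION_SUM


if __name__ == "__main__":
    # Placeholder fingerprints, not real O*NET data.
    print(round(g_plus([0] * DIMENSIONS), 1))          # lowest possible score
    print(round(g_plus([MAX_LEVEL] * DIMENSIONS), 1))  # highest possible score
```

The highest possible score reproduces the upper bound quoted in the text, since 840 × 100/267.3 rounds to 314.3.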

The subtask g+ scores for Producers and Directors; Poets, Lyricists and Creative Writers; Cooks, Restaurant; Computer Programmers; Mathematicians; and Models, are 102.0, 84.5, 91.3, 91.7, 100.9, and 44.7 respectively. The Model g+ score of 44.7 is the lowest across all O*NET occupations. This means that a person or humanoid robot with a g+ score of less than 44.7 is not capable of performing any full occupation, regardless of distribution of strengths and weaknesses.

A person who successfully performs all five of the Producers and Directors; Poets, Lyricists and Creative Writers; Cooks, Restaurant; Computer Programmers; and Mathematicians subtasks would have a g+ score of 143.9.
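The text does not say how the five fingerprints are combined to reach 143.9. One reading that fits the numbers is an element-wise maximum: the combined score (143.9) is higher than the largest single score (102.0) but far below the sum of the five (470.4). The sketch below illustrates that assumed rule on made-up fingerprints; `combine_fingerprints` and `g_plus` are hypothetical names, and nothing here uses real O*NET values.

```python
# Sketch of combining fingerprints by element-wise maximum.
# ASSUMPTION: the combination rule is not stated in the article; this is
# one plausible reading, shown on placeholder data.

MEAN_OCCUPATION_SUM = 267.3  # normalization constant quoted in the article


def g_plus(fingerprint):
    """g+ score: sum of all entries scaled so the average occupation is 100."""
    return sum(fingerprint) * 100.0 / MEAN_OCCUPATION_SUM


def combine_fingerprints(*fingerprints):
    """Take, for each dimension, the highest level demanded by any fingerprint."""
    lengths = {len(fp) for fp in fingerprints}
    if len(lengths) != 1:
        raise ValueError("all fingerprints must have the same length")
    return [max(levels) for levels in zip(*fingerprints)]


if __name__ == "__main__":
    # Two toy 120-dimensional fingerprints with complementary strengths.
    job_a = [4] * 60 + [1] * 60
    job_b = [1] * 60 + [3] * 60
    both = combine_fingerprints(job_a, job_b)
    for name, fp in (("job_a", job_a), ("job_b", job_b), ("both", both)):
        print(name, round(g_plus(fp), 1))
```

Under this rule the combined score is never lower than the highest individual score and never higher than the sum of the individual scores, which matches the pattern in the reported figures.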

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

I tried to comment and was cut off. I hate when that happens. So, I’ll try again and say less this time (perhaps that will help). Two issues I see. One is our current electronics, which are not adaptive or self-improving. This requires physical morphological change in structural materials. Changing the SW ain’t good enough. To speak to this article: so many think that having enough data, specifically if you can connect the dots, is “intelligence”. IMO, just having a lot of “data” is not “intelligence”. Information is what we do w/the data, and how we adapt when we DON’T have any data.

Or we have so much data, we don’t know what’s really important. That’s when one needs a judgement call. The ability to make sense of what we don’t know. Intelligence IMO is associated with self awareness. “This is me, and that’s you, and that’s the rest of the universe.” To me, “smart” is knowing you are, and (this is SO IMPORTANT), so are many others. It’s called having an ethical center, which is a very related but much more complex and important subject. I don’t think our current technology comes close to “being” this.

Can/will a robot be allowed to be a licensed barber?

What will dentists/doctors do to legally FORCE owners to NOT use their bots to provide medical services?

Should everything a bot sees/hears be recorded and saved for future “police/security” reasons (hysterical mothers screaming “think of the children”)?

If a bot commits a crime, who is legally responsible?

Only when you trust a barber, who might kill you if there’s a power failure… Ooops…

You ask many appropriate questions. I’ll take them one by one. I wouldn’t have a problem w/a robot barber, as long as controls were in place so if I did something “unanticipated”, I wouldn’t get my throat cut. (Of course straight razors w/out guards are so rarely used today, good thing.) I wouldn’t be as worried about robots as I would about drunk barbers w/a straight razor.

As for medical people trying to force people not to use bots for medical services? Just try to do that and see how far that goes. If bots provide better service, you’re priced out of the action. Deal w/it. As for “recording” what people do, this is more problematic. For any machine (or living being) to “learn from its past experiences” it must remember previous events. So how does it “remember” how to make a cup of coffee if it can’t do this?

Perhaps our privacy is not based on what machines can learn, but is based on our ability to limit what we know about how and why they learn things. I want my bot to know I hate decaf coffee. Do I want the police to know something so silly and unimportant? Honestly, I don’t. Why? I’m not sure, w/something so stupid. I just value my privacy.

If a bot commits a crime, and is a threat it must be taken out. Who is legally responsible? I honestly don’t know.

Adoption timeframes are definitely impactful.

There are probably >100M high income laborers in the rich world. Even a wildly successful AI robots industry making 10M per year would take a decade to displace all labor.

Getting from today’s market of zero to a highly disruptive 10M/yr scale is roughly as difficult as creating a global scale automaker while also solving the AI functionality.

I just want to participate in fractional ownership of these robots… own say, 5% of the revenue stream generated by 20 robots and you’ve got perhaps the equivalent to what a retiree gets on Social Security.

Another important point, when people say “workplaces are designed for humans” that is a justification for the humanoid form but it is only slightly true. Workplaces are designed for the people who design workplaces and have no idea how they will be used which is why workers do acrobatics to get heavy cases into and out of storage spaces. I can’t speak for big centralized warehouses you see with lots of robots but the closer you get to the individual shop level for small and medium businesses the less human friendly the workspaces are so if you are relying on robots to do well because they are built like humans you are in for a surprise.

What kind of computing resources would be needed at the robot end to enable this AGI or do they need a fast internet connection and some super cluster? Either way might the energy usage just for computation be a limitation?

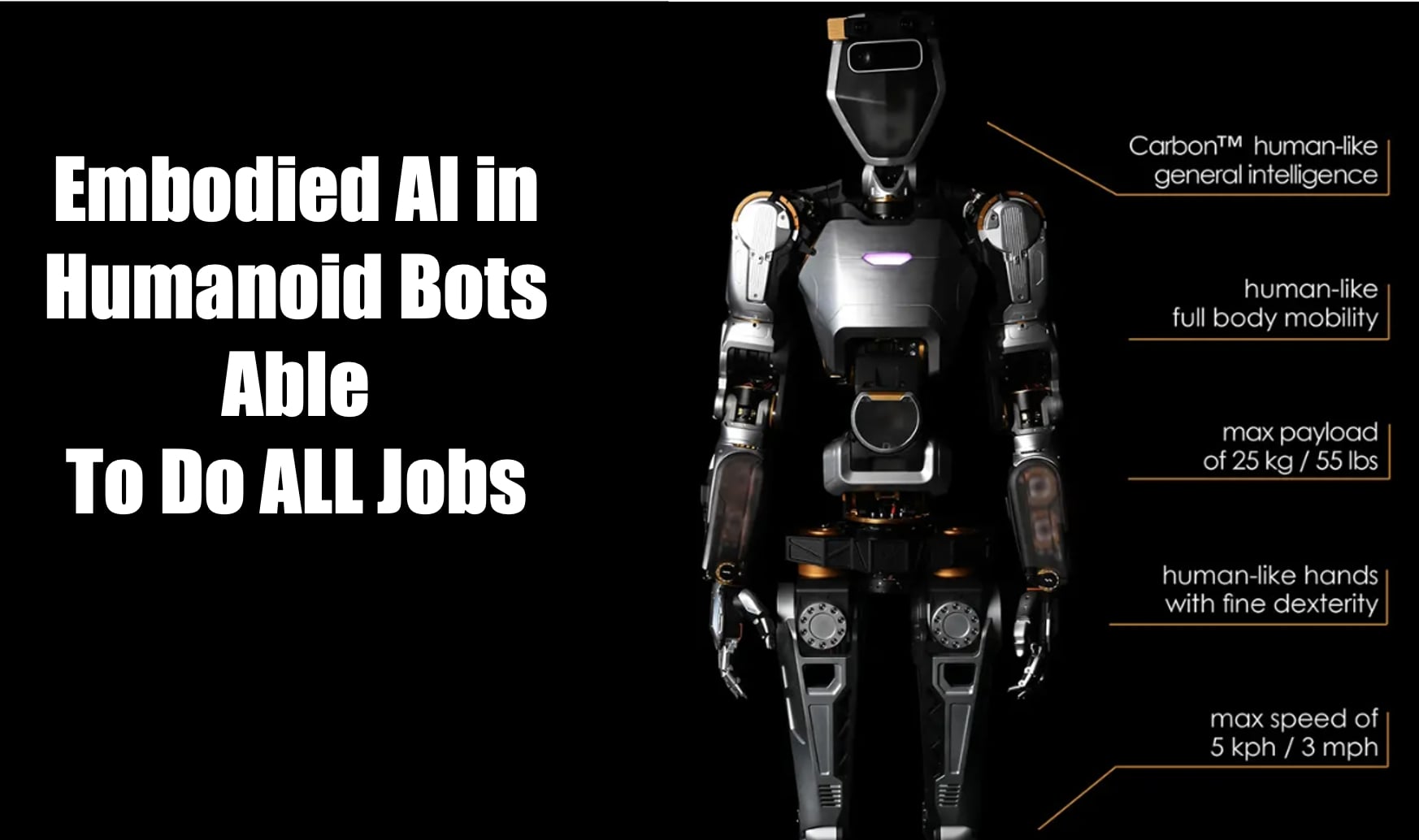

Make those specs OBLIGATORY to all mobile robots.

If all robots’ maximum speed is like C3PO walking, you can outrun them. And if their max payload is 25kg (although the definition of payload is loose) you may outmuscle them if needed.

Nice as the image is, why is it labeled as having “human like general intelligence”? That’s what is commonly known as “a lie”. 😆

I will start to take claims of “able to do all/most jobs” more seriously when they start to be able to do some/any economically valuable jobs in a real world, competitive setting. When people start using them without being part of a project to demonstrate how useful they might be—that sort of thing.

No one is yet lining up to have a machine stand in front of their Keurig machine, waiting for someone to put a coffee cup under it and hand them a pod so they can load it in the machine and then wait for the human to remove the cup when it’s done. No one is lining up to have robots sort coloured blocks into coloured trays. Even the latest video of Atlas moving shocks is designed to look like work when it is just moving the parts from a container to an identical container. That is theatre, not work. Digit looks like it is doing work but it is in a fenced off area and is doing something that could be done by a robotic arm and a robot cart without the humanoid or—more cheaply and quickly—by a human. Both optimists and pessimists are talking as if bots have already demonstrated competitiveness in the labour market and that they can already do “everything” when they have yet to do anything.

I don’t doubt they will start to do something eventually but we have no idea how much, how soon or how universally.

If you were born 150 years earlier, you would say the same of planes: they can’t fly far, not fast, very dangerous, can fly one or 2 people at once, and so on… Technology and science don’t develop in a day or even a year. It takes time, a lot of time.

That’s my point. People are talking about millions of humanoids in the workplace in the next year or two. If they were ready to go today (which they definitely are not) the funding approval alone would take a couple years at least. Then the implementation planning. Then the process of redesigning companies and computer systems… the people who will be replaced by humanoid robots likely haven’t been born yet or are still learning to walk themselves. The reports that will be justifying the conversions will be written on paper that is still growing as trees in forests.

If people had been proposing a similar timeline for aviation 150 years ago I’d have been right to be skeptical because it completely didn’t work out like that.

I think it’ll move faster than you think. Compute for AI is doubling every 3-6 months. AGI is currently viewed as likely to happen around 2027. I’m sure a Teslabot 3 years out will be very refined. Now install a brain in that bot that is (overall) smarter than any human, and yes, they will take your job. Scaling will of course be a challenge, but they will be far easier to build than a car. Plus they will be used to build themselves as well. By 2030, I’d bet the run rate to be around 50 million a year, I’m talking globally.

People born 150yrs ago, like people living today, have the same problem: technology that is “so new” that no one can recognize it, let alone understand the words to describe it. Actually, technology and science change in a moment. Those “AH HA” moments, when we discover or recognize new technology, can and do happen in a moment. Honestly, so many new discoveries, including new tech, are NOT based on plodding goals w/in known pre-conditioned objectives.

Perhaps you’re looking at the public accepting new ideas and technologies. IMO, people accept new technologies far, far faster than they accept new ideas. New tech is fun. New ideas are scary.