arXiv – The development of artificial intelligence systems is shifting from static, task-specific models toward dynamic, agent-based systems that perform well across a wide range of applications.

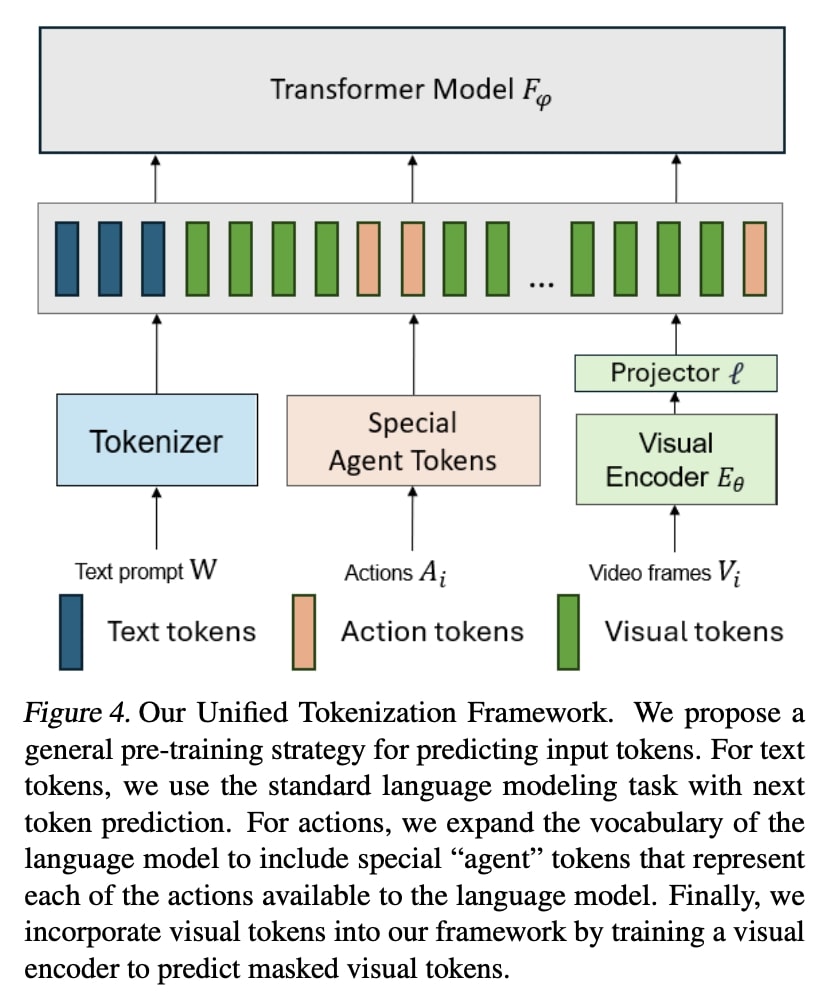

Microsoft researchers propose an Interactive Agent Foundation Model that uses a novel multi-task agent training paradigm to train AI agents across a wide range of domains, datasets, and tasks. The training paradigm unifies diverse pre-training strategies, including visual masked auto-encoders, language modeling, and next-action prediction, yielding a versatile and adaptable AI framework. They demonstrate the framework in three separate domains: robotics, gaming AI, and healthcare. In each area, the model generates meaningful and contextually relevant outputs. The researchers argue that the strength of their approach lies in its generality: it draws on a variety of data sources, such as robotics sequences, gameplay data, large-scale video datasets, and textual information, for effective multimodal and multi-task learning. The approach offers a promising avenue for developing generalist, action-taking, multimodal systems.
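This post does not spell out how the three pre-training objectives are combined. The sketch below shows one common way to unify them in a single training signal, assuming the masked auto-encoding, language-modeling, and next-action losses are simply added with optional weights. The function names, the equal default weights, and the toy data are all hypothetical, and pure Python is used only to keep the example dependency-free; it is not the paper's implementation.

```python
import math
from typing import Sequence

Matrix = Sequence[Sequence[float]]


def cross_entropy(logits: Matrix, targets: Sequence[int]) -> float:
    """Mean cross-entropy; logits[i] scores every vocabulary item, targets[i] is the true index."""
    total = 0.0
    for row, t in zip(logits, targets):
        m = max(row)  # subtract the max before exponentiating, for numerical stability
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[t]  # equals -log softmax(row)[t]
    return total / len(targets)


def masked_reconstruction_loss(pred: Matrix, target: Matrix, mask: Sequence[bool]) -> float:
    """MAE-style objective: mean squared error computed only over the masked patches."""
    errors = [
        sum((p - q) ** 2 for p, q in zip(pp, tt)) / len(pp)
        for pp, tt, masked in zip(pred, target, mask)
        if masked
    ]
    return sum(errors) / len(errors)


def multitask_loss(mae_args, lm_args, action_args, weights=(1.0, 1.0, 1.0)) -> float:
    """Weighted sum of the three objectives; equal weights are an assumption, not the paper's setting."""
    w_mae, w_lm, w_act = weights
    return (
        w_mae * masked_reconstruction_loss(*mae_args)
        + w_lm * cross_entropy(*lm_args)
        + w_act * cross_entropy(*action_args)
    )


# Toy example: 4 image patches (2 values each), 2 text tokens, 1 action step.
patches = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
recon = [[0.1, 1.0], [2.0, 3.0], [4.0, 5.2], [6.0, 7.0]]
mask = [True, False, True, False]                   # patches 0 and 2 were hidden from the encoder
lm = ([[2.0, 0.5, 0.1], [0.2, 1.5, 0.3]], [0, 1])   # next-token logits and targets
act = ([[0.0, 3.0, 0.0, 0.0]], [1])                 # next-action logits and target
total = multitask_loss((recon, patches, mask), lm, act)
print(round(total, 4))
```

Treating next-action prediction as another cross-entropy term is what lets one model learn from video, text, and action data at once; how the real model weights or schedules these terms would need to be checked against the paper.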

They define the embodied agent paradigm as “any intelligent agent capable of autonomously taking suitable and seamless action based on sensory input, whether in the physical world or in a virtual or mixed-reality environment representing the physical world”.

Importantly, an embodied agent is conceptualized as a member of a collaborative system: it communicates with humans through its vision-language capabilities and draws on a vast set of actions based on the humans' needs. In this way, embodied agents are expected to reduce the burden of cumbersome tasks in virtual reality and the physical world.

They believe such a system of embodied agents requires at least three key components:

1. Perception that is multi-sensory with fine granularity. As with humans, multi-sensory perception is crucial for agents to understand their environment, such as a gaming environment, and to accomplish various tasks. In particular, visual perception lets agents parse the visual world (e.g., images, videos, gameplay).

2. Planning for navigation and manipulation. Planning is important for long-range tasks, such as navigating a robotics environment and carrying out sophisticated tasks. At the same time, planning should be grounded in good perception and interaction abilities so that plans can actually be realized in an environment.

3. Interaction with humans and environments. Many tasks require multiple rounds of interaction between the AI and humans or the environment. Enabling fluent interaction between them would make AI more effective and efficient at completing tasks.
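The three components in the list above can be pictured as one loop: perceive, plan, act, and let the environment respond. The sketch below is a toy illustration under stated assumptions, not the paper's architecture: `EmbodiedAgent`, `toy_perceive`, `toy_plan`, `run_episode`, and the text-based "sensor" format are hypothetical names invented here, and the environment is a one-dimensional track on which the agent must reach a goal.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Observation:
    frame: bytes  # stand-in for a visual input; a real agent would fuse it with the text
    text: str     # stand-in for a sensor report or a human instruction


@dataclass
class EmbodiedAgent:
    perceive: Callable[[Observation], Dict[str, int]]
    plan: Callable[[Dict[str, int]], List[str]]
    history: List[str] = field(default_factory=list)

    def step(self, obs: Observation) -> str:
        state = self.perceive(obs)                  # 1. perception
        actions = self.plan(state)                  # 2. planning grounded in the perceived state
        action = actions[0] if actions else "noop"  # execute only the first planned step
        self.history.append(action)                 # keep a record across rounds
        return action


def toy_perceive(obs: Observation) -> Dict[str, int]:
    # Hypothetical sensor format: "pos=2 goal=5" on a 1-D track; the frame is ignored here.
    pairs = (item.split("=") for item in obs.text.split())
    return {key: int(value) for key, value in pairs}


def toy_plan(state: Dict[str, int]) -> List[str]:
    # Plan the whole route; the agent re-plans every round from fresh perception.
    delta = state["goal"] - state["pos"]
    return ["right" if delta > 0 else "left"] * abs(delta)


def run_episode(agent: EmbodiedAgent, pos: int, goal: int, max_rounds: int = 10) -> int:
    # 3. interaction: each round, the environment reacts to the agent's action.
    for _ in range(max_rounds):
        action = agent.step(Observation(frame=b"", text=f"pos={pos} goal={goal}"))
        if action == "noop":
            break
        pos += 1 if action == "right" else -1
    return pos


agent = EmbodiedAgent(perceive=toy_perceive, plan=toy_plan)
final_pos = run_episode(agent, pos=2, goal=5)
print(final_pos, agent.history)  # 5 ['right', 'right', 'right', 'noop']
```

Re-planning from fresh perception every round, rather than executing a stored plan blindly, is one simple way to keep planning grounded in what the agent currently observes.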

This paper presents the initial steps toward making interactive agents possible through an Interactive Agent Foundation Model. The authors do not foresee negative societal consequences from presenting and open-sourcing the current work. In particular, the main output of their model is domain-specific actions, such as button inputs for gaming data, which makes its downstream applications different from those of standard LLMs and VLMs.
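One way a language-model-style network can emit domain-specific actions such as button inputs is to reserve extra token ids for them. The sketch below assumes that approach purely for illustration; the post does not describe the model's actual action format, and `BUTTONS`, `BASE_VOCAB_SIZE`, `encode_actions`, and `decode_actions` are hypothetical.

```python
from typing import FrozenSet, List

# Hypothetical controller layout; a real game would define its own button set.
BUTTONS = ["A", "B", "X", "Y", "LB", "RB", "UP", "DOWN", "LEFT", "RIGHT"]
BASE_VOCAB_SIZE = 32000  # assumed size of the text vocabulary that action tokens extend
TOKEN_OF = {button: BASE_VOCAB_SIZE + i for i, button in enumerate(BUTTONS)}
BUTTON_OF = {token: button for button, token in TOKEN_OF.items()}


def encode_actions(pressed: FrozenSet[str]) -> List[int]:
    """Map the buttons pressed in one frame to sorted action-token ids."""
    return sorted(TOKEN_OF[button] for button in pressed)


def decode_actions(tokens: List[int]) -> FrozenSet[str]:
    """Recover the pressed buttons, skipping ordinary text tokens outside the action range."""
    return frozenset(BUTTON_OF[t] for t in tokens if t in BUTTON_OF)


frame_tokens = encode_actions(frozenset({"A", "RIGHT"}))
print(frame_tokens)  # [32000, 32009]
print(sorted(decode_actions([32009, 17, 32000])))  # ['A', 'RIGHT']
```

Because action tokens live outside the text range, the same decoder can interleave words and button presses, which is why such a model's outputs differ from those of a text-only LLM.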

In the domain of robotics, Microsoft wishes to emphasize that this model should not be deployed on real robots without more training and additional safety filters.

In the domain of gaming, downstream applications of the foundation model may have some societal consequences. Smarter, more realistic AI characters could lead to more immersive worlds, which can increase players’ enjoyment in games, but may also lead to social withdrawal if not used appropriately. Specifically, more realistic AI characters could potentially lead to video game addiction and players anthropomorphising artificial players. They encourage game developers who build AI agents using these models to mitigate these potential harms by encouraging social interactions between human players and applying appropriate content filters to AI agents.

In the domain of healthcare, they emphasize that the models are not official medical devices and have not gone through rigorous testing in live settings. They strongly discourage using the models for self-prescription. Even as the models improve in future iterations, they strongly encourage keeping a medical practitioner in the loop to ensure that unsafe actions are avoided. As the models continue to develop, they believe that they will be useful to caretakers, especially by automatically forming drafts of documentation and notifying caretakers when patients may need urgent attention.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends, including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting-edge technologies, he is currently a Co-Founder of a startup and a fundraiser for high-potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker, and a guest in numerous radio and podcast interviews. He is open to public speaking and advising engagements.

Interactive AI agents may be effective when dealing with cause and effect, but how effective are they at dealing with effects without knowing the cause? You have to ask the right questions to get the right answers. Just because a computer is very fast does not mean it's creative. Being creative is critical to solving problems you never anticipated. I don't know enough about AI to know if it can do this; hey, I'm being honest. But I have my doubts.

“Interactive AI Agents that Makes Decisions and Takes Actions”

Grammar, Brian! They have grammar checkers now. How in the world did that not get flagged?

Anyway, autonomy, initiative, and embodiment: these are three things that are really dangerous to combine in the same AI.

[VLMs: ‘Vision-Language Models for Vision Tasks: A Survey’, https://arxiv.org/pdf/2304.00685.pdf]