The number of Nvidia H100s and other Nvidia chips that an AI company has represents the AI compute resources of that company.

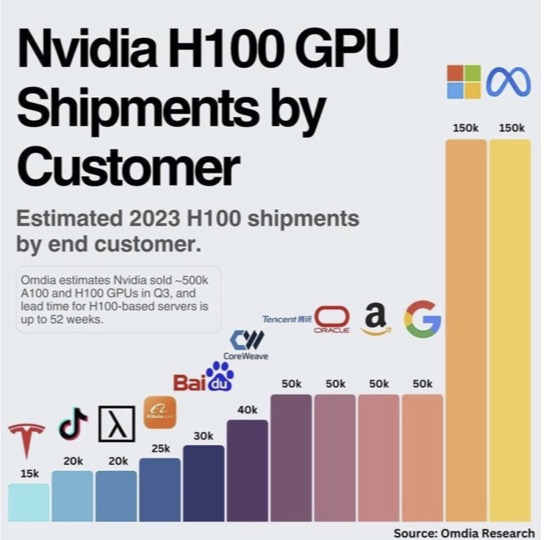

Elon indicated that a recent chart, which shows Meta leading on GPU count and Tesla trailing at 10,000 H100 GPUs, is not accurate. Microsoft and OpenAI would also have higher GPU counts. It is unclear why the Microsoft-OpenAI GPU counts were not included in the State of AI report.

The 350k H100 GPUs for Meta would be about 1.4 Zettaflops.

24k H100s are about equal to 100 Exaflops of AI compute, which is mostly FP8 or FP16 calculations.
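The two figures above can be cross-checked with simple arithmetic. The sketch below assumes roughly 4 petaflops of peak low-precision throughput per H100, which is close to Nvidia's quoted peak FP8 number with sparsity. That per-GPU figure is an assumption and is not stated in this article, and real sustained training throughput would be lower. The function and constant names are only for illustration.

```python
# Assumption: ~4 petaflops peak FP8 (with sparsity) per H100.
# This per-GPU figure is not given in the article; it is a rough peak value.
H100_PEAK_FLOPS = 4e15


def cluster_flops(gpu_count, flops_per_gpu=H100_PEAK_FLOPS):
    """Return the aggregate peak FLOPS for a number of identical GPUs."""
    return gpu_count * flops_per_gpu


def to_exaflops(flops):
    """Convert FLOPS to exaflops (1e18 FLOPS)."""
    return flops / 1e18


def to_zettaflops(flops):
    """Convert FLOPS to zettaflops (1e21 FLOPS)."""
    return flops / 1e21


meta_cluster = cluster_flops(350_000)
small_cluster = cluster_flops(24_000)

print(f"350k H100s: about {to_zettaflops(meta_cluster):.2f} zettaflops")
print(f"24k H100s: about {to_exaflops(small_cluster):.0f} exaflops")
```

Under that assumption, 350k H100s come out at about 1.4 zettaflops and 24k H100s at about 96 exaflops, which matches the rounded numbers quoted above.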

Elon said xAI was using 20,000 Nvidia H100s to train Grok 2. This training is happening now and will be done by May 2024.

100,000 H100s are needed to train Grok 3, which will be at about the level of OpenAI's GPT-5. This is the level of tokens where companies run out of real-world data. Companies will then need synthetic data and real-world video to train their models.

If xAI were to start training Grok 3 as soon as possible after Grok 2, then xAI would be purchasing and installing another 80,000 H100s, H200s or B200s.

I would estimate that Tesla and xAI have over 50k H100 equivalents and are in the process of acquiring and installing another 100-200k H100 equivalents.

xAI, Tesla and the other companies would all be acquiring Nvidia chips to get to 200,000 H100 equivalents or more by Q3 of this year and to 1 million or more by next year.

This is not accurate. Tesla would be second highest and X/xAI would be third if measured correctly.

— Elon Musk (@elonmusk) April 8, 2024

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

Will Groq (not Grok) replace Nvidia GPUs with LPUs, its dedicated Language Processing Units that are purportedly 100X faster?

https://wow.groq.com/why-groq/

It is amazing how Chinese people always find an oversimplified and misleading method of quantifying progress. Too much conformity programming?

I thought Facebook already was mostly bots?

Why does it need more AI?

Does Meta have any kind of AI product being released? I mean apart from AI-Orwell?

It would seem not. There is no “product” anyone can get, no technological “toy” beyond what we currently have. When AI generates a “thing” that makes my life better or at least more fun I’m sure I’ll be the first to know. (As I’m sure so will anyone else who gives a damn.) So far, no dice. Still waiting…