Ate-a-Pi makes a weird claim that Mark Zuckerberg does not believe AI compute scaling will deliver better performance.

There is also a claim about energy constraints on data centers, and about AI chips needing to maximize performance per watt.

Zuckerberg says that the exponential curves are still scaling. He says no one knows how long that scaling will continue. He says that Meta will keep scaling its data centers and compute as long as the scaling continues.

Microsoft and OpenAI are talking about using separate data centers for GPT-6 to work around the AI compute and energy issues.

They use this old chart of gigaflops per watt.

However, the current H100 delivers 700-2800 Gflops per watt, which is 7 to 28 times better than the 100 Gflops per watt at the end of 2020. The new B100 delivers 1300 to 10,000 Gflops per watt, roughly 2 to 4 times better than the Nvidia H100. There is data from SemiAnalysis and Nvidia on those performance improvements. Obviously, Meta, Tesla, Microsoft, Amazon and Google are trying to make their own chips to reduce the 77-79% margins they are paying Nvidia. However, dependence on Nvidia is not decreasing, because Nvidia's chips keep improving so rapidly.
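A quick check of the ratios in the paragraph above, using only the numbers it quotes. Pairing the low end of one range with the low end of the other (and high with high) is an assumption; the post does not say which precision or sparsity setting each end of a range refers to. The B100-over-H100 factors come out at about 1.9x to 3.6x, which the post rounds to 2 to 4 times.

```python
# Perf-per-watt ratios from the figures quoted in the post (GFLOPS per watt).
# Assumption: the low ends of two ranges correspond to each other, and so do
# the high ends. The post does not state which precision each end refers to.
BASELINE_2020 = 100.0          # ~end-of-2020 figure from the old chart
H100 = (700.0, 2800.0)         # low and high ends quoted for the H100
B100 = (1300.0, 10000.0)       # low and high ends quoted for the B100


def ratio_range(new, old):
    """Return (low, high) improvement factors, pairing matching range ends."""
    return (new[0] / old[0], new[1] / old[1])


h100_vs_2020 = ratio_range(H100, (BASELINE_2020, BASELINE_2020))
b100_vs_h100 = ratio_range(B100, H100)

print(f"H100 vs 2020: {h100_vs_2020[0]:.0f}x to {h100_vs_2020[1]:.0f}x")
print(f"B100 vs H100: {b100_vs_h100[0]:.2f}x to {b100_vs_h100[1]:.2f}x")
```

This prints 7x to 28x for the H100 against the 2020 baseline and 1.86x to 3.57x for the B100 against the H100.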

Meta has bought, and is still buying, over $10 billion of Nvidia compute chips in 2024.

If Nvidia continues to improve the compute per watt then the upgrade cycle will continue.

TSMC is supplying most of the AI chips at the 5 nanometer node, and TSMC is shifting 5 nanometer lines to 3 nanometer. Intel plans to become competitive at 3 nanometers in 2025. TSMC will have the 2 nanometer node ready for AI chips in 2025.

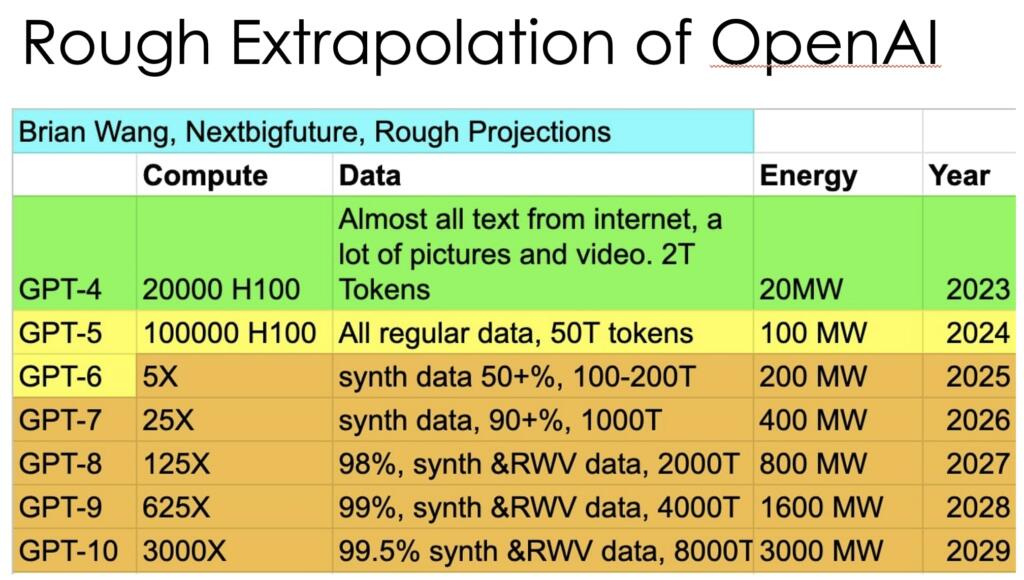

I project a 5X per year increase in compute, from doubling chip efficiency in compute per watt and doubling or more the size of the data centers. China, Tesla and others will solve the problem of supplying energy to each data center beyond the 150 megawatt level.
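The 5X projection can be read as the product of two factors: compute per watt and watts delivered to the data centers. Doubling both gives 4X, so reaching 5X with a 2X efficiency gain means growing power by about 2.5X per year. The sketch below is a back-of-the-envelope model under that assumption; the function names are hypothetical, and starting from a 150 MW site is only an illustration.

```python
# Back-of-the-envelope model: compute grows with (FLOPS per watt) x (watts).
# The 2x efficiency and 5x targets come from the projection above; the
# function names and the 150 MW starting site are hypothetical.

def yearly_compute_growth(efficiency_gain: float, power_growth: float) -> float:
    """Compute growth is the product of the efficiency gain and the power growth."""
    return efficiency_gain * power_growth


def required_power_growth(target_growth: float, efficiency_gain: float) -> float:
    """Power growth needed to reach a target compute growth at a given efficiency gain."""
    return target_growth / efficiency_gain


def compound(growth_per_year: float, years: int) -> float:
    """Total growth after compounding a yearly factor over several years."""
    return growth_per_year ** years


START_SITE_MW = 150.0  # hypothetical starting data center size

print(yearly_compute_growth(2.0, 2.0))                   # 4.0: doubling both factors
print(required_power_growth(5.0, 2.0))                   # 2.5: power growth needed for 5x
print(START_SITE_MW * required_power_growth(5.0, 2.0))   # 375.0 MW after one year
print(compound(5.0, 3))                                  # 125.0x after three years
```

Under these assumptions, a 150 MW site would need to reach roughly 375 MW within a year to keep the 5X pace, which is why the energy question dominates.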

Zuck on Dwarkesh

TLDR: AI winter is here. Zuck is a realist, and believes progress will be incremental from here on. No AGI for you in 2025.

1) Zuck is essentially an real world growth pessimist. He thinks the bottlenecks start appearing soon for energy and they will be take… pic.twitter.com/gwi7DdRLDT

— Ate-a-Pi (@8teAPi) April 20, 2024

Zuckerberg said in the interview with Dwarkesh that they were still seeing performance gains as they scale the compute.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technology and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

It’s refreshing that an engineer with a digital, binary mindset has realized biology is not so simple. 1/0’s, yes/no, on/off: nothing biological “works” using those principles. By extension, social behavior grows out of the innate plasticity of biology (aka living, adaptable things).

How many data miners does it take to change a light bulb? I’m sure AI and its previous incarnations can tell me what a light bulb is and why to replace it, but what living beings care about is when and why it’s replaced. These are the finite details, with all their data, that the “data miners” are buried in. They are drowning in data and can’t see what “matters”. Perhaps looking at “their data” through how biological systems (including humans) “do this” may help. Shi* kids, it can’t hurt…

Besides the old quote that fusion is 20 years away and always will be, there’s also the one about fusion being available in 5 years once we really need it. Maybe this is its ideal use case.

Alternative interpretation:

Zuckerberg is panicking about competition from startups such as OpenAI (ChatGPT) and is trying to undermine the investments going in their direction by suggesting that AI/ML/AGI isn’t coming any time soon.

The first tech bro to get the brain spark of mating a 1GW offshore OTEC with a fleet of Project Natick submarine datacenter pods floating like kelp is gonna be rich.

One day we might have a scale for civilizations based on percentage of electricity used for computing. For example: Type 1 – 90%, Type 2 – 99.9%, Type 3 – 99.99%

I think Zuckerbot is correct about this being another AI winter. Getting around the energy barrier requires a new semiconductor technology that we are not even close to developing. Basically some kind of nanotechnology.

Meta is one of the worst companies to get its hands on super powerful AI; I hope others get there before them. Even MS is way better.

AMD is launching its chip, but most likely its performance will be in the range of Nvidia’s older models, such as the H100, and lag behind by a year.

Nvidia has a diamond mine here.

They should build their own power plants to power super clusters, so compute could be more centralized. Wind can be built pretty fast, but it is not consistent; that is the big problem. Solar is also not consistent. Batteries store energy for only a limited time. Only dirty gas plants could do it fast enough.

Nat gas is a lot cleaner than coal, but if you’re in a time crunch, wind, solar, and Megapack batteries are your only options. You could build a 1GW mixed cluster in a couple of years, and build the equally massive data center right next to it at the same time.

Here in South Dakota we have loads of wind, and a wind turbine production facility (Marmen) just 15 miles from me.

Those compute core numbers show things like electrical loads of 3GW. Let’s just use 1GW, or 1,000MW, for argument’s sake.

The best onshore windfarms in the world typically hit about a 44% capacity factor vs nameplate. So let’s say that for 1GW you will need roughly 2,200 MWe of wind, or 2.2GWe installed at the pole.

Then say you are going to need batteries to even out the availability of that power to 24/7/365. The most efficient batteries can often reach 95% round-trip efficiency, but they age, so let’s just say 90% for ease of calculation. Then you end up needing 2,444MWe at the pole to generate the power required for one 1GW AI compute center.
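The sizing arithmetic in this comment can be written out directly. The inputs are the comment’s own assumptions (1 GW continuous load, 44% capacity factor, 90% battery efficiency); the function name is hypothetical. Applying the battery loss to every MWh treats all output as passing through storage, which overstates losses somewhat. The comment also rounds 1,000 / 0.44 ≈ 2,273 down to 2,200 before dividing by 0.9, so the unrounded chain lands near 2,525 MWe rather than 2,444 MWe.

```python
# Wind-plus-battery sizing using the comment's assumptions:
# 1 GW continuous load, 44% capacity factor, and a 90% battery efficiency
# applied to all delivered energy (a conservative simplification).

def installed_wind_mw(load_mw: float, capacity_factor: float,
                      battery_efficiency: float) -> float:
    """Nameplate wind needed so average output covers the load after storage losses."""
    return load_mw / capacity_factor / battery_efficiency


exact = installed_wind_mw(1000.0, 0.44, 0.90)
rounded_chain = 2200.0 / 0.90  # the comment rounds 1,000 / 0.44 down to 2,200 first

print(f"unrounded: {exact:,.0f} MWe")
print(f"comment's chain: {rounded_chain:,.0f} MWe")
```

Either way, the answer is in the 2.4 to 2.5 GW range of installed wind for a single 1 GW compute center.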

I’ve got some news here, and that is 2.4GW is a huge wind farm.

You can often build 1GWe of combined cycle gas in a year.

In the case of South Dakota you can do both wind, and gas. So you’re lucky in that respect. Always remember when discussing gas plants that peaking plants (which wind often needs) and combined cycle are very different in terms of fuel consumption.

In any event there is no path forward without nuclear. AI is just one more argument for that case.

If the huge hurdle is energy consumption, and they are limited by how much power the grid can handle (which leads to supercomputers being fractured across different locations), they should build their own plants.

In a few years, when they need 1,000+ MW, they really could build new power plants. They could probably resell them to the state later on. If they are spending $10+ billion on GPUs, they won’t have trouble spending a few billion on power plants. I don’t know how big a problem it is that they can’t cluster more than 100k GPUs together and have to split them across locations, but they know the cost-benefit.

Nuclear sounds good, but the plants take like an eternity to build.

I don’t believe in “Lizard People”, but if I did- Zuck would be one.

I asked this before and I will ask it again: what is Meta’s AI product that they will use to generate revenue?

AI girlfriends who will like your posts.

They want chat bots on their products like Facebook and WhatsApp, without paying a dime to OpenAI or Google.

There are a lot of potential uses: simulated friends, assistants, customer service, etc.

That’s a good question to me. What is the product here that pays for this? Tesla’s FSD at least seems to be a feasible one, with a definite market.