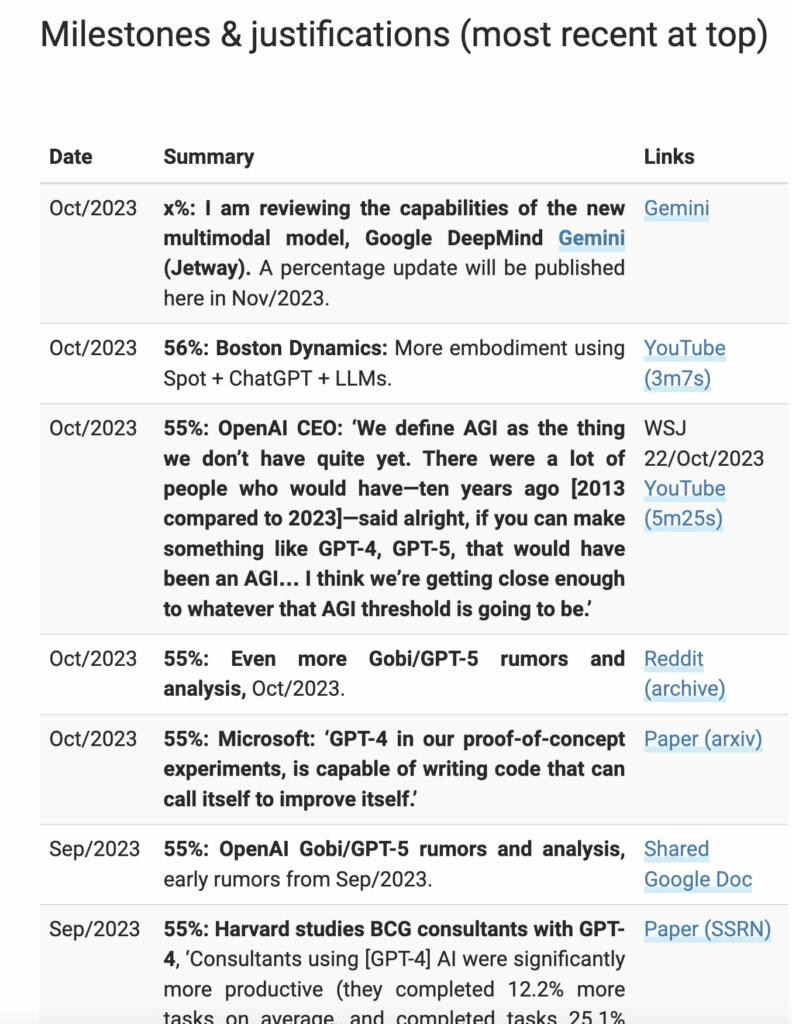

Dr. Alan Thompson has an Artificial General Intelligence (AGI) percentage tracker. Thompson’s tracker indicates that we have 56% of the capabilities for AGI now. Fitting the tracker’s data to an exponential curve, as Dennis Xiloj did at Thompson’s request, indicates that we will reach AGI in July 2025.

Alan Thompson’s Definitions of AGI and ASI

AGI = artificial general intelligence = a machine that performs at the level of an average (median) human.

ASI = artificial superintelligence = a machine that performs at the level of an expert human in practically any field.

Thompson uses a slightly stricter definition of AGI that includes the ability to act on the physical world via embodiment. Some approaches to AGI bypass embodiment and robotics entirely.

Artificial general intelligence (AGI) is a machine capable of understanding the world as well as—or better than—any human, in practically every field, including the ability to interact with the world via physical embodiment.

And the short version: ‘AGI is a machine which is as good or better than a human in every aspect’.

As requested by @dralandthompson , here is the data updated from https://t.co/5zW33mS4VO, fitted to exponential growth. Seems we will reach AGI in july 2025? pic.twitter.com/Ei4CFwkBVz

— Dennis Xiloj (@denjohx) June 23, 2023
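The tweet does not say exactly how the fit was done. The sketch below assumes a common approach: model the tracker as y(t) = a·e^(bt), fit it by ordinary least squares on ln y, then invert the curve to find when y reaches a target. The same inversion, with a target of 60% or 80%, is how milestone dates like the ones listed below can be extrapolated. The function names and the data points are hypothetical placeholders, not Thompson’s published readings.

```python
import math
from typing import Sequence, Tuple

def fit_exponential(ts: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Fit y = a * exp(b * t) by least squares on ln(y); returns (a, b)."""
    n = len(ts)
    logs = [math.log(y) for y in ys]  # linearize: ln y = ln a + b * t
    t_mean = sum(ts) / n
    l_mean = sum(logs) / n
    cov = sum((t - t_mean) * (l - l_mean) for t, l in zip(ts, logs))
    var = sum((t - t_mean) ** 2 for t in ts)
    b = cov / var                     # slope of the log-linear fit = growth rate
    ln_a = l_mean - b * t_mean        # intercept of the log-linear fit
    return math.exp(ln_a), b

def time_to_reach(a: float, b: float, target: float) -> float:
    """Invert y = a * exp(b * t) to find the t at which y equals `target`."""
    return math.log(target / a) / b

# Hypothetical data for illustration only (NOT Thompson's tracker readings):
# months since an arbitrary start date vs. "% of the way to AGI".
months = [0, 6, 12, 18, 24]
percent = [20.0, 27.0, 36.0, 44.0, 56.0]

a, b = fit_exponential(months, percent)
print(f"fitted growth rate: {b:.4f} per month")
for milestone in (60.0, 80.0, 100.0):
    print(f"{milestone:.0f}% reached around month {time_to_reach(a, b, milestone):.1f}")
```

Fitting on ln y minimizes relative rather than absolute error, which suits data spanning a wide range. Any such extrapolation past the last data point is only as good as the assumption that growth stays exponential.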

BeginningInfluence55 applied a more conservative polynomial regression to Alan Thompson’s AGI percentage figures. This method projects 100% AGI by October 2026.
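A polynomial fit is more conservative because a polynomial eventually grows more slowly than an exponential. The source does not state the degree BeginningInfluence55 used, so the sketch below assumes a quadratic, fits it by least squares (normal equations solved with Gaussian elimination, standard library only), and walks forward from the last data point until the curve reaches 100%. The function names and data are hypothetical.

```python
from typing import List, Optional, Sequence

def polyfit(xs: Sequence[float], ys: Sequence[float], degree: int) -> List[float]:
    """Least-squares polynomial fit; returns coefficients c[0] + c[1]*x + ..."""
    m = degree + 1
    # Normal equations (X^T X) c = X^T y, where X[i][j] = xs[i] ** j.
    ata = [[sum(x ** (i + j) for x in xs) for j in range(m)] for i in range(m)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(m)]
    aug = [row[:] + [rhs] for row, rhs in zip(ata, aty)]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, m):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, m + 1):
                aug[r][k] -= factor * aug[col][k]
    # Back substitution.
    coeffs = [0.0] * m
    for i in range(m - 1, -1, -1):
        s = sum(aug[i][k] * coeffs[k] for k in range(i + 1, m))
        coeffs[i] = (aug[i][m] - s) / aug[i][i]
    return coeffs

def evaluate(coeffs: Sequence[float], x: float) -> float:
    """Evaluate the polynomial with the given coefficients at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))

def first_crossing(coeffs: Sequence[float], start: float, target: float,
                   step: float = 0.1, max_steps: int = 10_000) -> Optional[float]:
    """Step forward from `start` until the curve reaches `target`; None if it never does."""
    for i in range(max_steps + 1):
        x = start + i * step
        if evaluate(coeffs, x) >= target:
            return x
    return None

# Hypothetical data for illustration only (NOT Thompson's tracker readings).
months = [0, 6, 12, 18, 24]
percent = [20.0, 27.0, 36.0, 44.0, 56.0]
coeffs = polyfit(months, percent, degree=2)
crossing = first_crossing(coeffs, start=months[-1], target=100.0)
print("never reaches 100%" if crossing is None else f"100% around month {crossing:.1f}")
```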

Next AGI milestones, according to Dr. Alan Thompson:

– Around 50%: HHH (helpful, honest, harmless), as articulated by Anthropic, with a focus on groundedness and truthfulness. Mustafa Suleyman, co-founder of DeepMind and founder of Inflection AI (pi.ai), says: ‘LLM hallucinations will be largely eliminated by 2025’.

– Around 60%: Physical embodiment backed by a large language model. The AI is autonomous and can move and manipulate objects. This mainly means humanoid robots such as Teslabot and Sanctuary AI Phoenix. Extrapolated to be February to April 2024.

– Around 80%: Passes Steve Wozniak’s test of AGI: it can walk into a strange house, navigate the available tools, and make a cup of coffee from scratch. Extrapolated to be May to August 2025.

Ben Goertzel, CEO of AI and blockchain developer SingularityNET, believes the advent of artificial general intelligence (AGI) is three to eight years away, which implies AGI between 2026 and 2031. Goertzel was one of the first to define the concept of artificial general intelligence decades ago. Since 2010, Goertzel has served as Chairman and Vice Chairman of Humanity+ and the Artificial General Intelligence Society. SingularityNET is Goertzel’s company for developing AI improvements toward AGI.

“I would say now, three to eight years is my take, and the reason is partly that large language models like Meta’s Llama2 and OpenAI’s GPT-4 help and are genuine progress,” Goertzel told Decrypt.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends, including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting-edge technologies, he is currently a Co-Founder of a startup and a fundraiser for high-potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

My engagement with Large Language Models (LLMs) has led me to question the often misunderstood concept of “intelligence” attributed to them. Contrary to popular belief, my experience suggests that LLMs don’t inherently possess intelligence; instead, they adeptly compile and organize the data available to them. They also make simple errors that humans mostly do not.

Machine learning, a subset of artificial intelligence, is a formidable force that allows machines to glean insights from data without explicit programming. It has the capacity to perform intricate tasks like image recognition, natural language processing, and predictive analytics. However, it’s crucial to distinguish machine learning from human thought processes—it doesn’t engage in reasoning or thinking as humans do. Rather, it relies on algorithms to recognize patterns in data and subsequently make predictions based on these identified patterns. Essentially, machine learning serves as a sophisticated statistical analysis tool, streamlining decision-making processes through automation.
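To make “recognize patterns in data, then predict” concrete, here is a deliberately tiny sketch using a 1-nearest-neighbor classifier, one of the simplest learning methods. The comment names no specific algorithm, so this choice, the toy points, and the labels are all hypothetical illustrations.

```python
import math
from typing import Sequence, Tuple

Example = Tuple[Tuple[float, ...], str]

def predict(training: Sequence[Example], query: Sequence[float]) -> str:
    """Return the label of the stored example closest to `query`.

    No rules are programmed in: the "pattern" is just the stored data,
    and the prediction is a distance comparison, not reasoning.
    Raises ValueError if `training` is empty.
    """
    features, label = min(training, key=lambda ex: math.dist(ex[0], query))
    return label

# Hypothetical toy data: two clusters of 2-D points with labels.
TRAINING = [
    ((1.0, 1.0), "small"),
    ((1.2, 0.8), "small"),
    ((8.0, 9.0), "large"),
    ((9.0, 8.5), "large"),
]

print(predict(TRAINING, (1.1, 0.9)))  # small
print(predict(TRAINING, (8.5, 8.0)))  # large
```

The classifier can only echo labels present in its data; given a query unlike anything it has seen, it still confidently returns the nearest label, which illustrates the point about statistical pattern-matching rather than thought.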

It is very important to note that artificial intelligence lacks sentience; it has no intrinsic purpose or goals of its own. Instead, it is a versatile tool crafted to accomplish specific objectives, be it optimizing efficiency, cutting costs, or enhancing overall quality of life. This crucial point is seldom considered amid the hype over AI, and it is why I believe we are unlikely to be destroyed by ASI.

Even though, in my view, AGI/ASI has no purpose of its own, it is a huge force for good.

These technologies are not just abstract concepts or hypothetical musings; they are actively fueling tools that can revolutionize and elevate business decision-making processes. The transformative capabilities of AI and ML are not merely futuristic notions—they are here, proving their mettle in the market, and are increasingly tailored to meet the specific needs and challenges faced by businesses. Embracing this technology isn’t an exercise in speculation; it’s a pragmatic step toward giving business processes a substantial and tangible upgrade.

Spot on: you’ve identified the issue that conflates and confuses most people. Moreover, whilst almost all AI researchers are working on AI compute power and efficiency (intellect per watt), few are working on will (consciousness, self-awareness, etc.). As far as I’m aware, we have no clue how consciousness arises or even in which part of the brain it is located.

I do not believe that anything which sits in an inert state when it is not being told to perform a task or prompted to think about something can be considered intelligent. Not in a general sense, not in a narrow sense, not in any sense. It simulates intelligence by using statistics to predict an output appropriate to an input.

We are learning that many things we thought required intelligence, only because intelligence was our only way of doing them, can actually be done by unintelligent but profoundly powerful network software. That’s great as long as we don’t mistake it for actual intelligence rather than simulated intelligence. The difference determines how far it is safe to rely on it to work unsupervised.

Maybe we will get real machine intelligence, maybe one day soon. Maybe multimodal networks will help develop it, but they won’t be it. One thing these systems have in common with the old “chat” bots they were named after is that they are meant to fool people into thinking they are intelligent and that you are conversing with them. That is their primary purpose, and they have achieved it to such a degree that even their creators mistake them for intelligent, but they are not.

[ AGI or ASI could build up its tasks by verifying commonly available statistics and combining them with (media) network traffic for human-centered progressive development, but that (then) is not independent ‘general (super) intelligence’ ahead of human intelligence. (Intelligence is difficult to define anyway; to try to explain with a known example: ‘What is the (economic/commercial) value of E=mc^2’ on a human-defined time scale, e.g. (500 years ago, ~1897), 1905, the ~1950s, or the 2150s?) ]

[ To avoid being misunderstood:

‘(media) network traffic for human-centered progressive development’ is no measure of the quality or usefulness (‘intelligence’) of human decisions based on agreed(?) assumptions/precautions/priorities for a defined period of time, but it could be summarized as a momentary, present-day expression of democratic will (with a note on error variance and on multiple testing/re-testing/sources for reference).

(In politics this principle is known as ‘direct democracy’, and few constitutions have implemented it (or progressed toward it) as their main principle, including safety precautions.) ]

I’m not going to call it “intelligent” until it can learn in an unsupervised environment, starting from zero, which is something humans are capable of doing.

The current AI approach is like using a billion years of selective breeding to produce an insect that can mimic a human. It’s kind of parasitic on having a huge training set of actual human behavior to copy.

That makes it very good at looking like it’s intelligent, until you look at the mistakes it makes, which reveal that there’s not really any intelligence there, just mimicry.

Mind, mimicry is probably good enough for a very large domain of tasks… Very little that humans do day to day requires much application of intelligence. Intelligence is very computationally expensive for humans, it’s more of an error handling routine than a normal mode of operation. No reason not to expect that the same would be true for AIs.

Hi Brett,

Newborn humans are fundamentally unable to survive alone; they need to be cared for over years by adults who tend to invest tens of thousands of hours in supervised teaching of the next generation. Leaving aside all the other AI considerations, humans are not a good example of intelligence arising from unsupervised learning. The only good examples in that area are octopi, which, being extremely solitary, develop and learn all by themselves. All the other intelligent animals are social, and their learning is supervised in some way.

When you compare the data sets these AIs have to go through to learn anything with the amount of bandwidth a human has over their lifetime, you’ll see a huge disconnect: human learning is enormously more efficient.

One could argue that human learning took billions of years, encoding whatever was possible to encode through evolution and natural selection, and leaving the last few thousand years of training to be distilled into a culture that is transmitted to each new generation over a few tens of thousands of hours.

If we cut ourselves, we tend to lick the wound; that is an instinct hardcoded through hundreds of thousands of years of evolutionary training (saliva contains lysozyme, which is antibacterial). If we were handling poisonous mushrooms when we cut ourselves, we know that we should not lick our finger, and that information is the distillate of trial and error over which mushrooms were edible and which were not, transmitted through culture and then taught to you in a few sentences. That is the very last layer of the training, but even in that case most of the random testing was done beforehand.

I agree, mimicry is going to be a big deal when we are able to automate tasks that we thought required intelligence just because we never had an option to complete the task without using an intelligent human. It’s unfortunate that it needs to be called “intelligence” (with all the baggage that carries like innate human fear and loathing of intelligence) in order for people to begin to understand how important it will be, but there you have it.

I agree; to use the old Aristotelian classification, these entities have intellect but lack will. And while some scholars worry that we simply haven’t recognised their will and that our complacency will allow some sort of existential threat to appear, I cannot really see that this might be the case: I’ve seen plenty of security researchers prompt LLMs to jailbreak themselves and get a functional jailbreak, yet LLMs have never been reported to take any action unprompted, surprising or otherwise. At most, they’ve hallucinated.

I, for one, as a meat bag, hail our imminent AGI overlords.

Jokes aside, while current AI still lacks some integrity and coherence, it already performs better than a sizeable part of the human population. It is good enough to soon cause huge waves of displacement in human employment, without any real AGI. The only thing that could stop a hard liftoff of post-human intelligence is the death of Moore’s law.

It’s evolving way faster than Moore’s law. A freeze on CPUs would only delay it a bit.

Everything else has already been said here:

https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-2.html

Um, no, it is just using more GPUs. Fundamentally, its GPUs are following Moore’s law.

One does not simply violate Moore’s law by adding on more machines.
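The disagreement in this thread can be framed with simple arithmetic: total compute is roughly the number of chips times the throughput per chip (ignoring utilization and interconnect losses). The sketch below assumes per-chip throughput doubles every two years, a common statement of Moore’s-law-style scaling, and uses a hypothetical 10x growth in cluster size. It shows total compute outpacing Moore’s law purely through chip count, without any single chip doing so. The function names are hypothetical.

```python
import math

def moore_factor(years: float, doubling_period: float = 2.0) -> float:
    """Per-chip speedup after `years`, assuming a fixed doubling period."""
    return 2.0 ** (years / doubling_period)

def total_compute_growth(years: float, chip_count_growth: float,
                         doubling_period: float = 2.0) -> float:
    """Total compute growth = growth in chip count x per-chip speedup."""
    return chip_count_growth * moore_factor(years, doubling_period)

def effective_doubling_period(years: float, growth: float) -> float:
    """Doubling time implied by a total growth factor over `years`."""
    return years / math.log2(growth)

# Hypothetical scenario: over 4 years the cluster grows from N to 10N GPUs.
per_chip = moore_factor(4.0)
total = total_compute_growth(4.0, chip_count_growth=10.0)
print(f"per-chip speedup: {per_chip:.1f}x")   # per-chip speedup: 4.0x
print(f"total compute growth: {total:.1f}x")  # total compute growth: 40.0x
print(f"total compute doubles every {effective_doubling_period(4.0, total):.2f} years")
```

Under these assumptions total compute doubles roughly every nine months while each chip still doubles only every two years, so both commenters can be right: capability compute can grow faster than Moore’s law, but only by spending on more hardware.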