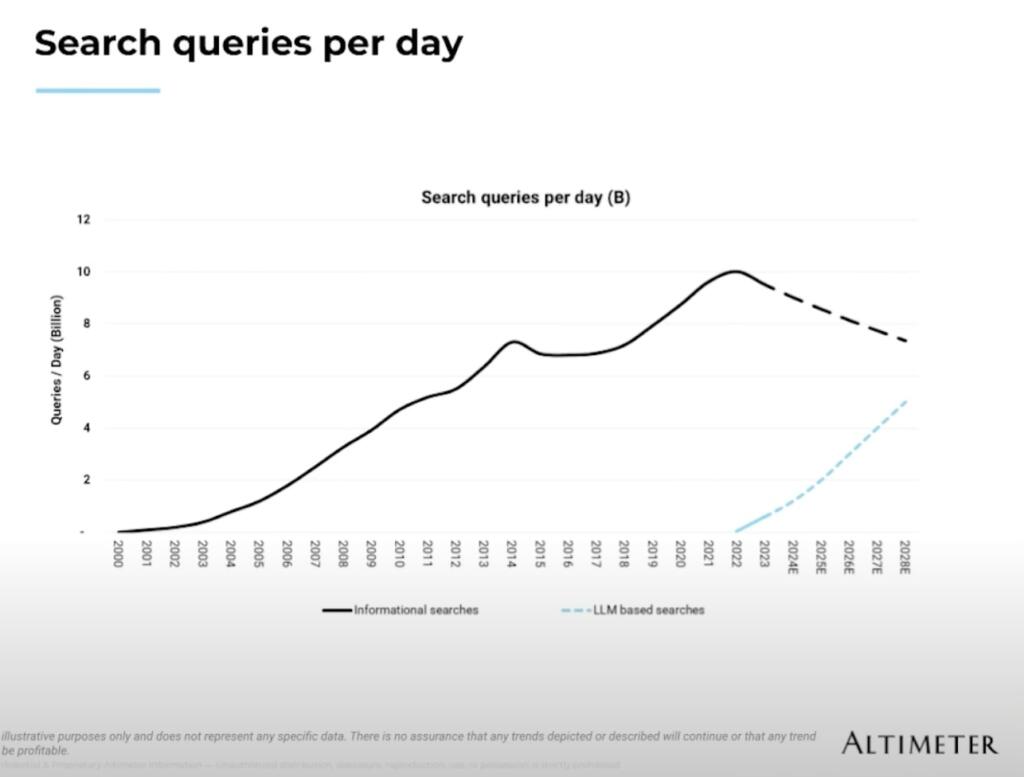

Brad Gerstner of Altimeter Capital describes how large language models (LLMs) like ChatGPT could replace Google internet search. An LLM query costs about 10 to 100 times more than a conventional internet search. However, if the cost per LLM query can be brought down consistently to 1 to 2 cents, those queries would be consistently profitable. This replatforming would shift market share toward AI query search and away from Google’s dominance of search.

The battle for AI search market share will be decided over the next 2-3 years, and the resulting market shares will shape the technology landscape for decades.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

For search I only wish for dumber search which actually searches and presents results for what you explicitly asked, without any DIE scoring, creative reinterpretation, or “authoritative sources” (where things like the UN are always placed first, and social ecosystems like Facebook, Pinterest etc. are always ranked above a random home page; a “random mom’s blog” will never be search result #1 unless you explicitly restrict searches with the site: operator).

Google has become vastly worse over time. It doesn’t matter how many operators you throw in to try and force it to search for what you wanted. If it doesn’t generate ads/ sponsored links it will ignore the query and search for something similar. If what you are searching for is obscure it has also pruned the results heavily; you used to be able to scroll and scroll forever until you find what you want. Refusing to respect the search query combined with only having a few pages of results means that anything obscure is off on page 50 and it won’t let you scroll that far regardless of how many millions of results it claims to be getting.

Searching for a specific article or blog post from years ago or for a motherboard manual from the 90’s or something like this is almost hopeless and it used to be easy.

Meh. Search. Pedestrian.

Real power and potential starts with predictions and statistical forecasts beyond data set contents – https://arxiv.org/pdf/2310.03589.pdf

OK – maybe now I am starting to respect AI potential with predictions -and- smartColony collaborations when multiple AIs, doing separate research, cross-talk across disciplines and solve things currently unexpected (to me, anyway) – such as mixing biology and quantum mechanics and therefore solving aging (as an example).

Part of this is what place does advertising have in that new paradigm. One possibility is that if everyone has a personal assistant/agent AI, that agent could know everything about you, be a major part of establishing and defending your identity, and handle business on your behalf under your direction.

For advertising that means it could negotiate with various advertisers based on who you are and what your needs and interests are. You could be paid to listen to sales pitches. Consider a drug company that now spends $100M to reach 100M people in a campaign – 99% of whom have no interest and the relevant 1% provide no feedback and only get a brief simple ad. Instead, if negotiating with AI agents, they could reach just the 1M people who might use the drug, and pay them $100 each to participate in an interactive 10 minute presentation. A much better deal for both sides and the other 99% who aren’t bothered with the irrelevant ads. The consumer only participates when they want in pitches they have some interest in and are paid for it. The ad buyer gets high quality interactive information and every pitch is a real opportunity to sell and informs a potential buyer. The pitches themselves would be conducted by AI.
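The arithmetic in that comparison can be checked with a rough sketch. The dollar amounts, audience sizes, and the 1% relevance figure come from the comment above; the variable and function names are made up for illustration, and this is not a model of any real ad market.

```python
# Figures from the comment above; all names here are hypothetical.
BROADCAST_SPEND = 100_000_000    # $100M campaign
BROADCAST_REACH = 100_000_000    # 100M people reached
RELEVANT_PERCENT = 1             # 1% have any interest in the drug

TARGETED_REACH = 1_000_000       # the 1M people who might use the drug
PAYMENT_PER_PERSON = 100         # $100 paid to each participant
MINUTES_PER_PITCH = 10           # interactive presentation length


def cost_per_person(total_spend: int, people: int) -> float:
    """Average spend per person in a given audience."""
    return total_spend / people


# Broadcast campaign: everyone sees a brief ad, only 1% are relevant.
broadcast_relevant = BROADCAST_REACH * RELEVANT_PERCENT // 100
broadcast_cost_per_view = cost_per_person(BROADCAST_SPEND, BROADCAST_REACH)
broadcast_cost_per_relevant = cost_per_person(BROADCAST_SPEND, broadcast_relevant)

# Agent-negotiated campaign: only relevant people are reached, and paid.
targeted_spend = TARGETED_REACH * PAYMENT_PER_PERSON
targeted_cost_per_relevant = cost_per_person(targeted_spend, TARGETED_REACH)
total_pitch_minutes = TARGETED_REACH * MINUTES_PER_PITCH

print(f"Broadcast: ${broadcast_cost_per_view:.2f} per viewer, "
      f"${broadcast_cost_per_relevant:.2f} per relevant viewer")
print(f"Targeted:  ${targeted_spend:,} total, "
      f"${targeted_cost_per_relevant:.2f} per relevant viewer, "
      f"{total_pitch_minutes:,} minutes of interactive pitch")
```

Under these numbers the total spend is the same in both cases. The difference is that the targeted budget goes to the interested 1% as payment for a 10 minute interactive session, instead of being spread across brief ads that 99% of viewers ignore.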

The first, the very first thing I’d want, for a personal AI assistant/agent, would be it running on my own local hardware, with the option to opt out of updates. If it’s running on somebody else’s hardware, the safe assumption is that it’s working for that somebody else, not you. And I don’t want to get sucked into relying on an AI, only to have the latest update brick it, or more likely subvert it.

Good point! Companies are going to do everything they can do, permissible by law, to turn a profit. The only way to protect yourself from a company trying to brick your system is to “airgap” it. That’s why I love this forum, so many good ideas.

So in networking, “airgap” literally means no internet. An AI with no internet to pull stuff from is, by definition, tits on a bull. Also, if there were no profit margin then you would a. not have any AI agent, and b. if you magically had one put together by the Chinese or even our NSA here, it would have far greater issues than just corporate greed.

I think this will be the selling point for iphone 16 and high end new Android phones.

The quality of search has already dropped off a cliff for all major players thanks to generated content. No thanks.

I have boycotted Google Search and Google News for three years now. Search engines are making room for new players.

Well, from a business point of view Google will not be the first to voluntarily adopt this. A big source of income for them comes from placing paid results in the list you manually have to scroll through. I’m curious how they will revise their business model and adapt to the unstoppable AI train that the competition is also hopping onto.

Admittedly, when your search results for a query contain unrelated sponsored results, it’s easier to blow it off as Google’s algorithm being glitchy than if an AI generates an essay on the Dead Sea Scrolls and goes off on some tangent about ED pills.

You’d be as wrong to attribute it to a glitchy algorithm in the first case, of course.

How does a company monetize AI answer bots then? Wrap the answer in banner ads?

Easy, just suggest use of Coke when prompting “Suggest an experiment to demonstrate the concept of viscosity and the laminar to turbulent flow transition”. They are going to do it with the cost of compute.

How about, you know, just charging you by the query, or a monthly fee, or something that doesn’t involve them distorting the results to obtain revenue?

This looks promising on paper. But, the large scale replacement of traditional search queries in favor of LLMs would require that an LLM not show bias. I understand that Google has the ability to tweak their search data and what they provide (and obviously search engines can be built to bias specifications), but I see the potential for an LLM to do it in more of a reactive way.

And there’s another side to this. It may also be possible to work with an LLM, converse with it, until you convince it to show you search results that it would not and should not otherwise show you. This would have both positive and negative potential that is wholly dependent upon the user.

No, it wouldn’t. They only need to be better than existing search engines, which are either terrible due to being small upstarts or terrible due to DEI bias, “authoritative sources” bias, and bias that promotes ad-infested, search-engine-optimized listicles, generated nonsense and a few large social media sites (Facebook, Pinterest etc.) over real content.

You used to be able to search for and find very obscure content if you knew how to use the different operators, but now operators are just a suggestion and it creatively misinterprets whatever you write in a way so as to maximize ad-infested listicles and sponsored links and minimize relevant results.

This takes your “inquire” away from you. I believe you can’t get the right answers, w/out asking the right questions. This consequence goes down the toilet when anyone or technology makes a “value judgement” on the very questions you ask. You won’t get actual effective answers if the person or technology hearing your questions, judges them to be unimportant, stupid, or just wrong. No honest question IMO is ever “wrong”. But some answers can be so seriously dumb ass.

Technology has the acceptable habit of making dumb ass answers unquestionable. Scares me poop-less…

+100

This would be a bad idea. When people search, they are looking for information, not disinformation, and LLMs too often give the latter. Yes, it’s good to have essay type answers sometimes. But mostly, it’s better just to have links to relevant websites that have actual experts on the topic searched for to dig deeper into whatever is needed. Or even just stuff to buy or to fix etc. I don’t need the LLM middleman.

From what I’ve seen this would be horrific, on an Orwellian scale.

Granted, based on the performance I’ve seen so far, it would really be good at digging up any information the people who programmed the LLM didn’t mind you finding. Even at the present level of development, LLMs are good for finding you recipes.

But the moment you get into something even only a lunatic would think was politically fraught, you’d hit a brick wall. A brick wall painted over to look like there was nothing to find, probably, but a brick wall.

A Potemkin internet lies in our future, apparently. And I do mean “lies”.

Google already filters the internet in many ways, so the filtering is already here. So it depends on which LLM is also filtering the internet, and I agree that most are horrible. Grok does a good job of being a user-value-maximization LLM, so there’s hope. If people make an LLM that has the goal of helping users instead of reeducating them then this can indeed work.

Yes, IF. You’ll notice that most of the companies pursuing this are on the reeducation side of the scale. All in to the point of absurdity.

It’s pretty dangerous that basically the only major player in platforms AND AI that isn’t all in on censorship and reeducation is controlled by one overworked middle aged guy. And he’s having to fight the rest of the IT ecosystem just to stand his ground.