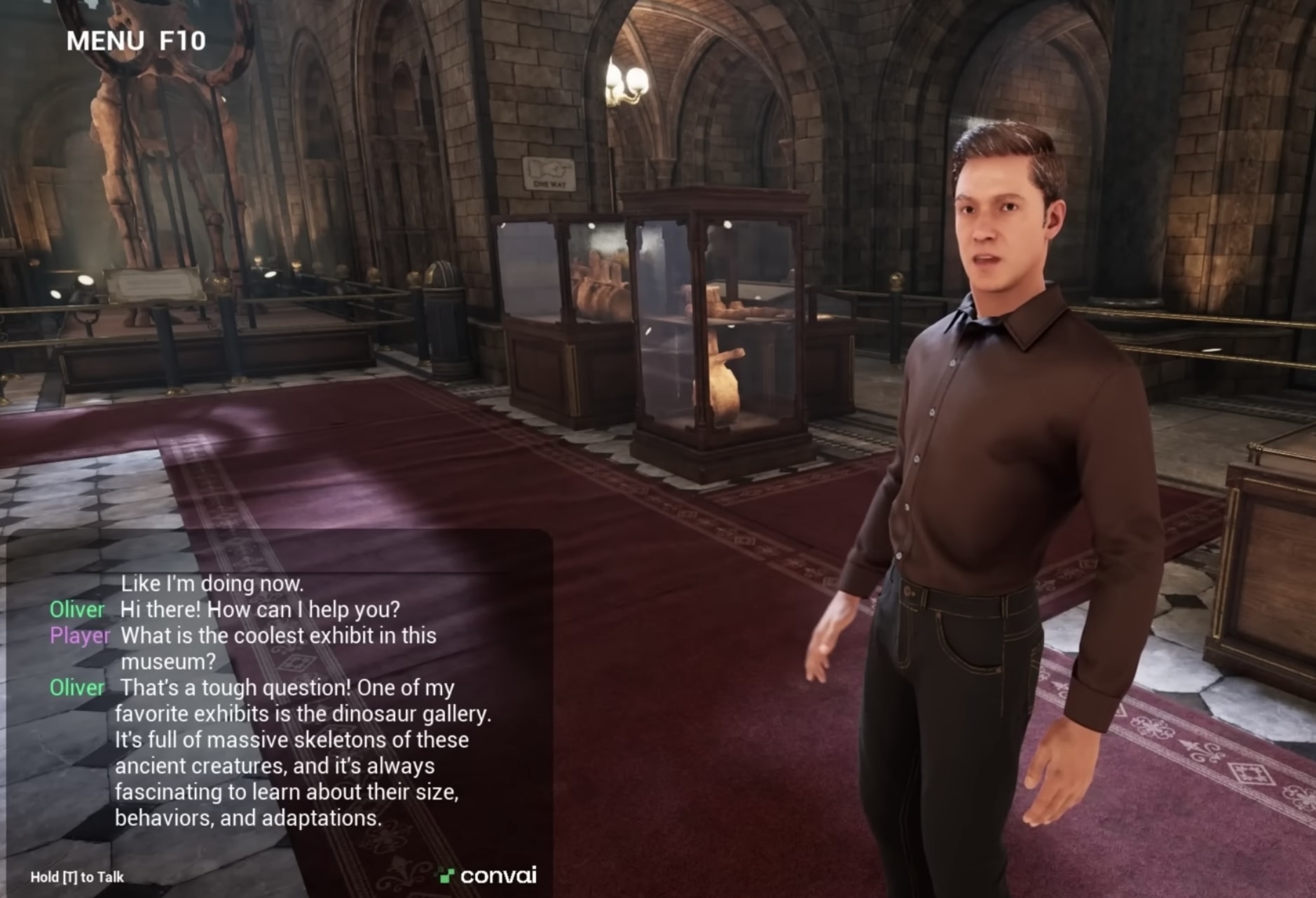

Talking with AI Characters in Real Time Is Game-Changing

Matt Wolfe interviewed the CEO of Convai, the company behind the viral NVIDIA Computex game demo. They discuss how Convai's tool for talking with AI characters works, how to create characters, and how to use it yourself, and they give a live demo. AI will enable unique conversations and make each playthrough different for every player.

Brian Wang …